Sergey Chernyshev (@sergeyche) is a web performance enthusiast, open source hacker and web addict. He organizes the New York Web Performance Meetup Group, a local community of web performance geeks in New York, and helps kick-start local Web Performance groups around the world.

He volunteers his time to run @perfplanet, the Twitter companion to the PerfPlanet site. He is also an open source developer and the author of a few web performance-related tools, including ShowSlow, SVN Assets, drop-in .htaccess and more.

Sergey also teaches a live online course about performance with O'Reilly and often speaks on performance-related topics at various local New York events and global conferences, including Velocity and QCon.

Every list of web performance recommendations has a step to utilize browser and proxy caches. When page components are loaded from the local drive instead of being transferred over the network, the page renders much faster.

In practice, a few layers of web infrastructure are involved, and I’d like to cover a few important things to know before attempting the seemingly easy task of enabling cache headers.

Which headers?

To understand how caching works, we first need to understand what tools the HTTP protocol provides for it.

The process is relatively simple: the browser sends a separate request to the server for each component on the page, and with each request it sends a series of headers specifying various properties of the request.

Some of these headers, together with the headers the server sends back in its responses, carry caching information associated with each resource (the HTML page itself, CSS, JavaScript files, images and so on).

There are three important events that happen within the cache:

- Initial load

- Re-validation

- Expiration

Initial load

On initial load, the browser doesn’t have the resource in the cache and has no metadata other than the URL to send in the request.

After the server sends the response, which includes the content body along with caching policy headers, the browser and intermediate proxy servers store the content in their memory or disk caches according to those policies, so next time they can serve the data locally without incurring the high cost of re-fetching it over the network.

Waterfall diagram of initial load

Cache policy information includes resource metadata such as the time of the resource’s last modification, sent in the Last-Modified header, and a content fingerprint uniquely identifying the version of the content, sent in the ETag (entity tag) header.

The server can also specify an expiration time for the resource, sent in the Expires header or in the max-age parameter of the Cache-Control header. More detailed cache policy can also be sent in the Cache-Control header, including the desired proxy behavior, whether or not caches must re-validate the object or may apply their own heuristics to avoid excessive network use, and so on.
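To make this concrete, here is a minimal Python sketch of the response side. The header names are standard HTTP; the function name, the use of an MD5 digest as the ETag and the `public` directive are illustrative assumptions, not a recommendation for any particular server.

```python
import hashlib
from email.utils import formatdate


def caching_headers(body: bytes, modified_ts: float, max_age: int, now_ts: float) -> dict:
    """Build response headers that describe a simple cache policy for one resource.

    modified_ts and now_ts are Unix timestamps; max_age is in seconds.
    """
    return {
        # When the resource last changed, as an HTTP date in GMT.
        "Last-Modified": formatdate(modified_ts, usegmt=True),
        # Fingerprint of this exact version of the content (ETags are quoted strings).
        "ETag": '"%s"' % hashlib.md5(body).hexdigest(),
        # Relative lifetime in seconds; HTTP/1.1 caches prefer this over Expires.
        "Cache-Control": "public, max-age=%d" % max_age,
        # Absolute expiration date, also understood by older HTTP/1.0 caches.
        "Expires": formatdate(now_ts + max_age, usegmt=True),
    }
```

For example, `caching_headers(b"body{}", 0, 3600, 0)` returns an `Expires` value of `Thu, 01 Jan 1970 01:00:00 GMT`, one hour after an artificial "now" set to the Unix epoch.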

Re-validation

If the resource is already in the cache, the browser can use the cache policy data to make a conditional request the next time it needs the resource, telling the server to transfer the content over the network only if it has been updated.

The browser issues a so-called conditional GET request, which sends back some of the cache policy properties: the time of modification in the If-Modified-Since request header, or the fingerprint in the If-None-Match header. The server can either send the full response back as if it were a regular non-conditional request, or return a 304 Not Modified response without a content body, indicating that the browser (or proxy) should reuse its locally cached copy.
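The server-side decision can be sketched as follows, assuming request headers arrive in a plain dict with canonical names. The sketch is simplified: ETags are compared as exact strings (weak `W/` validators are not handled), and, as HTTP/1.1 specifies, If-Modified-Since is ignored whenever If-None-Match is present.

```python
from email.utils import parsedate_to_datetime


def conditional_status(request_headers: dict, etag: str, last_modified: str) -> int:
    """Return 304 if the client's cached copy is still valid, otherwise 200.

    etag is the quoted ETag of the current version; last_modified is its HTTP date.
    """
    if_none_match = request_headers.get("If-None-Match")
    if if_none_match is not None:
        # Fingerprint check: the client may list several ETags it already holds.
        candidates = [tag.strip() for tag in if_none_match.split(",")]
        return 304 if etag in candidates or "*" in candidates else 200
    if_modified_since = request_headers.get("If-Modified-Since")
    if if_modified_since is not None:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            return 200  # unparseable date: fall back to a full response
        # Date check: unchanged since the client's copy means "reuse it".
        if parsedate_to_datetime(last_modified) <= since:
            return 304
    return 200
```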

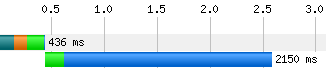

Waterfall diagram with re-validation of second request

Expiration

The best news in caching is the ability of the server to specify a period of time during which clients should reuse the resource without attempting to fetch it from the network. That’s right: clients do not have to make a network request at all until the expiration period is over.

To accomplish this, the server can send either an Expires header with an absolute end date/time or a Cache-Control header with a max-age parameter specifying a time interval, in seconds, relative to the moment of the request.

The next time the browser needs the resource and finds it in the cache, it checks whether the expiration period is over. If it is not, the browser simply uses the cached content without making a request, completely avoiding the cost of networking.
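A simplified version of the check a cache performs before reusing a stored response might look like this. It assumes timezone-aware datetimes, honors max-age before Expires (as HTTP/1.1 caches do), measures max-age from the moment of the request, and skips the heuristics real caches apply when neither header is present.

```python
from datetime import datetime, timedelta
from email.utils import parsedate_to_datetime


def is_fresh(response_headers: dict, requested_at: datetime, now: datetime) -> bool:
    """Return True if a stored response may be reused without any network request."""
    cache_control = response_headers.get("Cache-Control", "")
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            # Relative lifetime wins over Expires when both are present.
            return now - requested_at < timedelta(seconds=int(value))
    expires = response_headers.get("Expires")
    if expires is not None:
        try:
            # Absolute lifetime: fresh until the given date.
            return now < parsedate_to_datetime(expires)
        except (TypeError, ValueError):
            return False  # an invalid Expires value means "already expired"
    # No explicit lifetime: this sketch simply treats the copy as stale (re-validate).
    return False
```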

When the expiration period is over, the browser goes through the re-validation process and receives either a 304 Not Modified response or the updated content body, along with new values of the Expires or Cache-Control headers.

Read more in Mark Nottingham’s Caching Tutorial.

Problem of “Pointless 304s”

The conditional GET request is a great technique for combining initial load and re-validation into one package. It works very well for independent requests where the client has no context, as in the case of RSS readers, where one RSS feed is completely independent of another. In these cases, there is simply no way to derive cache policy information for multiple assets from one server response, which makes conditional GET the optimal solution.

With web pages, the requests that make up a page view share context: they come from a limited set of servers, belong to one or several web applications, and so on. In this case, sending conditional GETs for all assets on the page will most likely result in a series of 304 responses telling the browser that none of those assets actually changed since the last visit. Because all requests share the context, it’s probably better to somehow tell the browser about the state of all assets during the first request and avoid unnecessary re-validation for the rest of them.
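A back-of-the-envelope estimate shows why these round trips add up. The sketch assumes every conditional GET costs exactly one round trip and that requests go out in waves limited by a fixed number of parallel connections; both the model and the numbers in the example are hypothetical.

```python
import math


def revalidation_delay_ms(assets: int, parallel_connections: int, rtt_ms: float) -> float:
    """Rough extra latency spent re-validating every asset on a repeat view."""
    # Requests go out in waves of at most `parallel_connections` at a time.
    waves = math.ceil(assets / parallel_connections)
    # Each wave waits one round trip for its (content-free) 304 responses.
    return waves * rtt_ms
```

With 30 assets, 6 parallel connections and a 100 ms round trip, `revalidation_delay_ms(30, 6, 100)` gives 500 ms spent waiting for responses that carry no new content; with far-future expiration these requests are not made at all.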

Repeat view waterfall diagram for the page with Pointless 304s

Caching policy: Never Expire

Usually, when you plan caching policies, you look at the data lifecycle and determine when content changes, how often it is requested and so on. For browser and proxy caching, however, we can simplify this process and cover the majority of cases with the following two rules:

- All static content should be cached forever (that is, have a far-future expiration date). This applies to JavaScript, CSS, images, SWF, XML configuration files, the favicon and so on: almost everything other than the main HTML page.

- HTML pages should not be cached if their content is completely dynamic. If pages are static (e.g. plain HTML files on the disk drive), they can use 304 Not Modified re-validation responses to send data back only when it really changes. If some sections of your page actually change (e.g. the login and user name in the top right corner, dynamic sidebars, ads and so on), consider loading that data using AJAX, or use some dependency tracking to identify overall re-validation dates.
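The two rules can be sketched as a small policy function. The list of static extensions is a hypothetical example, and one year stands in for “forever” because HTTP/1.1 advises servers not to send Expires dates more than a year ahead. `no-store` and `no-cache` are the standard Cache-Control directives for “do not store” and “store, but re-validate before every reuse”.

```python
import os

# Hypothetical set of "static" extensions; adjust it to your own site.
STATIC_EXTENSIONS = {".js", ".css", ".png", ".gif", ".jpg", ".swf", ".xml", ".ico"}

ONE_YEAR = 365 * 24 * 60 * 60  # a common stand-in for "forever", in seconds


def cache_control_for(path: str, dynamic: bool = False) -> str:
    """Pick a Cache-Control value following the two rules above."""
    ext = os.path.splitext(path)[1].lower()
    if ext in STATIC_EXTENSIONS:
        # Rule 1: static assets get a far-future lifetime (their URLs must be versioned).
        return "public, max-age=%d" % ONE_YEAR
    if dynamic:
        # Rule 2, dynamic pages: do not keep a copy at all.
        return "no-store"
    # Rule 2, static pages: keep a copy but re-validate it (304 Not Modified).
    return "no-cache"
```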

Unique URLs

The first question developers usually ask when they hear this is: how will I cache static assets forever if my site might change in the future? All those “static” assets are not processed by PHP or another scripting engine, but they still change as development goes on!

Yes, that’s an absolutely valid question, because the last thing we want is for users to have “caching problems” when we publish new code or content but users still see old files or, even worse, a mix of old and new ones.

One might argue that we should use conditional GETs here as well, since that’s the only way to “be safe”. But there is a simple trick that lets us never request cached items again while still being sure that users get updates immediately.

The idea is simple: caches (browsers and proxies) use the resource URL together with the cache policy to determine the resource’s life cycle in the cache. To invalidate the cache, we can simply change the URL every time the resource changes. Since we as developers are in control of all of our static resources, we always know when a new resource is being deployed and when it actually changes.

All we need to do is keep track of each resource’s version or fingerprint and make it part of the URL, so that the URL changes whenever the resource does (cache busting). This lets us give static assets an effectively infinite lifetime: a far-future date as the value of the Expires header and a very long period as the Cache-Control max-age parameter.
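One minimal way to embed a version in the URL is to insert it right before the file extension, in the style of the example URLs in this article. The function name and this exact URL layout are assumptions for illustration.

```python
import posixpath


def versioned_url(path: str, version: str) -> str:
    """Embed a version or fingerprint in the file name: /image.png -> /image.<version>.png."""
    # splitext only looks at the last path segment, so dots in directory names are safe.
    root, ext = posixpath.splitext(path)
    return "%s.%s%s" % (root, version, ext)
```

Bumping the version from `123` to `124` produces a brand-new URL, so caches fetch it as a new resource, while old copies simply expire unused.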

Generating unique identifiers

There are a couple of small challenges in generating unique URLs. First, the algorithm must not depend on a specific server; for example, default ETag generation in Apache has historically used the file’s inode on the drive, causing ETags to differ from server to server. Second, URLs must change only when the content changes, not when file metadata (like modification time or permissions) does.

Using Version Control Systems

One straightforward source of versioning information is the version control system for your code. After all, it’s the most authoritative source of this info: it changes when you tell it to change.

I wrote a simple tool called SVN Assets for those who use Subversion for versioning. It extracts the version list and generates an array of versions, so all you need to do next is call the assetURL() function to get the full URL (the current implementation provides a PHP API). There is also a script that processes static CSS files, replacing the url('...') directives. Another helpful feature is the ability to globally override the base URL for your assets in case you want to host them on a CDN.

Example:

http://www.example.com/image.123.png
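SVN Assets itself provides a PHP API; the following is only a hypothetical Python analogue of the idea, not its actual code. It assumes a version map already extracted at deploy time (the paths and revision numbers are made up), builds full URLs with an overridable base URL for CDN hosting, and rewrites root-relative `url(...)` references in CSS.

```python
import posixpath
import re

# Hypothetical version map, e.g. revision numbers extracted from Subversion at deploy time.
ASSET_VERSIONS = {"/image.png": "123", "/css/bg.gif": "118"}
BASE_URL = "http://www.example.com"  # point this at a CDN host to serve assets from there

# Matches url(/path), url('/path') and url("/path") with root-relative paths only.
CSS_URL = re.compile(r"""url\(\s*(['"]?)(/[^'")\s]+)\1\s*\)""")


def asset_url(path: str, base_url: str = BASE_URL) -> str:
    """Return the full, versioned URL for an asset (unversioned if it is not in the map)."""
    version = ASSET_VERSIONS.get(path)
    if version is None:
        return base_url + path  # unknown asset: no version to add
    root, ext = posixpath.splitext(path)
    return "%s%s.%s%s" % (base_url, root, version, ext)


def rewrite_css(css: str, base_url: str = BASE_URL) -> str:
    """Replace root-relative url(...) references in a stylesheet with versioned URLs."""
    def replace(match: re.Match) -> str:
        quote, path = match.group(1), match.group(2)
        return "url(%s%s%s)" % (quote, asset_url(path, base_url), quote)

    return CSS_URL.sub(replace, css)
```

With this map, `asset_url("/image.png")` yields the example URL above, and passing a different `base_url` moves every asset to another host without touching templates or stylesheets.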

Tools like this are easy to create for any version control system and to adapt to any language or publishing platform; feel free to join the project or to create your own.

They can be run manually during deployment or automatically on a code commit event.

Content hash

Even though version control systems are a good source of information, this solution requires storing versioning info separately and updating it in sync with content changes. Moreover, it is harder to implement when your build process also includes minifying and combining CSS and JS, or automated spriting and compression of images. Deriving version numbers for the resulting assets requires more rules and logic, and is therefore more error-prone.

Another approach that solves some of these problems is to use a cryptographic hash function (e.g. MD5 or SHA-1) to get a (practically) unique string based on the content of the asset and use it as “fingerprint” information, either in URLs for static assets or in ETag headers for HTML pages (where changing the URL is not an option).

Example:

http://www.example.com/data.e4d909c290d0fb1ca068ffaddf22cbd0.txt
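Here is a minimal sketch of content fingerprinting with MD5 from Python’s `hashlib`; the function names are illustrative. Because only the bytes are hashed, the result is identical on every server and does not change when a file is touched, copied or re-permissioned, which addresses both challenges listed earlier.

```python
import hashlib
import posixpath


def fingerprint(content: bytes) -> str:
    """MD5 hex digest of the content: it changes only when the bytes change."""
    return hashlib.md5(content).hexdigest()


def fingerprinted_name(path: str, content: bytes) -> str:
    """Insert the content fingerprint before the extension: data.txt -> data.<md5>.txt."""
    root, ext = posixpath.splitext(path)
    return "%s.%s%s" % (root, fingerprint(content), ext)


def etag_for(content: bytes) -> str:
    """Strong ETag for pages whose URL cannot change, such as HTML."""
    return '"%s"' % fingerprint(content)
```

Running `fingerprinted_name("/data.txt", ...)` on the bytes `The quick brown fox jumps over the lazy dog.` yields exactly the fingerprint in the example URL above.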

Note that you don’t actually need to keep copies of the files on your drives for every URL you have generated. Only the latest versions, the ones referenced by the pages you currently serve, are needed.

The simplest solution is to keep the original file name on the drive and rewrite URLs to ignore their versioning portion; remember, it is there only as a unique identifier to allow for cache busting when we update the file’s contents.
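In production this mapping is usually a rewrite rule in the web server (for example, Apache mod_rewrite); the Python function below only sketches the logic. It assumes the version segment sits right before the extension and is either a numeric revision or a 32-character MD5 hex digest, matching the two URL styles in this article.

```python
import re

# Version segment before the extension: a numeric revision or a 32-char MD5 hex digest.
VERSIONED = re.compile(r"^(?P<root>.+)\.(?:\d+|[0-9a-f]{32})(?P<ext>\.[A-Za-z0-9]+)$")


def strip_version(url_path: str) -> str:
    """Map a versioned URL path back to the original file name on disk."""
    match = VERSIONED.match(url_path)
    if match is None:
        return url_path  # not versioned: serve the path as-is
    return match.group("root") + match.group("ext")
```

The numeric branch is deliberately naive: a file legitimately named `jquery.1.4.js` would be mapped to `jquery.1.js`, so a real setup might mark the version segment more distinctively.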

Also note that the example URLs in this article do not use query string parameters (the portion after the ? in the URL). The reason is that some intermediate proxies, and potentially some other clients, might not cache assets that have query strings, because of basic heuristics that flag such requests as calls for dynamic data.

Conclusion

Think about the caching policy for your data, figure out the URL mapping process (automated is best) and use the most aggressive cache policy for static assets.

To test the result, run WebPageTest with a repeat view (enabled by default) and look for yellow lines, which highlight 3xx responses such as 304 Not Modified. To get serious detail about caching and other request properties, check out RedBot.