Let’s start with some numbers. The speed of light in a vacuum is 299,792 kilometers per second. The refractive index of optical fiber is about 1.5, which means light travels more slowly inside the fiber. How slow? Roughly 299,792 kilometers per second divided by 1.5, which we usually round to 200,000 kilometers per second. The distance from Seoul to Buenos Aires is about 19,454 kilometers. So in theory it takes around 100 ms to send a signal one way from Seoul to Buenos Aires.
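The arithmetic can be checked with a few lines of Python. This is only a sketch of the calculation in the paragraph above; the constant and function names are made up for illustration, and the distance is the straight-line figure quoted in the text.

```python
SPEED_OF_LIGHT_KM_S = 299_792   # speed of light in a vacuum
REFRACTIVE_INDEX = 1.5          # approximate value for optical fiber
ROUNDED_FIBER_SPEED_KM_S = 200_000
DISTANCE_KM = 19_454            # Seoul to Buenos Aires, straight line


def one_way_latency_ms(distance_km, speed_km_s):
    """Time for a signal to cover distance_km at speed_km_s, in milliseconds."""
    return distance_km / speed_km_s * 1000


# Light in fiber is slower than in a vacuum by the refractive index.
speed_in_fiber = SPEED_OF_LIGHT_KM_S / REFRACTIVE_INDEX

# One-way latency using the rounded speed from the text.
latency = one_way_latency_ms(DISTANCE_KM, ROUNDED_FIBER_SPEED_KM_S)

print(f"speed in fiber: about {speed_in_fiber:,.0f} km/s")
print(f"one-way latency: about {latency:.0f} ms")
```

Note that this is a one-way figure. A request and its response need at least one full round trip, so the lower bound for a single exchange is about twice this number.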

In reality, there are many more things to consider for web applications when transferring web content from Seoul to Buenos Aires. First of all, the optical fiber line is definitely not straight, so the actual distance is much longer. Also, a three-way handshake is needed to establish a TCP connection between the two points. Then there can be multiple objects such as JavaScript, CSS and images to transfer in addition to the HTML. Finally, there is the actual time the web server takes to process a request and provide a response.

According to some studies from Google, users consider anything more than 100 ms to be a perceivable delay. That is, if your web site does not respond within 100 ms, it is considered slow. The interaction has to be smooth, just like flipping through a magazine. However, as the numbers above have shown, that can be quite a challenge.

One of the more popular tricks to lower latency for web applications is to use an edge cache. The idea is to cache JavaScript, CSS and images on servers that are close to the user (i.e. at the edge of the Internet), so the latency to access these resources is much lower.

But what about the HTML page? Normally an HTML page contains many kinds of information. Some (e.g. a news article) are cacheable. Some (e.g. personal information) are not. That makes it hard to cache the entire HTML page. And that’s where Edge Side Includes (ESI) can help.

ESI

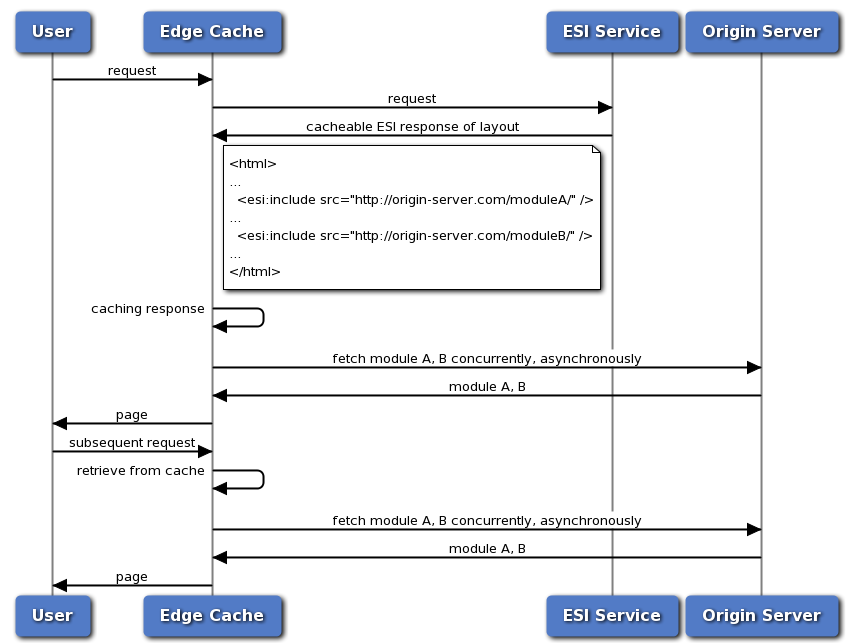

So how can ESI help? Take a look at the diagram above. Suppose we have a news article page with some user-specific modules such as advertisements and recently read news. The edge cache forwards the user request for the article page to a special ESI service. The ESI service then returns a cacheable ESI document. The document contains the content of the news article as well as an “ESI include” for each of the user-specific modules. The ESI includes are markup that instructs the edge cache to request these modules from the origin servers. The edge cache replaces each ESI include with the actual content of the corresponding response before sending the final processed response back to the user.

For subsequent requests from other users, the ESI document with the news article is already in the edge cache, and the ESI includes are processed for each user to request the corresponding personalized modules. The overall latency can be lower because much of the HTML page comes from an edge cache server close to the user, and only the personalized modules come from the origin servers, where the latency can be higher.
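The flow described above can be sketched in Python. The `<esi:include src="..."/>` tag is the include element from the ESI specification; everything else here is an assumption made for illustration: the module URLs, the `fetch_from_origin` stand-in (a real edge cache would make an HTTP request to the origin), and the simple regular expression, which handles only this one form of the tag.

```python
import re

# A cacheable ESI document: the article is shared by all users,
# while the two includes are resolved per user. URLs are hypothetical.
ESI_DOCUMENT = """<html><body>
<div id="article">Article text shared by all users.</div>
<esi:include src="/modules/ads"/>
<esi:include src="/modules/recently-read"/>
</body></html>"""

# Matches only the simple self-closing form used above.
INCLUDE_TAG = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def fetch_from_origin(src, user):
    """Hypothetical stand-in for an HTTP request to the origin server."""
    return f'<div data-src="{src}">content for {user}</div>'


def process_esi(document, user):
    """Replace every ESI include with the origin response for this user."""
    return INCLUDE_TAG.sub(
        lambda match: fetch_from_origin(match.group(1), user), document
    )


# The same cached document yields a different page for each user.
page_for_alice = process_esi(ESI_DOCUMENT, "alice")
page_for_bob = process_esi(ESI_DOCUMENT, "bob")
print(page_for_alice)
```

In this sketch `ESI_DOCUMENT` plays the role of the cached copy: it is fetched once, and only the includes are resolved again for each request.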

One can also use client-side Ajax to fetch modules to display on the page. However, JavaScript may not always be supported (e.g. on some cell phones) or enabled (e.g. the user can disable it in the browser). It also requires extra coding, while the ESI solution returns a normal HTML page and is transparent to the browser. Finally, as a rule of thumb, we would like to limit the number of HTTP connections from the client, but the Ajax solution actually creates more.

Performance Characteristics

There are two performance characteristics that we need to consider before we talk about how to start using ESI.

- Concurrent Requests for ESI includes – When there is more than one ESI include in the ESI document, we want all of these requests to be executed concurrently.

- First Byte Flush – After the edge cache server issues the requests for the ESI includes, it should start flushing the ESI document to the user up to the first ESI include. Then, when the request for that include returns a response, the edge cache should flush the content of the include to the user, followed by the rest of the ESI document up to the next ESI include.
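The two characteristics can be combined in a small Python sketch. It is not how any particular edge cache is implemented; `fetch_from_origin`, the 50 ms delay and the include URLs are assumptions for illustration. All include requests are submitted to a thread pool up front, and a generator yields the document in order, so static text is flushed without waiting for includes that come later.

```python
import re
import time
from concurrent.futures import ThreadPoolExecutor

INCLUDE_TAG = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def fetch_from_origin(src):
    """Hypothetical origin request; the sleep simulates network latency."""
    time.sleep(0.05)
    return f"[{src}]"


def stream_esi(document, executor):
    """Yield the processed document chunk by chunk, in document order."""
    # With one capture group, re.split alternates static text (even
    # indices) and include URLs (odd indices).
    parts = INCLUDE_TAG.split(document)

    # Concurrent requests: start every include fetch before flushing.
    futures = [executor.submit(fetch_from_origin, src) for src in parts[1::2]]

    # First byte flush: emit static text immediately, then block only
    # on the include that comes next in the document.
    for index, static_text in enumerate(parts[0::2]):
        if static_text:
            yield static_text
        if index < len(futures):
            yield futures[index].result()


doc = 'head <esi:include src="/a"/> middle <esi:include src="/b"/> tail'
with ThreadPoolExecutor(max_workers=4) as pool:
    chunks = list(stream_esi(doc, pool))

print(chunks)
```

Because both fetches run at the same time, the total wait in this sketch is roughly one origin round trip rather than two, and the first chunk is available before any include has finished.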

Where do I get started?

CDN vendors such as F5 and Akamai provide support for ESI, so if you are using services from these vendors, you can take advantage of that. Akamai has even extended the original specification. You can read more about it on its web site.

If you aren’t using a CDN vendor, there is still open source proxy software that supports ESI, such as Varnish and Apache Traffic Server. Varnish supports a minimal subset of the ESI specification. However, error handling is missing, so when an ESI include fails, you cannot use ESI markup to instruct Varnish to take an alternative action, such as rendering an error message or fetching a different module. You can read more about the Varnish ESI support here. Apache Traffic Server currently has an experimental ESI plugin available. It supports most of the specification and you can read more about it here.

In terms of the performance characteristics described above, Varnish supports first byte flush but not concurrent requests for ESI includes. The Apache Traffic Server ESI plugin is the opposite: it supports concurrent requests but not first byte flush. Work is under way to add first byte flush support to the Apache Traffic Server ESI plugin and it should be available soon.

Future & Conclusion

ESI can be a very powerful tool to lower latency for web sites and applications. However, it has been around for over 10 years without any change, and the markup is not powerful or expressive enough to support some modern use cases. For example, if we want to render different modules for different devices, we need to be able to apply a custom function to the User-Agent request header to determine the device type and then use different ESI includes for the modules. So an update to the specification is definitely needed, and we should see some progress on it in 2013.

For more information, you can also check out my presentation and the corresponding PDF on this topic from Velocity China 2012.