Stoyan (@stoyanstefanov) is a Facebook engineer, former Yahoo!, writer ("JavaScript Patterns", "Object-Oriented JavaScript"), speaker (JSConf, Velocity, Fronteers), toolmaker (Smush.it, YSlow 2.0) and a guitar hero wannabe.

The Facebook Like button social plugin is a URL that looks something like this:

https://www.facebook.com/plugins/like.php?href=…

It’s probably one of the most popular pages on the web today, being present on millions of websites. It’s important that this page is as fast as possible, because many people load it several times during their daily browsing, even if they don’t have a Facebook account. Making the Like button faster is a path to making the whole web a little faster. Being a part of this effort, I’d like to share what we’ve done to speed up this plugin and hopefully give you some ideas on how to improve your pages.

It’s a page

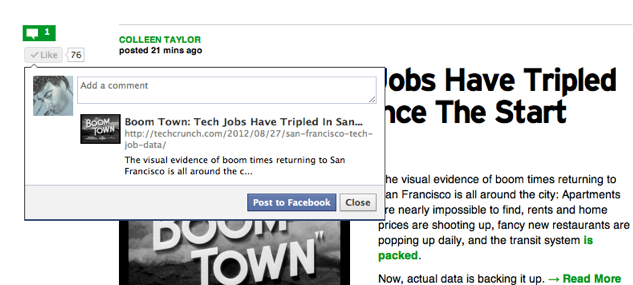

This social plugin may look like there’s not much to it, but it is a regular web page. It has HTML, images, CSS, JavaScript, XHR requests and so on. It’s also dynamic: it supports different languages, comes in different layouts, and brings up a comment flyout after you “like”.

Starting: OMG!

Before the optimization efforts began, this little page was loading around 7 separate JavaScript files, 2-3 CSS files and some sprites. It looks like quite the overkill, but the reason is that the page was using the same static resource packager that the main facebook.com site uses. This packager is quite complex and intelligent, but designed to solve a different problem. You can learn more about it from a Velocity talk (video), but in short, the packager handles a big site/app with many UI modules that can be combined in any way, and added and removed quickly in a decentralized manner. No one really knows what goes on a certain page. So the packager tracks real usage stats on how often UI modules (and their JS, CSS, images) are combined and decides which files to package together.

Resource packaging

The social plugins such as the Like button are smaller and deterministic. There can be some variations but no big surprises or features that change day to day (or per user, or per region, etc). So the first order of business was to take a more manual approach to packaging since we know which JS/CSS modules we’ll need.

After this step we ended up with 1 JavaScript resource, 1 CSS and 1 sprite. Performance golden rule (reducing HTTP requests) – check. Whew!

Single hostname

DNS resolution takes time and with so few resources now, domain sharding used on the main site doesn’t make sense anymore.

So: serve all static resources from a single hostname.

Loading JavaScript asynchronously

We want to make quick first impressions, and therefore JavaScript needs to go off the critical path. After all, the plugins are secondary to the user experience: first you read an article, then you decide to click Like. JavaScript is not that critical, as long as the button looks good and ready to use. Using a simple pattern to load the single JavaScript moves it out of the way, yet the download starts as early as possible:

    var js = document.createElement('script');
    js.src = 'http://path/to/js';
    document.getElementsByTagName('head')[0].appendChild(js);

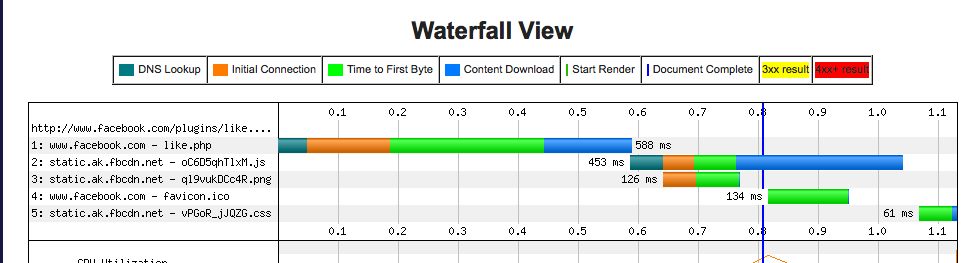

Split and inline CSS

Marching on with changes that don’t really require any actual coding: along the lines of good first impressions, we split the monolithic CSS into two parts. The first is needed immediately for initial rendering, so we inline it. The second is only needed after user action, so it can be delayed. The waterfall then looked like:

The inlining of CSS may look a little controversial, but for small amounts of CSS it’s beneficial. Of course we A/B tested this change before settling on the inlining. We lose caching but, as you may have heard, CSS is the worst type of component and needs to be done and over with as soon as possible. Otherwise your users stare at a blank page.
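A minimal sketch of the split, assuming the two CSS parts are already available as a string and a URL. The names `renderPluginHtml`, `loadLazyCss`, `criticalCss` and `lazyCssUrl` are hypothetical and only illustrate the idea; this is not the actual Facebook implementation, and the inputs are assumed to be trusted (no escaping is done).

```javascript
// Server side (hypothetical helper): inline the CSS needed for first render,
// and only record the URL of the CSS that is needed after user action.
function renderPluginHtml(options) {
  return [
    '<!DOCTYPE html>',
    '<html><head>',
    '<style>' + options.criticalCss + '</style>',
    '</head><body data-lazy-css="' + options.lazyCssUrl + '">',
    options.bodyHtml,
    '</body></html>'
  ].join('\n');
}

// Client side (hypothetical helper): request the second stylesheet later,
// for example from the click handler. `doc` is the page's document object.
function loadLazyCss(doc, href) {
  var link = doc.createElement('link');
  link.rel = 'stylesheet';
  link.href = href;
  doc.getElementsByTagName('head')[0].appendChild(link);
  return link;
}
```

In a page this could be wired up as `loadLazyCss(document, document.body.getAttribute('data-lazy-css'))` once the user clicks the button.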

Serializing hidden content

There’s some hidden content on the page that’s only shown after user action. However, IE will still download images inside of divs with display: none. So this content is better kept off the live DOM tree. It can sit inside an HTML comment, waiting to be “unwrapped”, or it can be serialized as a JSON string and injected into the DOM when needed.
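Both options can be sketched in a few lines. The helper names `unwrapHiddenContent` and `injectSerialized` are made up for illustration, and the sketch assumes the container holds the hidden markup in its first comment node (or that the markup arrives as a JSON-encoded string).

```javascript
// Option 1: the markup lives inside an HTML comment in the container.
// Comment nodes are not rendered, so images inside them are not fetched.
function unwrapHiddenContent(container) {
  var nodes = container.childNodes;
  for (var i = 0; i < nodes.length; i++) {
    if (nodes[i].nodeType === 8) { // 8 is the nodeType of a comment node
      container.innerHTML = nodes[i].nodeValue;
      return true;
    }
  }
  return false; // nothing to unwrap
}

// Option 2: the markup was serialized as a JSON string.
function injectSerialized(container, json) {
  container.innerHTML = JSON.parse(json);
}
```

Either function would be called from the handler for the user action, so the hidden images are requested only at that point.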

CSS “nubs”

While looking to reduce components, it was clear that some images can be creatively replaced with CSS. For example using CSS borders instead of image arrows.

Not a big performance win, since it takes some CSS to achieve this double-arrow effect, but it still has benefits: it looks good regardless of the user increasing font size, zooming or using “retina” resolution, and it’s easy to maintain. In fact, this is an old screenshot. The current arrows look different, but were trivial to change because it’s only a CSS change.
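The general border trick can be sketched as a small function that produces the CSS declarations for a triangle. The name `cssArrow` and its parameters are hypothetical, and this shows the common technique rather than the exact CSS used in the plugin: a zero-size box with two transparent borders and one colored border renders as an arrow. A double-arrow (outlined) effect is typically made by stacking two such triangles, one in the border color and one in the background color, offset by a pixel.

```javascript
// Returns CSS declarations for a triangle drawn purely with borders.
function cssArrow(direction, size, color) {
  // The colored border sits on the side opposite to where the arrow points.
  var opposite = { up: 'bottom', down: 'top', left: 'right', right: 'left' };
  var colored = opposite[direction];
  if (!colored) {
    throw new Error('Unknown direction: ' + direction);
  }
  // The two borders perpendicular to the colored one stay transparent.
  var sides = (colored === 'top' || colored === 'bottom')
    ? ['left', 'right']
    : ['top', 'bottom'];
  return [
    'width:0',
    'height:0',
    'border-' + sides[0] + ':' + size + 'px solid transparent',
    'border-' + sides[1] + ':' + size + 'px solid transparent',
    'border-' + colored + ':' + size + 'px solid ' + color
  ].join(';');
}
```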

Rounded corners

A little hard to believe we’re talking about rounded corners in this day and age, but here we are. UI folks wanted to maintain the Like button brand even in older IEs, so the suggestion to replace the existing complicated, image-using solution with border-radius and a simple box in IE was not acceptable. So we ended up still using border-radius where supported, and in IE we fall back to adding two superfluous B elements on top and bottom and making them one pixel short on each side, to create the illusion of roundness (simplified “nifty corners”).

Markup:

<!-- IE --> <b></b> <button /> <b></b>

Result as seen in IE7:

Same screenshot 6x bigger:

Aside: A crazy experiment, probably around that time, was the idea of using the letter O for rounded corners.

Final sweep

Acknowledging that the social plugins’ use case is different than that of a big web app (facebook.com), we tweaked the core parts that generate page markup to support a leaner use case. So we stripped some HTML and JS from the payload. And we also rewrote things like the JavaScript that collects performance data to be the bare minimum.

Rewrite

And what do you do when you run out of quick fixes? You start over. This is an opportunity to question assumptions, see how things have evolved.

AFAIK, the Like button was the first social plugin, but then others came up and piggybacked on it. It was clear how the initial JS and CSS had grown to accommodate more use cases. It was time for a clean break, creating smaller reusable UI/CSS/JS modules that can be shared and composed in new ways instead of expanding.

Rewriting and creating pieces allowed us to have smaller JS and CSS payloads. Also, because of the reduced requirements of social plugins (as opposed to the facebook.com site), we refactored and split some of the core libraries (e.g. DOM, Event).

All CSS inline

Now that we had a significantly smaller CSS payload, we could inline it all upfront. This also helps us send only the required CSS, as much as possible. For example, the “box_count” layout doesn’t have faces of your friends who have liked the page, so there’s no need to send the CSS for it.

Additionally, Facebook pages are built out of XHP components that each require their own CSS. If a component is not needed, its CSS isn’t included in the response either.

We also have our static resource system generate browser-specific CSS, so for example you don’t send -webkit-* stuff to Firefox and so on. This obviously helps reduce the CSS payload and when you’re inlining it all, every byte counts.
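To illustrate the idea (not the real static resource system, whose internals aren’t described here), the following sketch assumes a hypothetical map `CSS_BY_COMPONENT` where each rule may be tagged with the vendor prefix it targets. The function `buildInlineCss` then emits only the rules for the components actually used and for the requesting browser.

```javascript
// Hypothetical data: CSS rules per component, optionally tagged by vendor prefix.
var CSS_BY_COMPONENT = {
  button: [
    { css: '.btn{border-radius:3px}' },
    { css: '.btn{-webkit-border-radius:3px}', prefix: 'webkit' },
    { css: '.btn{-moz-border-radius:3px}', prefix: 'moz' }
  ],
  faces: [
    { css: '.faces img{width:32px}' }
  ]
};

// Collect the CSS to inline for one response.
// usedComponents: names of components rendered on this page.
// browserPrefix: 'webkit', 'moz', ... for the requesting browser.
function buildInlineCss(usedComponents, browserPrefix) {
  var out = [];
  usedComponents.forEach(function (name) {
    (CSS_BY_COMPONENT[name] || []).forEach(function (rule) {
      // Keep unprefixed rules and rules for this browser only.
      if (!rule.prefix || rule.prefix === browserPrefix) {
        out.push(rule.css);
      }
    });
  });
  return out.join('');
}
```

With this data, a “box_count”-style page that renders only `button` for a Firefox user would get neither the `faces` rules nor the `-webkit-` rule.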

Common JS

At some point we migrated to CommonJS modules from a home-grown module system. This was probably not a huge performance win, but it helped us clean up dependencies, reduce some modules’ scope and move away from globals. Technically, code in a CommonJS module system should be smaller in size, because you can lint for and remove unused modules and also minify better, since all your variables are local.

A module looks like:

    // dependencies at the top
    var DOM = require('DOM');
    var Event = require('Event');

    // actual work ...
    DOM.find('#something .or .other');
    // ...

    // exports
    module.exports = Like;

So it’s easy to lint for unused modules as they will be simply unused variables (e.g. Event). And since all symbols are local (e.g. var DOM =) they can be minified better.
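A deliberately naive sketch of such a lint check, assuming dependencies are always declared as `var Name = require('Name');`. The function name `findUnusedRequires` is hypothetical, and a real linter would parse the code instead of using regular expressions (this version would, for instance, treat a mention inside a comment as a use).

```javascript
// Returns the names of required modules that are never referenced again.
function findUnusedRequires(source) {
  var declaration = /var\s+([A-Za-z_]\w*)\s*=\s*require\([^)]*\)\s*;?/g;
  var names = [];
  // Remove the declarations themselves, remembering the variable names.
  var rest = source.replace(declaration, function (match, name) {
    names.push(name);
    return '';
  });
  // A module is unused if its variable never appears in the remaining code.
  return names.filter(function (name) {
    return !new RegExp('\\b' + name + '\\b').test(rest);
  });
}
```

Run against a module that requires both `DOM` and `Event` but only calls `DOM.find(...)`, it reports `Event`.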

Lazy JS

Since most of the JavaScript work in the plugin is performed after a user action, it’s possible to delay the execution of the JavaScript until it’s needed. It’s still beneficial to have the JavaScript preloaded though.

Preloading JavaScript without execution is not straightforward, but it turns out not to be all that complicated either. The execution happens when a script node is added to the DOM. Additionally, IE will start fetching the external script as soon as you set the src of the script node. In other words:

    // prefetch
    var js = document.createElement('script');
    js.src = "http://path/to/js"; // IE starts loading here

    // later ...

    // execute
    document.getElementsByTagName('head')[0].appendChild(js);

This is IE-only though. For other browsers you can use XHR (if the script is on the same domain) or XHR2 (if the script is elsewhere, e.g. on a CDN domain).

    var url = 'http://path/to/js';
    var js = document.createElement('script');

    // non-IE
    if (!js.readyState || js.readyState !== 'uninitialized') {
      var xhr = new XMLHttpRequest();
      if ('withCredentials' in xhr) { // sniff XHR2
        xhr.open('GET', url, true); // asynchronous, so the preload doesn't block the page
        xhr.send(null);
      }
    }

    // IE starts loading here, thanks @getify
    js.src = url;

    // later ...

    // execute
    document.getElementsByTagName('head')[0].appendChild(js);

As you can see, you don’t worry about handling the response from the XHR request, all you need to do is make the request, so that when you add the script node to the DOM it will hopefully be in the cache. Even if this XHR preload fails, the script will still execute (although not as fast).
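The same pattern can be wrapped in a small helper that prefetches right away and hands back a function to execute later. This is only a sketch: the name `prefetchScript` is hypothetical, the document and XHR constructor are passed in as parameters purely to keep the example self-contained, and making the request asynchronous is an assumption made here so the preload doesn’t block the page.

```javascript
// Prefetch `url` now; return a function that executes the script on demand.
// `doc` is the page's document, `XHRCtor` is normally XMLHttpRequest.
function prefetchScript(doc, url, XHRCtor) {
  var js = doc.createElement('script');
  var executed = false;

  // non-IE: warm the cache with a fire-and-forget request
  if (!js.readyState || js.readyState !== 'uninitialized') {
    var xhr = new XHRCtor();
    if ('withCredentials' in xhr) { // sniff XHR2
      xhr.open('GET', url, true); // asynchronous (assumption of this sketch)
      xhr.send(null);
    }
  }

  // IE starts loading here
  js.src = url;

  // Execution happens only when the node is added to the DOM.
  return function execute() {
    if (executed) {
      return;
    }
    executed = true;
    doc.getElementsByTagName('head')[0].appendChild(js);
  };
}

// In a page (not run here):
//   var run = prefetchScript(document, 'http://path/to/js', XMLHttpRequest);
//   likeButton.onclick = run; // likeButton is a hypothetical element
```

Guarding with the `executed` flag means repeated user actions don’t append the script node more than once.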

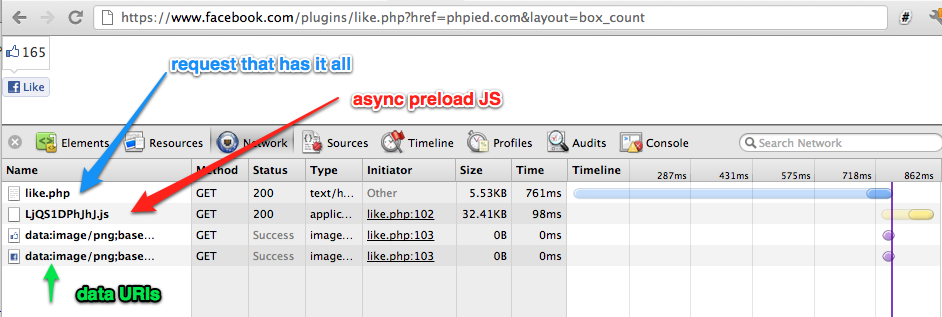

The ultimate: one request to rule them all!

Finally, why not inline the images too using data URIs and have everything render with a single request?! We tried just that:

As you can see, DOMContentLoaded and onload both fire at the end of the single request for HTML payload, since CSS and image dependencies are inline and the JS is just prefetched asynchronously.

Unfortunately this proved to be ever-so-slightly slower when tested and averaged across browsers worldwide and, very reluctantly, I had to scrap the test.
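For reference, building a data URI from image bytes can be sketched as below. The helper name `toDataUri` is hypothetical; in practice this encoding would normally happen at build time or on the server rather than in the page, and nothing here reflects how the test itself was implemented.

```javascript
// Build a data URI from a MIME type and an array of byte values (0-255).
function toDataUri(mimeType, bytes) {
  var binary = '';
  for (var i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  // btoa exists in browsers and recent Node versions; fall back to Buffer in older Node.
  var base64 = typeof btoa === 'function'
    ? btoa(binary)
    : Buffer.from(binary, 'binary').toString('base64');
  return 'data:' + mimeType + ';base64,' + base64;
}
```

The resulting string can be used anywhere a URL is expected, for example as an image src or inside a CSS url(...) value.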

Results

After using all these tricks to reduce the payload as much as possible, then inlining the most important parts (CSS, frame resizing, script loading) and delaying the JavaScript execution, you’ll get a significantly faster page. How much faster exactly? Well, today, when applying these techniques to optimize a new plugin, we see time to DOMContentLoaded and end-to-end time (after everything is done) usually cut almost in half.

And as for the Like button, today /plugins/like.php takes only about 500ms to load for the average user worldwide across all connection speeds, and under 400ms for US users on a cable connection. And this journey is not done yet!