Stefan Wintermeyer (@wintermeyer) created his first webpage when there was no <table> element defined yet (over 20 years ago). He writes books and articles about Ruby on Rails, Phoenix Framework and Web Performance. Stefan offers consulting and training as a freelancer for all three topics.

As a Web Performance consultant I tell my clients that a faster webpage will give them an edge over their competition. Lower bounce rate. Better conversion rate. Better everything. A couple of years ago I started an experiment to prove it on a green field. In January 2014 I started mehr-schulferien.de, a German webpage that displays school vacations for 27,716 German schools in 8,290 cities. Many webpages offer this data, so the competition is tough. A perfect test ground to show that a faster webpage will lead to more pageviews.

It takes time. A lot of time!

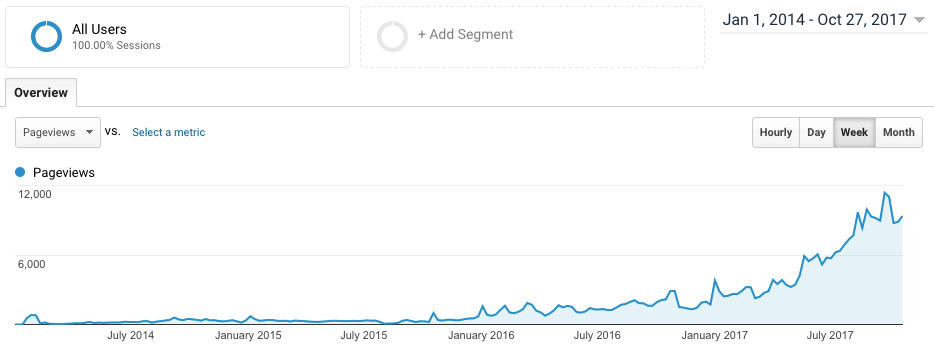

I started with a page nobody knew. No backlinks at all. I just announced it on my Twitter feed (@wintermeyer) and waited. Compound interest became my friend. It took 2 years (TWO!) to get a little bit of traction. And another two years to get to the current volume of some 10,000 pageviews per week.

During the first 4 years I didn’t change a thing on the webpage. I just sat back and watched what happened. I did upgrade from http to https at one point, but that didn’t result in an SEO push by itself.

Handle big load on a small server

I’m running the webpage on a 10-year-old, very slow server which I had to spare in 2014. The current revenue from Google Ads is about 100 Euro per month. It covers the bandwidth costs but is not enough to buy a new server. But I always saw this budget limitation as a challenge.

The first version of the page was a Ruby on Rails application. Users can update data on the page. It’s a bit like a Wiki to make it possible to update vacation dates for schools (which in some federal states can set up to 6 days of school vacation by themselves). To give you an idea of the complexity: Google currently lists some 624,000 pages for this domain.

The program logic of the page is quite complex. Depending on the school, the city, the federal state, the religion, the date and bank holidays it renders a customized calendar.

All pages were created by the Ruby on Rails application. Of course they were all Web Performance optimized (e.g. inlined CSS, smaller than 14 KB, etc.) but creating the HTML on the server became a performance bottleneck.

In 2017 I switched to a Phoenix Framework application and introduced a couple of new features for bridge days in the process. The Phoenix application was 10 times faster than the Rails application, but CPU load was still high all the time. Search engines became a problem. It’s not just Google and Bing. There is an unbelievable number of crawlers on the internet that visit the page every day. I even stopped logging their visits to save hard drive storage.

Static files, seriously?

Each page was rendered by Phoenix and then gzipped or brotli compressed by nginx. That took a lot of CPU time. But because it is a data-driven application there was no way to solve this, or was there? For a moment I thought about using Varnish Cache, but RAM was a limitation. No way I could cache 624,000 pages in RAM. But to be sure I made the calculation: A typical page on mehr-schulferien.de weighs about 118,638 bytes. The gzip compressed version is about 14,000 bytes and the brotli compressed version about 10,900 bytes. I need all three files.

624,000 pages * (118,638 + 14,000 + 10,900) = 83 GB
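For readers who want to check the arithmetic, here is a small sketch that uses only the figures quoted above. It shows that the total is about 89.6 GB in decimal units, which corresponds to the roughly 83 GB stated here when counted in binary units (GiB). The variable names are only illustrative.

```python
# Figures taken from the text above.
PAGES = 624_000
RAW_BYTES = 118_638      # uncompressed HTML
GZIP_BYTES = 14_000      # gzip compressed version
BROTLI_BYTES = 10_900    # brotli compressed version

# All three variants of each page have to be stored.
per_page = RAW_BYTES + GZIP_BYTES + BROTLI_BYTES
total_bytes = PAGES * per_page

print(f"per page: {per_page:,} bytes")
print(f"total:    {total_bytes / 10**9:.1f} GB (decimal, 10^9 bytes)")
print(f"total:    {total_bytes / 2**30:.1f} GiB (binary, 2^30 bytes)")
```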

83 GB was not feasible to store in RAM on the given hardware, but it would be no problem at all to store it on a hard drive. So I wrote a script that generates all HTML files and compresses them after each software release. Brute force everything. When data changes, the files for that school are deleted and created again in a background job. Because I don't do the compression on the fly, I can use a better but more CPU-intensive compression (brotli level 10 and zopfli --i70 for gzip). That way I save storage and get better Web Performance.
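The idea of compressing once and deleting on change can be sketched in a few lines. This is not the script used for mehr-schulferien.de: the function names `precompress` and `invalidate` and the `.gz` sibling file layout are assumptions for illustration, and Python's standard `gzip` module at level 9 stands in for zopfli and brotli, which are not part of the standard library. A web server would also have to be configured to serve such precompressed siblings (nginx offers a `gzip_static` module for this); whether the original setup works exactly that way is not stated in the text.

```python
import gzip
import tempfile
from pathlib import Path


def precompress(html_path: Path) -> Path:
    """Write a gzip sibling (page.html.gz) next to an already generated HTML file."""
    data = html_path.read_bytes()
    gz_path = html_path.with_name(html_path.name + ".gz")
    # Level 9 is the strongest setting of the standard library (a stand-in for zopfli).
    # mtime=0 keeps the output byte-identical across runs.
    gz_path.write_bytes(gzip.compress(data, compresslevel=9, mtime=0))
    return gz_path


def invalidate(html_path: Path) -> None:
    """Delete a page and its compressed sibling so a background job can recreate them."""
    for path in (html_path, html_path.with_name(html_path.name + ".gz")):
        path.unlink(missing_ok=True)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical page name and content, for demonstration only.
        page = Path(tmp) / "school-123.html"
        page.write_text("<html>" + "<td>Ferien</td>" * 500 + "</html>", encoding="utf-8")
        gz = precompress(page)
        print(page.stat().st_size, "->", gz.stat().st_size, "bytes")
        invalidate(page)  # data changed: remove both files
```

Because the expensive compression happens only once per file, the compression level can be chosen for size rather than speed, which is the trade-off described above.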

It was only possible because I created small webpages for better web performance in the first place. I couldn’t have done it with the average 2 MB webpage. All the puzzle pieces fell into place.

It takes the server some 2 days to generate all files, but that is just a one-time effort. Because the files are stored on a hard drive, even a reboot is not a problem.

Now the Web Performance goes through the roof because 99% of all pages are small static pages which are already compressed. nginx has a very relaxed time. CPU load is rarely higher than 0.5 on a multicore system.

Without the given hardware limitations I would have never thought about this solution.

The Impact

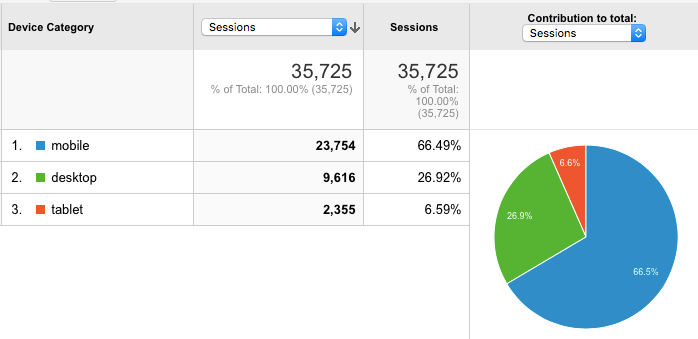

By providing very fast webpages I got a big market share on mobile devices. About 66% of all visitors use a mobile device.

The user numbers triple each year. The main reason for this is the good web performance.

Lessons Learned

Static webpages are lightning fast and use few resources. Even if your application is data driven, it makes sense to think twice about whether there is a way to create static versions of the pages. Going old school pays off in these scenarios.