Doug Sillars (@dougsillars) has been helping developers improve mobile app performance since Android 1.5. The author of O'Reilly's High Performance Android Apps, he currently leads the Video Optimizer team at AT&T, helping developers of all sizes improve their app and video performance. He and his family are digital nomads, having worked and lived in 17 countries in the last 2 years. Doug is currently looking for new opportunities in the EU or where he can continue working remotely.

As more and more customers use high bandwidth networks, video has become the norm on the web. Social media, websites and (of course) streaming services like YouTube and Netflix all stream right onto your phone. Research has shown video enhances customer engagement, so we should expect video on the web and on mobile to keep growing at a rapid pace. But what makes for good video playback? And (perhaps more importantly) how can you implement good video playback that is also highly performant?

In this post, I’ll focus on a few ways you can optimize HTTP Live Streaming (HLS) for improved delivery. These best practices also apply to MPEG-DASH and other streaming formats. They are by no means a comprehensive list, merely an introduction to ways to improve the performance of video streaming.

The Research: What Makes a Good Stream?

The answer is: it depends. Customers exhibit different behaviours for different types of streams. This intuitively makes sense: if you’re sitting down to stream a TV show or movie (over 15 minutes long), you’ll generally be more patient than for a video of a cat riding on a Roomba.

In this post, I’ll walk through 3 major indicators of video quality that are important to consider.

- Startup delay: The time from pressing play until the video starts playing.

- Stalls: No video remains in the device buffer and playback halts.

- Video Quality: How many pixels are on the screen at any given time.

These metrics are highly influenced by how quickly the video can be transported across the network. A research paper from Akamai finds that after 2 seconds of startup delay, customers begin abandoning at a rate of 5.8% per additional second. They also find that longer (and more numerous) stalls lead to abandonment. Finally, high quality video is more pleasant to watch, so avoiding pixelated and low quality video is important.

So, we want fast startup, no stalls, and high quality video to every customer. But, we also know that we have no control over the network conditions or the device being used to view our video content.

The screenshots in this post come from AT&T’s Video Optimizer, a free tool that collects network captures on your mobile device. It grades the network traffic against ~40 best practices to improve the network performance of your app. More than just video, it also looks at images, text files, connections and other network performance features as well.

How Can We Ensure Fast and Regular Video Delivery?

When it comes to video streaming, the best way to ensure fast, high quality video delivery is to have several different bitrates of the same video available for download. In HLS, a video request kicks off with delivery of a manifest file. This file (often with the extension .m3u8) itemizes the available video encodings for the video to be delivered. Each row of this text file lists information about the available streams. In the following chart, I have extracted the critical information from a video stream:

The first thing you might notice is that the ID column is slightly out of order. There are values 1-7, but the list begins with 3. Each ID lists the bandwidth, the resolution, and the audio and video codecs used to create the stream.

Video Startup

The first bitrate listed in the manifest is the video quality that the player will initially request. If this list were sequential, the video stream would have commenced at a very low quality 1 (128×320 @193 KBPS). On the plus side, 193 KBPS will download very quickly on most networks.

If the order were reversed, the initial video quality would be extremely high (676×1024 3.6 MBPS). And while great video quality is important, this could lead to a very long startup delay on a network with less than 3.6 MBPS throughput.

Best Practice #1: Place a medium bandwidth/quality stream as the first selection in the manifest to balance fast video download/startup against initial video quality.
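As a rough illustration of Best Practice #1, here is a minimal Python sketch that reorders the variants in an HLS master playlist so that a mid-ladder stream is listed first. The sample playlist, the file names and the `put_medium_first` helper are all hypothetical, and "medium" is assumed to mean the middle entry when the variants are sorted by `BANDWIDTH`; your own choice of starting stream may differ.

```python
import re

# Hypothetical master playlist; the bandwidths and URIs are made up for illustration.
SAMPLE = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=200000,RESOLUTION=320x180
low.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
mid.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=3600000,RESOLUTION=1280x720
high.m3u8
"""

def parse_variants(master_playlist):
    """Split a master playlist into header lines and (bandwidth, tag, uri) variants."""
    lines = [ln for ln in master_playlist.strip().splitlines() if ln.strip()]
    header, variants = [], []
    i = 0
    while i < len(lines):
        line = lines[i]
        if line.startswith("#EXT-X-STREAM-INF:"):
            # Match BANDWIDTH but not AVERAGE-BANDWIDTH.
            match = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            if match is None or i + 1 >= len(lines):
                raise ValueError("malformed variant entry: " + line)
            # The URI of a variant is the line that follows its tag.
            variants.append((int(match.group(1)), line, lines[i + 1]))
            i += 2
        else:
            header.append(line)
            i += 1
    return header, variants

def put_medium_first(master_playlist):
    """Return the playlist with the middle-bandwidth variant listed first."""
    header, variants = parse_variants(master_playlist)
    ordered = sorted(variants, key=lambda v: v[0])
    medium = ordered[len(ordered) // 2]          # assumption: middle of the ladder
    rest = [v for v in ordered if v is not medium]
    out = list(header)
    for _, tag, uri in [medium] + rest:
        out.extend([tag, uri])
    return "\n".join(out) + "\n"

print(put_medium_first(SAMPLE))
```

With the sample above, the 800 KBPS variant moves to the top and the remaining variants follow in ascending order.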

Video Playback

Once the player has begun downloading video segments (2-8s chunks of video to play back), it will measure the download speed. If it calculates that the network can deliver higher quality video fast enough, it will attempt to download a higher quality version of the video. Conversely, if the network appears to be slower, it will drop to a lower video quality to ensure a constant stream. Every time the video quality is changed, the manifest for the new stream is downloaded, and the player can begin downloading the new version.
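The adaptation loop described above can be sketched in a few lines of Python. This is not how any particular player works: the function names, the ladder values and the 0.8 safety factor are assumptions for illustration, and real players typically smooth their throughput estimate over several segments.

```python
# Hypothetical bitrate ladder in kilobits per second.
LADDER_KBPS = [193, 500, 800, 1500, 3600]

def measured_throughput_kbps(segment_bytes, download_seconds):
    """Estimate throughput from a single segment download."""
    return segment_bytes * 8 / 1000 / download_seconds

def pick_bitrate(available_kbps, throughput_kbps, safety_factor=0.8):
    """Pick the highest bitrate that fits within a fraction of the measured throughput."""
    budget = throughput_kbps * safety_factor
    candidates = [b for b in available_kbps if b <= budget]
    # If nothing fits, fall back to the lowest quality rather than stopping.
    return max(candidates) if candidates else min(available_kbps)

# A 500,000 byte segment that took 2 seconds to download.
throughput = measured_throughput_kbps(500_000, 2.0)
print(throughput, pick_bitrate(LADDER_KBPS, throughput))
```

Here the measured throughput is 2000 KBPS, the budget after the safety factor is 1600 KBPS, and the sketch selects the 1500 KBPS stream.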

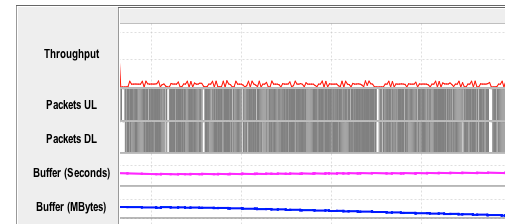

Video Optimizer can track the number of segments in the local device buffer, and reports back the amount of buffered video in seconds and MB during the data collection:

If either of these numbers reaches 0, there is no more video on the device to play back, and the video will stall.
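To make the relationship between buffer level and stalls concrete, here is a toy Python model. It assumes a constant video bitrate, one throughput sample per second, and playback that only advances when at least one full second of video is buffered. None of this reflects how Video Optimizer or a real player measures the buffer; `simulate_buffer` is a hypothetical helper.

```python
def simulate_buffer(throughput_kbps_per_second, video_kbps, start_buffer_s=0.0):
    """Return (buffer level in seconds after each second, number of stalled seconds)."""
    buffer_s = start_buffer_s
    levels, stalled = [], 0
    for throughput in throughput_kbps_per_second:
        # Seconds of video downloaded during this one second of wall-clock time.
        buffer_s += throughput / video_kbps
        if buffer_s >= 1.0:
            buffer_s -= 1.0      # one second of video is played back
        else:
            stalled += 1         # not enough video buffered: playback halts
        levels.append(round(buffer_s, 3))
    return levels, stalled

# Throughput drops from 2000 KBPS to 500 KBPS while a 1000 KBPS video plays.
levels, stalled = simulate_buffer([2000, 2000, 500, 500, 500, 500, 500, 500], 1000)
print(levels, stalled)
```

In this run the buffer grows while throughput exceeds the video bitrate, drains once it drops, and the player stalls for one second after the buffer empties.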

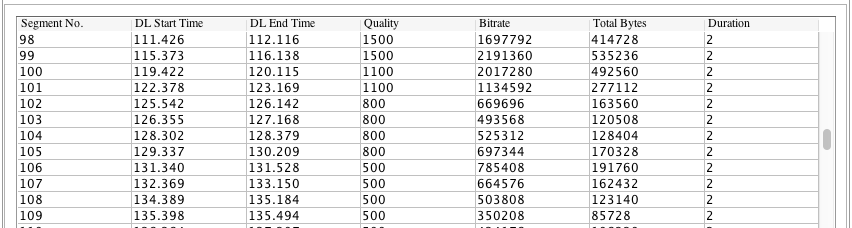

Using the Network Attenuation function in Video Optimizer, I changed the network throughput from 5 MBPS to 1 MBPS mid-stream, and we can see that the video player begins requesting lower quality video segments, dropping from 1.5 MBPS and eventually settling at 500 KBPS.

(Aside: You may be thinking – if the network throughput is 1 MBPS, why does 800 KBPS video not stream well? It turns out, there are two streams, one for video, and a 128 KBPS audio stream. The player determined that the 928 KBPS (plus overhead + analytics) was too close to 1024 KBPS, and downgraded the video further. In this case, one might make an argument for having a lower quality audio track, to ensure that the higher resolution video plays. Aside Best Practice: Audio quality (either separate stream or embedded in the video stream) has an effect on the overall bitrate of your video.)
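The arithmetic in this aside can be checked with a short Python sketch. The 0.85 utilization ceiling is an assumption chosen for illustration (the player's actual threshold is not known), as is the 64 KBPS audio alternative; `fits` is a hypothetical helper.

```python
def fits(video_kbps, audio_kbps, throughput_kbps, max_utilization=0.85):
    """Return (total bitrate, share of throughput used, whether it fits the ceiling)."""
    total = video_kbps + audio_kbps
    utilization = total / throughput_kbps
    return total, utilization, total <= throughput_kbps * max_utilization

for video, audio in [(800, 128), (500, 128), (800, 64)]:
    total, utilization, ok = fits(video, audio, 1024)
    print(video, audio, total, round(utilization, 3), ok)
```

Under these assumptions, 800 KBPS video with 128 KBPS audio uses about 91% of a 1024 KBPS link and is rejected, while 500 KBPS video fits comfortably. Halving the audio bitrate would bring the 800 KBPS video just under the assumed ceiling.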

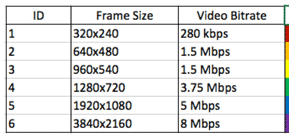

Clearly, multiple bitrates will help deliver a good video experience. The examples I’ve shown above have encodings with bitrates that increase at fairly regular intervals. This means that small changes in network throughput will have only a minor impact on the quality of the video on the screen. Compare that to this recommended bitrate list that I discovered online:

Imagine that you are viewing a video encoded this way on a mobile device with 1.4 MBPS throughput. The only possible option is ID 1, meaning that anyone on 3G will only see the lowest quality video stream. Further, the difference in video quality between streams 1&2 is probably significant. If the video moves between bitrates 1&2 several times, the change in video quality will likely be obvious to the end user. This set of encodings is not well suited for customers streaming on mobile devices.

Best Practice #2: Have multiple bitrates available, with regular intervals between qualities. This helps to ensure smooth video quality progression, and to prevent significant changes in video quality.
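One way to check a bitrate ladder against Best Practice #2 is to look at the ratio between adjacent bitrates. The Python sketch below does this; both ladders are hypothetical, and the threshold of 2.0 is an assumption rather than an established rule.

```python
def ladder_gaps(bitrates_kbps):
    """Return (lower, higher, ratio) for each pair of adjacent bitrates."""
    ordered = sorted(bitrates_kbps)
    return [(lo, hi, hi / lo) for lo, hi in zip(ordered, ordered[1:])]

def large_steps(bitrates_kbps, max_ratio=2.0):
    """Return the adjacent pairs whose bitrate ratio exceeds max_ratio."""
    return [(lo, hi) for lo, hi, ratio in ladder_gaps(bitrates_kbps) if ratio > max_ratio]

EVEN_LADDER = [200, 400, 800, 1500, 3000]   # hypothetical, regularly spaced
UNEVEN_LADDER = [1000, 4000, 8000]          # hypothetical, with one large jump

print(large_steps(EVEN_LADDER))
print(large_steps(UNEVEN_LADDER))
```

The evenly spaced ladder produces no warnings, while the uneven one flags the jump from 1000 to 4000 KBPS as a step where a quality switch is likely to be visible.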

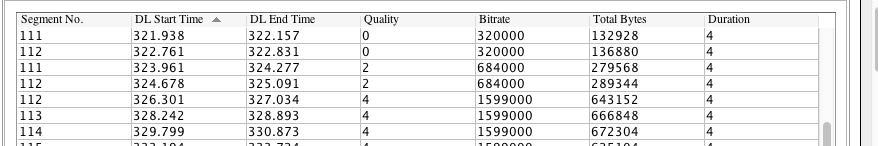

Video players vary in how aggressively they try to improve the video quality. Some video players, upon sensing a higher bandwidth, will begin a process of segment replacement, where video segments already downloaded at a lower quality are downloaded again at a higher quality. This does result in the same segment being downloaded more than once, but since it improves the displayed video, I consider it a tradeoff that is generally appreciated. For example, in the table below, segments 111-112 are initially downloaded at quality 0. The player registers an uptick in throughput, estimates that these 2 segments can be replaced, and re-downloads them at quality 2. However, the player is also quite aggressive, downloading 112 a third time at quality 4. All in all, ~2MB of data are consumed for the 4 second segment 112. This might be considered too aggressive, as it wastes a large amount of data.

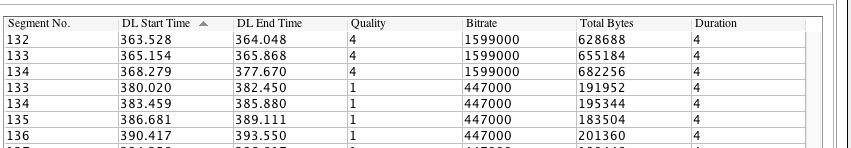

We have also seen examples of “reverse segment replacement”, where the player downloads a lower quality version after already having a higher quality version on the device. In this case, segments 134-134 are downloaded at quality 4 (1.6 MBPS), and then subsequently downloaded at quality 1 (447 KBPS):

At the very least, if quality 4 is played to the end user, ~ 370KB is wasted (the sum of the quality 1 segments). If quality 1 is played back, ~1.3MB of data is wasted, and the user is provided with degraded video playback.

Best Practice #3: If your video player aggressively pushes for high bitrate video, ensure that segment replacement only improves video quality. Monitor the data usage of segment replacement for your users (in Video Optimizer, this is reported as redundancy).
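If you log segment downloads yourself, the wasted data can be estimated with something like the Python sketch below. The log format, the byte counts and the `redundancy_report` helper are hypothetical, and the sketch assumes the highest quality copy of each segment is the one played. This is not how Video Optimizer computes its redundancy figure.

```python
from collections import defaultdict

def redundancy_report(downloads):
    """Summarize repeated downloads from (segment, quality, bytes) entries in download order."""
    by_segment = defaultdict(list)
    for segment, quality, size in downloads:
        by_segment[segment].append((quality, size))

    total = wasted = 0
    downgraded = []
    for segment, versions in by_segment.items():
        downloaded = sum(size for _, size in versions)
        _, played_size = max(versions)   # assumption: highest quality copy is played
        total += downloaded
        wasted += downloaded - played_size
        qualities = [quality for quality, _ in versions]
        # A later copy at lower quality than the previous one is a reverse replacement.
        if any(later < earlier for earlier, later in zip(qualities, qualities[1:])):
            downgraded.append(segment)

    return {
        "total_bytes": total,
        "wasted_bytes": wasted,
        "redundancy_pct": 100 * wasted / total,
        "downgraded_segments": downgraded,
    }

# Hypothetical log: (segment index, quality level, bytes downloaded).
LOG = [
    (111, 0, 100_000), (112, 0, 100_000),
    (111, 2, 400_000), (112, 2, 400_000),
    (112, 4, 800_000),
    (134, 4, 800_000), (134, 1, 200_000),
]
print(redundancy_report(LOG))
```

For this made-up log, roughly 29% of the downloaded bytes are never played, and segment 134 is flagged as a reverse replacement.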

For videos with several high bitrate streams, an aggressive bitrate algorithm can lead to increased stalls. If the local buffer is 30 MB, but the stream is playing at 8 MBPS, there may only be 2-3 seconds of video queued locally. A sudden throughput change will likely lead to a stall before the network and server can react.

Best Practice #4: When streaming high bitrate video, ensure that the device’s buffer can support many seconds of video to account for sudden throughput changes. Alternatively: restrict highest bitrates from memory limited devices.
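How many seconds of video a buffer holds is simple arithmetic, but it is sensitive to units. The Python sketch below assumes a constant bitrate, decimal megabytes, and bitrates expressed in megabits per second; both helper names are hypothetical. It is worth confirming whether your tooling reports bits or bytes per second, because the result differs by a factor of 8.

```python
def buffered_seconds(buffer_megabytes, bitrate_megabits_per_second):
    """Seconds of video that fit in a buffer of the given size."""
    return buffer_megabytes * 8 / bitrate_megabits_per_second

def buffer_needed_megabytes(target_seconds, bitrate_megabits_per_second):
    """Buffer size needed to hold target_seconds of video."""
    return target_seconds * bitrate_megabits_per_second / 8

print(buffered_seconds(30, 8))         # 30 MB buffer, 8 megabits per second
print(buffered_seconds(30, 64))        # 30 MB buffer, 8 megabytes (64 megabits) per second
print(buffer_needed_megabytes(30, 8))  # buffer needed for 30 seconds at 8 megabits per second
```

The same 30 MB buffer holds 30 seconds of video at 8 megabits per second, but under 4 seconds at 8 megabytes per second.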

Conclusion

Streaming video is becoming more and more prevalent on the web and in mobile applications. However, video streaming is complicated with dozens of potential variables, all of which can affect the quality of the playback to your customers. In this post, we’ve outlined just a few of the features in HLS streaming that can affect the video startup time, prevent stalls, and ensure the highest quality video is streamed to the customer, while minimizing wasted data.