David Calhoun (@franksvalli) has been building with the web ever since he was maintaining his personal site in high school. Career highlight so far: building a customizable satellite telemetry dashboard for Skybox Imaging, and watching the live telemetry flowing soon after the company's first imaging satellite was launched into space!

We’re about to enter a new decade, 2020 and beyond, so what better time to re-evaluate the best practices of yesteryear and see whether they still hold up? Sometimes the best practices of yesterday become the antipatterns of today…

I’ll be looking at three approaches to bundling a simple React.js “hello world” app. Some of these examples assume basic knowledge of module bundlers such as Webpack, which still seems to be the most popular module bundler out there today.

Approach #1: Bundle all the things! (big ball of yarn)

(TLDR: don’t use this approach!)

This approach simply uses a module bundler to package everything, dependencies and all, into one big ball of yarn. For my “hello world” example, this bundle includes react and react-dom as well as the “hello world” code. The entrypoint includes just this one script bundle:

<!-- index.html -->
<script src="bundle.[hash].min.js"></script>

Before HTTP/2, this pattern may have been kind of acceptable because it reduces HTTP requests, but with most sites now using HTTP/2, this has become an antipattern.

Why? Because with HTTP/2 there is no longer a big overhead with making multiple HTTP requests, removing any substantial advantage in bundling everything up.

Browser caching also becomes very problematic with this approach. Think of this: changing just one line of code in the simple “hello world” code invalidates the hash of the entire bundle, forcing all users to redownload everything, even though 99% of the code may be exactly the same compared to the last time a user visited. That’s a lot of wasted bandwidth for code that hasn’t changed.

HTTP/2 is now supported by over 95% of clients worldwide, and as of this year it is the majority protocol implemented by web servers across the web. Check out the Web Almanac for more information about HTTP/2 usage this year.

Approach #2: Separated vendor and client code (code splitting)

(TLDR: please use this approach!)

Ok, let’s learn our lesson from Approach #1 and take better advantage of browser caching by splitting out our own code from its dependencies. This solves the simple use case above where we just changed one line of code and redeployed. Now just the index file hash has changed, and the vendor hash remains the same. Returning visitors will save some bandwidth by only re-downloading the changed index file!
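To make the caching difference concrete, here is a minimal Node.js sketch. It assumes the `[hash]` in each filename is derived from the file's contents, and it uses short placeholder strings in place of real build output; the helper names (`contentHash`, `buildSingle`, `buildSplit`) are made up for this illustration.

```javascript
const crypto = require('crypto');

// Short content hash, similar in spirit to the [hash] in the filenames above.
function contentHash(source) {
  return crypto.createHash('sha256').update(source).digest('hex').slice(0, 8);
}

// Approach #1: everything ends up in one file with one hash.
function buildSingle(vendorCode, appCode) {
  return { bundle: `bundle.${contentHash(vendorCode + appCode)}.min.js` };
}

// Approach #2: vendor code and app code are hashed separately.
function buildSplit(vendorCode, appCode) {
  return {
    vendor: `vendor.${contentHash(vendorCode)}.min.js`,
    index: `index.${contentHash(appCode)}.min.js`,
  };
}

// Placeholder "source code" (hypothetical, not real bundles).
const vendorCode = '/* react + react-dom */';
const appBefore = 'render("hello world");';
const appAfter = 'render("hello, world!");'; // the one-line change

const singleBefore = buildSingle(vendorCode, appBefore);
const singleAfter = buildSingle(vendorCode, appAfter);
const splitBefore = buildSplit(vendorCode, appBefore);
const splitAfter = buildSplit(vendorCode, appAfter);

console.log('single bundle renamed:', singleBefore.bundle !== singleAfter.bundle);
console.log('vendor file renamed:  ', splitBefore.vendor !== splitAfter.vendor);
console.log('index file renamed:   ', splitBefore.index !== splitAfter.index);
```

With the single bundle, the one-line change renames the only file there is. With the split build, only the index file gets a new name, so the cached vendor file stays valid.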

In Webpack land, this requires some extra code splitting config to tell Webpack where the vendor code lives (node_modules in the path is a nice low-maintenance way of determining that, since all our vendor dependencies live there).
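As a rough sketch of what that config might look like, assuming Webpack 4 or later and an entry file at `./src/index.js` (the path and the output filename pattern are my assumptions, not something prescribed here):

```javascript
// webpack.config.js (a sketch, not a complete production config)
const config = {
  mode: 'production',
  // Assumed entry point; adjust to wherever your app code lives.
  entry: { index: './src/index.js' },
  output: {
    // [contenthash] changes only when the contents of that file change.
    filename: '[name].[contenthash].min.js',
  },
  optimization: {
    splitChunks: {
      cacheGroups: {
        vendor: {
          // Anything imported from node_modules counts as vendor code.
          // [\\/] matches both "/" and "\" so it also works on Windows paths.
          test: /[\\/]node_modules[\\/]/,
          name: 'vendor',
          chunks: 'all',
        },
      },
    },
  },
};

module.exports = config;
```

The `test` regular expression is the low-maintenance part: new dependencies land in the vendor chunk automatically, with no list of package names to keep up to date.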

Here’s what our site looks like now:

<!-- index.html -->
<script src="vendor.[hash].min.js"></script>
<script src="index.[hash].min.js"></script>

And here’s a waterfall chart for this approach (served on HTTP/2):

This is a step in the right direction, and we can even optimize further. If you think about it, you will realize that some of your vendor dependencies probably change more slowly than others. react and react-dom probably change the slowest, and their versions are always paired together, so they form a logical chunk that can be kept separate from other faster-changing vendor code:

<!-- index.html -->
<script src="vendor.react.[hash].min.js"></script>
<script src="vendor.others.[hash].min.js"></script>
<script src="index.[hash].min.js"></script>
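One way this split could be expressed in Webpack is with two cache groups and a `priority` to decide which group wins when both match. This is a sketch assuming Webpack 4 or later; the chunk names simply mirror the filenames above, and the entry path is an assumption.

```javascript
// webpack.config.js (a sketch of splitting React from other vendor code)
const config = {
  mode: 'production',
  entry: { index: './src/index.js' }, // assumed entry point
  output: { filename: '[name].[contenthash].min.js' },
  optimization: {
    splitChunks: {
      cacheGroups: {
        react: {
          // Matches only the react and react-dom packages themselves,
          // not packages such as react-router that merely start with "react".
          test: /[\\/]node_modules[\\/](react|react-dom)[\\/]/,
          name: 'vendor.react',
          chunks: 'all',
          priority: 20, // higher priority wins when both groups match
        },
        others: {
          // Every other module that lives in node_modules.
          test: /[\\/]node_modules[\\/]/,
          name: 'vendor.others',
          chunks: 'all',
          priority: 10,
        },
      },
    },
  },
};

module.exports = config;
```

Note that Webpack may decide not to emit a separate chunk if it would be very small, so the exact output depends on your dependencies and on the other `splitChunks` settings.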

To optimize even further, consider that visitors probably don’t need to download all of your app code to view just one page. Just like us humans have a tendency to grow larger this time of the year, over time your site’s index code will also grow larger, so eventually it may make sense to code split even further by dynamically loading individual components and even prefetching/preloading individual modules.
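The core idea of loading code on demand can be sketched in a few lines. The `lazyModule` helper below is hypothetical (it is not part of React or Webpack); it just wraps a loader function so the download starts on first use and is reused afterwards. In a real app the loader would be a dynamic `import()`, which Webpack turns into a separate chunk.

```javascript
// Wrap a loader so the module is requested at most once, on first use.
function lazyModule(loader) {
  let pending = null;
  return function load() {
    if (pending === null) {
      pending = loader(); // starts the download the first time only
    }
    return pending; // later calls share the same promise
  };
}

// In an app bundled by Webpack, usage might look like this
// ('./Settings.js' is a made-up module path):
//
//   const loadSettings = lazyModule(() =>
//     import(/* webpackChunkName: "settings" */ './Settings.js')
//   );
//   settingsButton.addEventListener('click', async () => {
//     const settings = await loadSettings();
//     settings.default();
//   });

// Stand-in loader so the sketch runs on its own.
let loaderCalls = 0;
const loadDemo = lazyModule(() => {
  loaderCalls += 1;
  return Promise.resolve({ default: () => 'settings page' });
});

const firstCall = loadDemo();
const secondCall = loadDemo();
console.log(loaderCalls, firstCall === secondCall);
```

React users would more likely reach for `React.lazy` and `Suspense`, which wrap the same dynamic `import()` mechanism for components.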

To me this still sounds pretty scary and experimental (and probably a nice opportunity for difficult bugs to creep in somewhere), but it seems clear that this is the direction we’re headed. Perhaps with the native browser support of JavaScript modules, we may eventually abandon module bundlers like Webpack altogether and simply deliver code in module-sized chunks! It’ll be interesting to see this evolve over time!

Approach #3: Public CDN for some vendor code?

(TLDR: don’t use this approach!)

If you are a bit oldschool (like yours truly), you may have a gut intuition that we could maybe benefit by replacing vendor.react in Approach #2 with script tags from a public CDN:

<!-- index.html -->
<script crossorigin src="https://unpkg.com/react@16.12.0/umd/react.production.min.js"></script>
<script crossorigin src="https://unpkg.com/react-dom@16.12.0/umd/react-dom.production.min.js"></script>
<script src="index.[hash].min.js"></script>

(note: for this approach you’d also have to separately configure Webpack to exclude react and react-dom from the index build)
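For reference, that exclusion is usually done with Webpack's `externals` option. Here is a sketch, assuming the UMD builds above, which expose the globals `React` and `ReactDOM` (the entry path is again an assumption):

```javascript
// webpack.config.js (a sketch of excluding React from the index build)
const config = {
  mode: 'production',
  entry: { index: './src/index.js' }, // assumed entry point
  output: { filename: '[name].[contenthash].min.js' },
  externals: {
    // import React from 'react'  ->  uses the global `React` at runtime
    react: 'React',
    // import ReactDOM from 'react-dom'  ->  uses the global `ReactDOM`
    'react-dom': 'ReactDOM',
  },
};

module.exports = config;
```

With this in place, the script tags for the CDN copies have to come before the index bundle, since the bundle expects both globals to exist when it runs.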

Unfortunately, this approach now has mostly downsides. Here are four strikes against it:

Strike 1: Shared vendor files between websites? Not anymore…

Traditionally, the hope was that if all sites linked to that same CDN and used the same version of React as we’re using, we could assume that visitors to our site would arrive with all the React dependencies already cached, speeding up their experience considerably. What’s more, the React.js docs support that use case, so surely some folks are using this pattern, right?

While this may have been true in the past, browsers have recently started to implement something called partitioned caches due to security and privacy concerns. What this effectively means is that even in ideal conditions where two sites point to the exact same third-party resource URL, the code is downloaded once per domain, and the cache is “sandboxed” to that domain. As Andy Davies discovered, this is already implemented in Safari (apparently it’s been around since 2013?!). And as of Chrome 77, this partitioned cache is implemented behind a flag.
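A toy model may help here. The class below is purely illustrative and is not how any browser actually implements its cache; it only assumes that a partitioned cache adds the visited site to the cache key, while a shared cache keys on the resource URL alone. The site names are made up.

```javascript
// Toy cache: counts how many times a resource has to be downloaded.
class ToyHttpCache {
  constructor({ partitioned }) {
    this.partitioned = partitioned;
    this.keys = new Set();
    this.downloads = 0;
  }

  // topLevelSite: the site the user is visiting; url: the resource requested.
  request(topLevelSite, url) {
    const key = this.partitioned ? `${topLevelSite} ${url}` : url;
    if (!this.keys.has(key)) {
      this.keys.add(key);
      this.downloads += 1; // cache miss: fetch over the network
    }
  }
}

const reactUrl = 'https://unpkg.com/react@16.12.0/umd/react.production.min.js';

const shared = new ToyHttpCache({ partitioned: false });
shared.request('site-a.example', reactUrl);
shared.request('site-b.example', reactUrl);

const partitioned = new ToyHttpCache({ partitioned: true });
partitioned.request('site-a.example', reactUrl);
partitioned.request('site-b.example', reactUrl);

console.log(shared.downloads);      // 1: the second site gets a cache hit
console.log(partitioned.downloads); // 2: each site downloads its own copy
```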

The Web Almanac also makes an educated guess that usage of these public CDNs will decrease as more browsers implement partitioned caches.

(credit to Anthony Barré’s article, Joseph Scott’s comment on that article, and research by Andy Davies)

Strike 2: Extra time for pre-request stuff (for every domain)

Also mentioned in Anthony Barré’s article is the fact that using a public CDN incurs a cost from all the browser back and forth that has to happen before an HTTP request can even be sent: DNS resolution, initial connection, and SSL handshake. This already has to happen one way or another for browsers to connect to your main site, but connecting to the public CDN means paying that cost again for an additional domain:

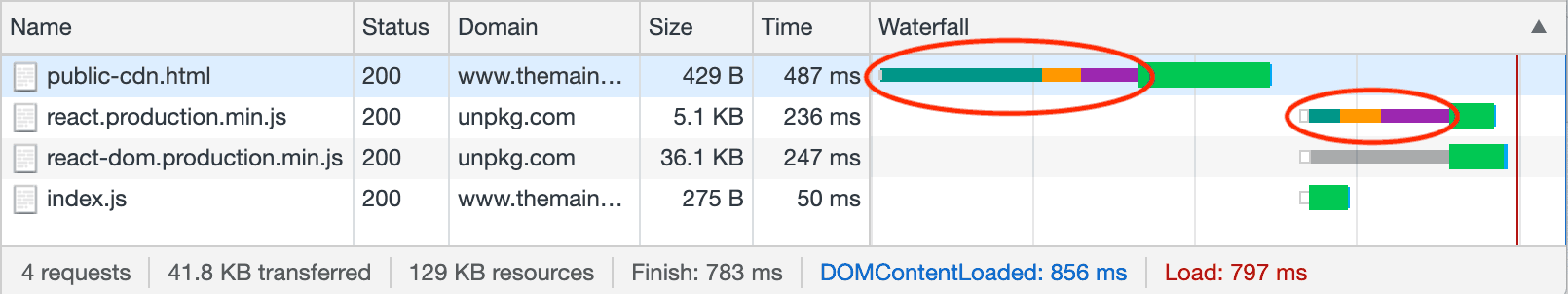

Here’s a waterfall to illustrate when we use a public CDN:

Note the areas circled in red, which show all that pre-request stuff happening behind the scenes. That’s a lot of extra pre-request overhead for just this simple “hello world”.
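If you’d like to put numbers on that overhead for your own site, the browser’s Resource Timing API exposes the relevant timestamps. The helper below is a sketch (the function name is mine), and the sample entry at the end uses made-up values so it can run outside a browser. One caveat: for cross-origin resources, these fields are reported as zero unless the other server sends a `Timing-Allow-Origin` header.

```javascript
// Summarize the time spent before the request itself could be sent,
// given a PerformanceResourceTiming-like entry (values in milliseconds).
function preRequestOverhead(entry) {
  const dns = entry.domainLookupEnd - entry.domainLookupStart;
  // connectEnd - connectStart covers the whole connection setup,
  // including the TLS handshake when the resource is served over HTTPS.
  const connect = entry.connectEnd - entry.connectStart;
  // secureConnectionStart is 0 when no secure connection was negotiated.
  const tls =
    entry.secureConnectionStart > 0
      ? entry.connectEnd - entry.secureConnectionStart
      : 0;
  return { dns, connect, tls, total: dns + connect };
}

// In the browser console, something like:
//
//   performance
//     .getEntriesByType('resource')
//     .filter((e) => e.name.startsWith('https://unpkg.com/'))
//     .map((e) => ({ url: e.name, ...preRequestOverhead(e) }));

// Made-up sample entry, for illustration only.
const sampleEntry = {
  domainLookupStart: 10,
  domainLookupEnd: 30,
  connectStart: 30,
  secureConnectionStart: 60,
  connectEnd: 110,
};
const sampleOverhead = preRequestOverhead(sampleEntry);
console.log(sampleOverhead);
```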

As my simple “hello world” site grows, I expect that a custom font will soon get added, say from Google Fonts, resulting in more of this sort of waiting from an additional domain. Suddenly the case becomes stronger for simply hosting all the assets on your main domain (behind your own CDN of course, such as Cloudflare or CloudFront).

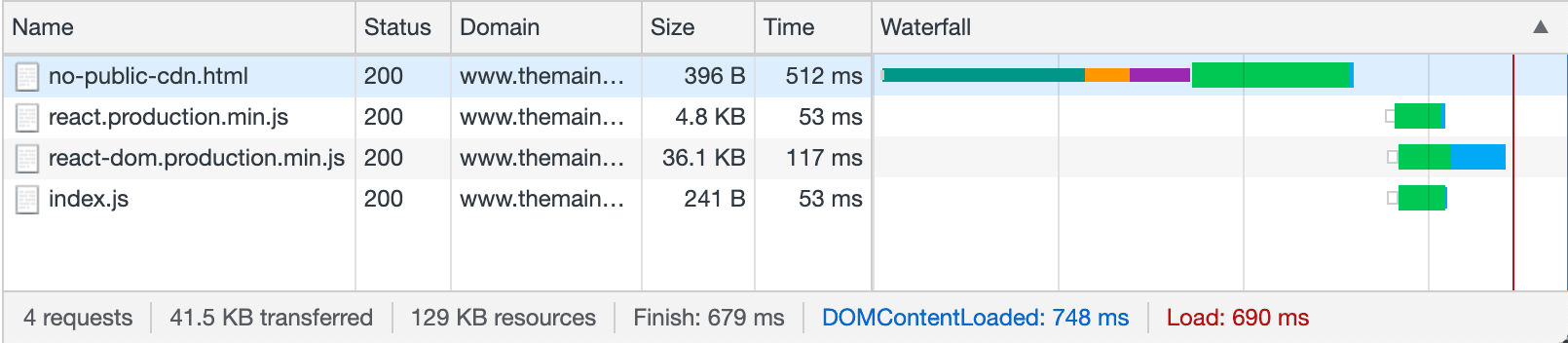

Simply moving these two React dependencies from the public CDN onto the primary domain results in a much cleaner waterfall:

Strike 3: Disparate vendor versions

I performed a quick unscientific study of 32 major websites that use React.js. Sadly, I found that only 10% used a public CDN to host React. But it turned out not to matter much. Even in an ideal world, if we could get everyone to coordinate and use the same public CDN (and without the partitioned cache concern in Strike #1), the React versions used by these websites are too disparate to be useful:

If you browse from one popular React-based website to another React-based site, the chances are not in your favor that the React versions are the same.

I did find some other tidbits about these React.js sites that you may find interesting:

- 2/3 use Webpack to bundle their code.

- 87% use HTTP/2, way more than the current 58% average.

- The majority host their own custom fonts (~56%).

You can view my raw data from this quick unscientific study here.

Strike 4: Non-performance implications

Unfortunately there are more than just performance downsides to using public CDNs today:

- Security concerns. Aside from the security concerns that are leading browsers to use partitioned caching (see Strike #1 above), there are other security concerns. If hackers compromise a public CDN, they can very subtly inject malicious JavaScript into libraries. These scripts would have all the privileges of clientside code running on your domain, and be able to access data of logged-in users.

- Privacy concerns. Many companies harvest user data from third party requests. Theoretically, if all websites implemented a public CDN for their vendor code or fonts, that CDN would be able to track a user’s session and browsing habits, and make guesses at their interests (e.g. for advertising), without the aid of cookies!

- Single point of failure. Spreading content out across multiple domains increases the chances that one of those domains will have an outage, resulting in your page getting only partially loaded. To be fair, there are JavaScript workarounds for this, but they involve some extra work.
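One common shape for such a workaround is to check whether the library’s global exists after the CDN script tag has run, and to load a self-hosted copy if it doesn’t. The sketch below is only one possible take: the `ensureGlobal` helper and the fallback path are hypothetical, and the `win` and `doc` parameters exist only so the function can be exercised outside a browser.

```javascript
// Resolve once `globalName` is available, loading a fallback copy if needed.
function ensureGlobal(globalName, fallbackSrc, win = window, doc = document) {
  if (win[globalName]) {
    return Promise.resolve('cdn'); // the CDN copy loaded fine
  }
  return new Promise((resolve, reject) => {
    const script = doc.createElement('script');
    script.src = fallbackSrc;
    script.onload = () => resolve('fallback');
    script.onerror = () => reject(new Error(`Could not load ${fallbackSrc}`));
    doc.head.appendChild(script);
  });
}

// In the page, after the CDN script tags (the path is made up):
//
//   ensureGlobal('React', '/vendor/react.production.min.js')
//     .then(() => { /* now it is safe to start the app */ });
```

This is where the extra work comes in: the app code has to wait for the promise before touching React, and you still have to host and update the fallback copies yourself.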

Conclusions

Clearly the future is in Approach #2.

Put your app behind HTTP/2 and a non-shared CDN (e.g. Cloudflare or CloudFront), and serve your site in several bite-sized chunks to take advantage of caching. These bite-sized chunks may get even smaller in the future, probably at the JavaScript module level, as browsers start to natively support JavaScript modules.