Alex Podelko (@apodelko) has specialized in performance since 1997. Currently he is a staff performance engineer at MongoDB, responsible for performance testing and optimization of the MongoDB server. Before joining MongoDB, he worked for Oracle/Hyperion, Aetna, and Intel.

Alex periodically talks and writes about performance-related topics, advocating tearing down silo walls between different groups of performance professionals. His collection of performance-related links and documents (including his recent articles and presentations) can be found at alexanderpodelko.com. Alex currently serves as a director for the Computer Measurement Group (CMG), an organization of performance and capacity planning professionals.

As the year coming to the end, it would be a lot of posts about technology trends. So I wanted to share my personal thoughts here too – as it appears that we are getting close to drastic changes in performance management. Recently I shared my list of five trends in performance engineering in 2020 State of Performance Engineering report (you can find them on pages 69-70). But, actually, they are all interconnected and the main point is that we need integrated products (performance infrastructure) that would work across the DevOps (as development and operations are becoming a single continuous process – which also gets more and more integrated into business).

Tools View

The problem was not even that tools were too specialized – some of them of them had quite a lot of functionality. But the problem was that tools served one specific performance viewpoint and targeted one specific group of users. Adrian Cockcroft in the Observability paper (in 1999) identified there three different viewpoints: operations, engineering, and management. Actually it may be broken down further – development and testing definitely have different viewpoints. Even functional and performance testing have different viewpoint. So tools were built around these different viewpoints. And while there were attempts to appeal a wider audience, it didn’t quite work as these tools were designed around a specific viewpoint.

For example, load testing tools often have integrated monitoring and other functionality. Their scripts can be used for synthetic monitoring. However practically nobody used these tools for production monitoring or synthetic monitoring as they were built with the performance testing viewpoint in mind.

And now we got a paradigm change – DevOps – that united several groups of users in some way (like engineering and operations with further attempts to integrate testing and business into it). So tools serving just one category doesn’t make much sense anymore (in long run – as we don’t have much real alternatives right now, people continue to use what exists, but it becomes a serious pain and it push vendors toward integrated products).

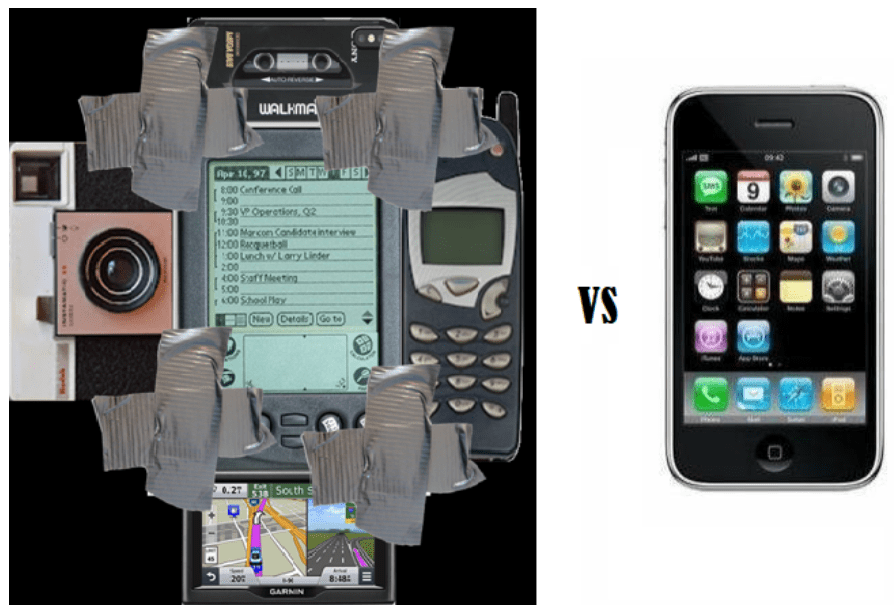

To provide a graphical analogy, we had all performance tools somewhat integrated as shown on the left part of the picture – but we need get it into something really integrated similar to the right side of the picture:

Performance Requirements View

To look at the issue from another angle, here is what I wrote in the Performance Requirements: An Attempt at a Systematic View article (2011):

At first glance, the subject of “performance requirements” looks simple enough. Almost every book about performance has a few pages about performance requirements. Quite often a performance requirements section can be found in project documentation. But the more you examine the area of performance requirements, the more questions and issues arise.

Performance requirements are supposed to be tracked from the system inception through the whole system lifecycle including design, development, testing, operations, and maintenance. However different groups of people are involved on each stage using their own vision, terminology, metrics, and tools that makes the subject confusing when going into details.

Business analysts are using business terms; architects’ community uses its own languages and tools (mostly created for documenting functionality so performance doesn’t fit them well); developers often think through the profiler view; load testing tools bring the virtual-user view; queuing models, used in capacity planning, use mathematical terminology; production people have their own tools and metrics; and executives are more interested in high-level, aggregated metrics. These views are looking into the same subject – system performance – but through different lenses and quite often these views are not synchronized and differ noticeably. While all these views should be synchronized to allow tracing performance through all lifecycle stages and easy information exchange between stakeholders.

That remained true for a while. No need even to change anything in the quote. But now it started to change as the DevOps / Agile concepts don’t tolerate it anymore. Of course, these concepts introduced their own jargon and roles – but the main idea is still breaking silos between groups and working as a single team with a single view.

Do We See the Trend in the Vendor Market?

We have seen a lot of attempts to get integrated products by bundling different products together in some ways (with some formal integration). Many large companies – such as CA or IBM – definitely have products covering almost every aspect of performance engineering as they acquired many great products. But integration of the products designed around different viewpoints proved to be not very effective – and you hardly hear about these integrated products beyond specific vendor ecosystems.

It is interesting that small companies that start from single successful offering were able to expand quickly and now adding additional services on the road to integrated performance management. DataDog started as a simple cloud monitoring service, Splunk – as a log analysis tool, Elastic as a search technology, Catchpoint – as a synthetic monitoring tool. I should admit that at the time I didn’t paid much attention to these companies thinking that their offering are rather basic. Well, I was wrong. Now they (along with other companies such as Dynatrace, AppDynamics, and New Relic) are on the way to integrated performance management (of course, using whatever fancy names their marketing was able to come with). I guess that it is a good illustration of the Minimum Viable Product (MVP) concept – better to have a simple product that actually helps some customers than a very advanced technology that is difficult to utilize.

However, it is worth noting that there are some areas which are not really included in the current integration trend yet. For example, performance testing – where we may even see some downward trends and the generic concept of how it should be integrated remains somewhat unclear (although we see some progress in continuous performance testing in cases when workloads are easier to formalize).

Of course, we don’t know where it all ends. We can’t say yet who is the winner in the race, we can’t even say what this integrated performance management product should look like. We still see companies going on buying spree (Splunk recently acquired quite a lot of interesting companies) – it is an open question if they would be more successful than their predecessors in integrating all these products. But what we can say for sure that performance engineering in general and its tools in particular are changing drastically – and we are right in the middle of that transformation.

Monitoring vs. Management

And one more very important sub-trend here is shift from performance monitoring to performance management. Monitoring in a broad sense here – observing system behavior through monitoring, log analysis, tracing, etc. While most vendors defined APM as Application Performance Management, Gartner specifically pointed that it is Application Performance Monitoring, that there is no management per se there (still do in their 2020 APM Magic Quadrant). Which mostly true until now – although started to change.

Infrastructure as Code (IaC), Everything as Code, etc. – one more important part of DevOps / Agile trends – actually opened a way to real tool-based automated performance management. It is rather in the beginning – although it definitely got significant progress in some niche areas (autoscaling up and down is probably the best example). As most modern technologies provide more integration ways – including levers to manage these technologies – we will definitely see more advances in that area.

Saying that, it appears that jobs of performance engineers are safe enough in the foreseeable future as all this automation is rather on the infrastructure / operations side of things. Optimizing architecture and code (both back-end and front end) is still not even well formalized, leave alone automated.

Getting Back to Performance Engineering

As the walls between different silos in technology are disappearing, the need of a holistic approach in performance engineering becomes more and more apparent. I already wrote about it in Performance Calendar in 2017 Shift Left, Shift Right – Is It Time for a Holistic Approach? and 2018 Context-Driven Performance Engineering. One reason why we don’t see much progress here is that we don’t actually have good tools to support it across different teams and technologies. While open source revolution caused great progress in some areas – it looks like its impact to integrated performance tools was rather mixed. Are Times still Good for Load Testing? has, for example, my thoughts about load testing tools.

What is the Name?

By the way, nobody is consistently using the term I put into the title (as far as I am aware). We have quite a few terms around for that, which is used almost randomly by different vendors and analysts. “Almost randomly” I mean using whatever definition / message they want to put into it mostly based on marketing considerations. The point is that we don’t have established terminology here and it is very difficult nowadays to differentiate products based on its description without diving very deeply in details what exactly they are actually doing.

Here are some names that are often used:

- APM (Application Performance Monitoring / Management)

- ITOA (IT Operations Analytics)

- ITOM (IT Operations Management)

- AIOps (Artificial Intelligence for IT Operations / Algorithmic IT Operations)

- DEM (Digital Experience Management / Monitoring)

- End-User Experience Management

- Observability

- Service Mesh

You may see quite a few different definitions for each term and how they relate to each other. So I don’t even try to discuss any term here – as for every explanation you can find another explaining it quite differently. AppDynamics, for example, states that “Digital experience monitoring (DEM) is the evolution of application performance monitoring (APM) and end user experience monitoring (EUEM)”, while Gatner defines APM as “a suite of monitoring software comprising digital experience monitoring (DEM), application discovery, tracing and diagnostics, and purpose-built artificial intelligence for IT operations.” and Dynatrace defines itself as “Software Intelligent Platform” including multiple components such as APM and Digital Experience.