Barry Pollard (@tunetheweb) is one of the maintainers of the Web Almanac. By day he is a Software Developer at an Irish Healthcare company and is fascinated with making the web even better than it already is. He's also the author of HTTP/2 in Action from Manning Publications.

Introduction

Testing is an important part of software development, especially for those items that may not be immediately noticeable when they regress. Unfortunately, web performance, accessibility, SEO and other best practices all fall into that category. Using an unoptimised image may work fine on your high-spec development machine and high-speed internet connection, but it will negatively impact users in the real world.

Automating tests is key for catching these sorts of issues, and doubly so for regressions that can sneak into a project while you are concentrating on other functionality. Unfortunately not everyone has the time, experience or infrastructure necessary to run continuous integration (CI) tests on every commit.

Launched in 2019, GitHub Actions can take away much of the hassle of setting up and managing CI infrastructure for those who, like many of us, manage their source code on GitHub. They provide servers to run code (for free!) and integrate easily into the GitHub ecosystem, for example to show results on pull requests. They also allow people to share pre-built actions that other projects can use simply by adding a YAML file to their repo, and there is even a Marketplace where you can list your action for others to find.

Lighthouse is an open-source auditing tool that runs a number of tests against a web page and reports on how it performs in the categories mentioned above (Performance, Accessibility, Best Practices, and SEO). Like GitHub Actions, Lighthouse is free to use: there are no licences to purchase or accounts to set up. Many developers, particularly in the web performance community, will be familiar with running a Lighthouse audit from one of the many online tools or from the DevTools in Chromium-based browsers. But Lighthouse also offers a command line interface (CLI), which can be used to automate runs.

Combining GitHub Actions and Lighthouse CLI therefore allows you to automate a huge number of tests with relative ease. In this post I’ll discuss how to automate Lighthouse audits in your GitHub repository and some of the things to consider to use it efficiently.

The value of Lighthouse

As I mentioned, Lighthouse's value has long been recognised by the web performance community, and Google's launch of Core Web Vitals, along with their planned impact on search rankings next year, has opened this tool up to a much wider community of people involved in building and running websites.

Additionally, the Accessibility, Best Practices and SEO audits are very useful and a lot more sophisticated than they are given credit for. In my opinion, they should no longer be seen as lesser audits, even with the limelight that the launch of Core Web Vitals has shone on the Performance audits. Accessibility is increasingly being recognised as the fundamental right it should be: as well as the moral and legal implications, it is just plain rude to exclude people from your site because you don't follow good coding practices. Similarly, every website needs to think about how it will be seen by search engines and other micro-browsers, which is covered by the SEO audits.

A big part of the value of Lighthouse is the sheer number of audits it performs across a wide variety of disciplines and best practices. You don't need to be a web perf nerd or an accessibility expert to figure out how to test your code or what exactly to look for: just run Lighthouse against it, see what issues it spots and what advice it gives, and then follow up on those to understand them more fully. It is well documented, with "Learn More" links should you need more detail.

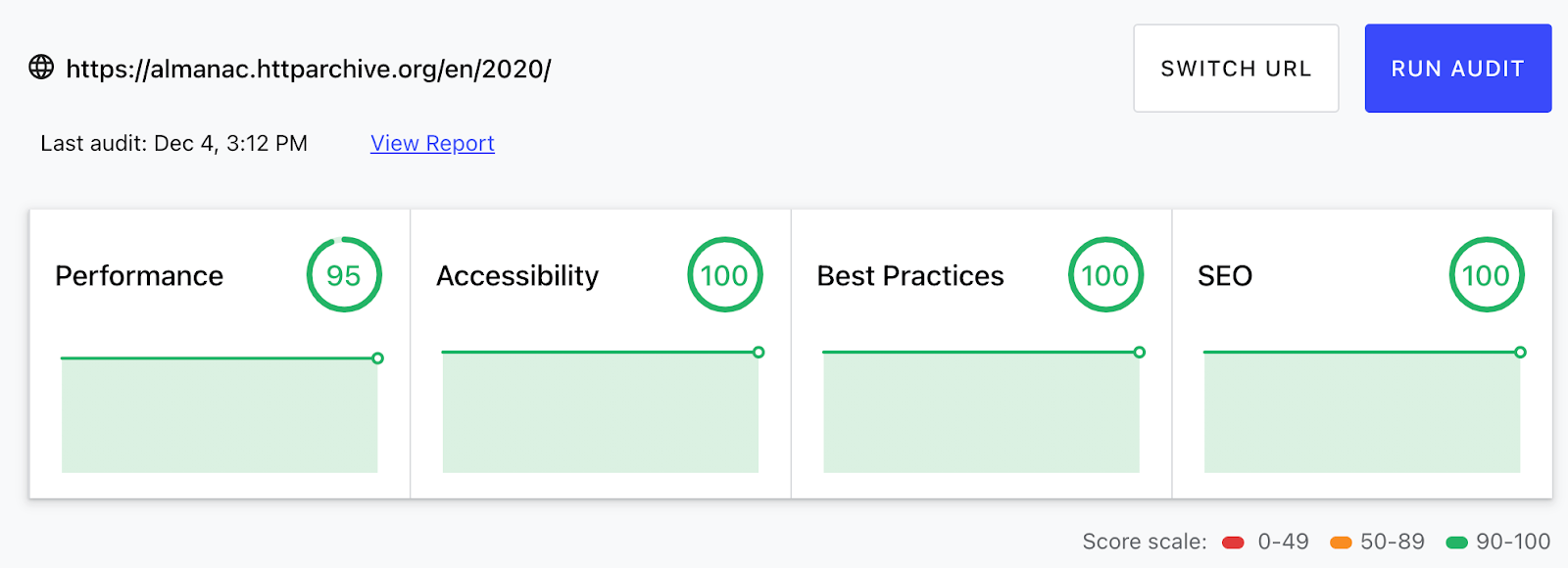

The simple scores and red, amber and green colour coding give an easy visual indication of how your site is performing in each category, which issues are major, and which are simply suggested areas for improvement.

Of course, all automated testing is limited, and I've seen advocates and experts from all of these communities point out the limitations of Lighthouse. Web performance evangelists will tell you the scores and the Core Web Vitals are too high-level and not based on Real User Monitoring (RUM). Accessibility enthusiasts will point out the limitations of not performing a manual audit that actually uses the website, and how it is possible to "game" the score. SEO specialists will point to the fact that it basically only performs on-page audits and is no substitute for dedicated tools in this space. They are all right, of course, and if you can hire dedicated experts in each of these areas and pay for commercial tools then you would (hopefully!) get a much better understanding and more detail. However, it is surprising exactly how much is available in this one tool, and its value is not to be underestimated.

I have blogged before about what Lighthouse scores look like across the web, and in that post touched upon just how detailed it gets: the intricacies it takes into account, while somehow still managing to present a simple set of scores at the end. Perhaps one of the best things about Lighthouse is that it is continually being enhanced by Google, and others, as can be seen from the level of activity in its GitHub repository. Whereas keeping your own tests up to date with changes in the web development landscape requires extra effort, by running Lighthouse you should get new changes, like the aforementioned Core Web Vitals update, for free. Admittedly, it may require some maintenance if new audits, or even bugs, start flagging issues in your code that you either have to fix or configure not to flag!

The HTTP Archive, and in particular the Web Almanac, which I'm heavily involved in, also makes a lot of use of the data it gets from running Lighthouse across over seven and a half million websites, and the 2020 edition, due to be published around the same time as this blog post, will make even more use of this data than we did last year. In fact, it was for that project that I first set up automated Lighthouse testing, which led to this post.

The basics of running Lighthouse in GitHub Actions

Lighthouse can be run in GitHub Actions in many ways: by calling the CLI directly, by calling a paid-for service, or by using one of the pre-built Lighthouse GitHub Actions available in the GitHub Marketplace. I'm going to use the Lighthouse CI Action published by Treo, as it is free and simple to use, is partially sponsored by Google, and has had contributions from members of the Google Lighthouse team. There is no official Lighthouse Action, but this seems as close as anything out there.

Using the action is as simple as adding a YAML file to the .github/workflows/ folder, calling it something like lighthouse.yml. Their documentation gives an example YAML file along these lines:

```yaml
name: Lighthouse CI
on: push
jobs:
  lighthouse:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - name: Audit URLs using Lighthouse
        uses: treosh/lighthouse-ci-action@v3
        with:
          urls: |
            https://example.com/
            https://example.com/blog
          budgetPath: ./budget.json # test performance budgets
          uploadArtifacts: true # save results as an action artifacts
          temporaryPublicStorage: true # upload lighthouse report to the temporary storage
```

This basically says: on every push to GitHub, check out your code, run Lighthouse on those two URLs using a budget file, and then upload the reports to temporary public storage so you can view the results in the same way as if you'd run Lighthouse from an online tool.
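The budgetPath input points at a Lighthouse performance budget file. As a rough sketch, assuming you want to cap resource sizes and request counts rather than timings, a setup script could generate one like this. The write_budget helper is a hypothetical name and every number is a placeholder, not a recommendation:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Lighthouse budget file to stdout.
# resourceSizes budgets are in KB; resourceCounts budgets are numbers of requests.
# All values are illustrative placeholders, not recommendations.
write_budget() {
  cat <<'EOF'
[
  {
    "path": "/*",
    "resourceSizes": [
      { "resourceType": "script", "budget": 150 },
      { "resourceType": "image", "budget": 300 },
      { "resourceType": "total", "budget": 800 }
    ],
    "resourceCounts": [
      { "resourceType": "third-party", "budget": 10 }
    ]
  }
]
EOF
}

# Example: write the file referenced by budgetPath in the workflow
# write_budget > budget.json
```

Sticking to sizes and counts keeps the budget deterministic for a given build, which matters given the run-to-run variability of timing metrics.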

That’s it – add this and you have automated Lighthouse runs!

Well, not quite. That probably isn't very useful, as it runs the tests on your live URLs, when what you probably want to test is the code being committed rather than your current website. How to do this really depends on your stack and how you generate your website.
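Whatever the stack, a common pattern is to build and start the site locally in an earlier step and then point Lighthouse at it. One wrinkle is that the server may take a few seconds to come up. The sketch below assumes a bash step and a server on port 8080; wait_until is a hypothetical helper, and the commented-out commands are placeholders for your own build and serve commands:

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command until it succeeds, up to a limit.
# Usage: wait_until MAX_ATTEMPTS COMMAND [ARGS...]
# WAIT_INTERVAL (seconds between attempts) defaults to 1.
wait_until() {
  local max_attempts=$1
  shift
  local attempt
  for ((attempt = 1; attempt <= max_attempts; attempt++)); do
    if "$@"; then
      return 0
    fi
    # Only pause if another attempt is still to come
    if ((attempt < max_attempts)); then
      sleep "${WAIT_INTERVAL:-1}"
    fi
  done
  echo "Gave up after ${max_attempts} attempts: $*" >&2
  return 1
}

# Example (placeholders for your own build and serve commands):
# npm run build
# python3 -m http.server 8080 --directory dist &
# wait_until 30 curl --silent --fail --output /dev/null http://127.0.0.1:8080/
```

Failing loudly after a fixed number of attempts means a broken build shows up as a clear error, rather than as a confusing set of Lighthouse failures against a server that never started.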

For the Web Almanac we write our content in markdown, then use a Node script to generate Jinja2 templates from it, and then serve the pages via Flask, a Python-based web framework. We therefore have a more complicated Test Website YAML file which runs on pull requests. It checks out the code, builds the website, runs the other non-Lighthouse tests we've written, and then runs Lighthouse on the pages that have been changed in that pull request. If all is good, we get the lovely green checks at the bottom of the pull request.

If an audit fails, we don't get a green tick, but instead a scary red cross, and we can click on the "Details" link for more information, including the full Lighthouse report. This means that for every single pull request on the project we can be sure Lighthouse is clean, giving us enormous confidence to merge the code without introducing regressions or bad practices.

As an aside, you can also see in this screenshot our actions for the GitHub Super-Linter and Calibre's Image Actions, which I also highly recommend, but time and space mean those are for another post.

Our checks cover not just code changes but also any new content, which, as I mentioned above, is managed as markdown files in our repository in exactly the same way as code. As we have well over a hundred contributors each year, with varying degrees of technical ability, not all of whom can be familiar with the best way of building performant, accessible, SEO-friendly websites, this is an important quality check.

So far Lighthouse has flagged for us:

- Accessibility regressions in colour contrast

- Un-optimised images

- Incorrect links

- Poor link text

- Bugs in our code that have prevented certain pages running

These were surfaced directly to the person who raised the pull request, so they could deal with them before the core contributors even had to look at it.

Since introducing this we have maintained our perfect Lighthouse scores of 100 on Accessibility, SEO, and Best Practices and kept scores of 90-100 on the much tougher Performance category (more on this later).

Customising the Audits to run

By default, the Lighthouse Action will run Lighthouse and fail on any unsuccessful audits. Unless you are running a perfect and very simple website, this is likely to end up failing on non-issues, leading to false positives, alert fatigue and, ultimately, people ignoring this check.

Therefore it is important to configure the checks you want to run, and any budgets you want to keep within. This may be because you don’t use some of the advice Lighthouse offers or because your development environment is not the same as production.

The action supports either a JSON-based Lighthouse CI config file (via configPath) or a simpler JSON-based budget file (via budgetPath); I couldn't get it to support both, so it seems to be one or the other. For our development website we use the following config:

```json
{
  "ci": {
    "assert": {
      "preset": "lighthouse:no-pwa",
      "assertions": {
        "bootup-time": "off",
        "canonical": "off",
        "dom-size": "off",
        "first-contentful-paint": "off",
        "first-cpu-idle": "off",
        "first-meaningful-paint": "off",
        "font-display": "off",
        "interactive": "off",
        "is-crawlable": "off",
        "is-on-https": "off",
        "largest-contentful-paint": "off",
        "mainthread-work-breakdown": "off",
        "max-potential-fid": "off",
        "speed-index": "off",
        "render-blocking-resources": "off",
        "unminified-css": "off",
        "unminified-javascript": "off",
        "unused-css-rules": "off",
        "unused-javascript": "off",
        "uses-long-cache-ttl": "off",
        "uses-rel-preconnect": "off",
        "uses-rel-preload": "off",
        "uses-text-compression": "off",
        "uses-responsive-images": "off",
        "uses-webp-images": "off"
      }
    }
  }
}
```

I’ll explain some of these choices, but won’t bore you with them all.

We run the main recommended tests, but turn off the PWA checks as we’re not a PWA site. The recommended setting “asserts that every audit outside performance received a perfect score, that no resources were flagged for performance opportunities, and warns when metric values drop below a score of 90. This is a more realistic base that disables hard failures for flaky audits.”

Our test website runs locally on http://127.0.0.1:8080 and so we turn off a few audits:

- canonical and is-crawlable, because the canonical link points to our real site and we deliberately disable indexing on test sites.
- is-on-https and uses-text-compression, because neither HTTPS nor text compression is available on the development server.

We also turn off some other audits, for various reasons:

- dom-size: we have large, complex documents which often exceed the recommended DOM size, particularly when we embed our interactive charts.
- font-display: we do use this, but found we got alerts for our Google Sheets embeds.
- render-blocking-resources, unminified-css and unminified-javascript: we don't inline our CSS, as that approach is not for me, and because our CSS and JS are small and served with text compression, I don't see massive value in minifying them.
- uses-long-cache-ttl: personally I'm not convinced of the value of this, but that's a post for another day!
- uses-rel-preconnect and uses-rel-preload: I should find time to revisit these.
- uses-responsive-images and uses-webp-images: we use responsive images and WebP for our banner images, but not for all chapter images, due to the maintenance overhead and the fact we don't use an image CDN.

The point is not to understand the audits we have turned off for our environment, but to realise that you can (and should!) turn off audits that don't suit your project, or that cover optimisations you have not implemented yet. I would much rather have 10 audits that only flag when there's something to fix than 1,000 audits that frequently fail and cause more hassle to investigate and ignore, because they aren't something you are going to fix at the moment. I strongly advise being liberal in turning off audits to get Lighthouse working consistently and reliably, and then building upon that.

Let’s talk about automated testing of Performance metrics

On that last point, let's talk about performance audits and using them in automated checks like this. My advice is basically "don't", and that includes enforcing limits on the new Core Web Vitals. This may sound like sacrilege, and seem to defeat much of the point of automated Lighthouse testing given all that I said at the beginning of this post, but there are some very good reasons for this, mostly related to the noise versus the benefits.

One of the big problems with performance audits is the variability between runs. GitHub makes no promises about the speed and performance of the servers that run actions, and differences in machines, load and network glitches all mean that performance metrics can vary considerably. This leads to the aforementioned noise, reducing the usefulness of an automated check.

So while it would seem ideal to ensure that no changes impact your Largest Contentful Paint (LCP) time or increase your Cumulative Layout Shift (CLS), the reality is that these will vary. You can set budgets, as alluded to previously, but even then their usefulness is questionable if you have to set the limits so loosely that they won't catch new regressions in this space. It is also possible to configure multiple runs per URL and aggregate the results (for example, by taking an average), but at the expense of longer run times, and with no guarantee that variability won't still affect you.
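To see why aggregating several runs helps, but only partly, here is a small worked sketch with three hypothetical LCP timings (in milliseconds) for the same page, where one run landed on a slow runner. The median and mean helpers are illustrative only, not part of Lighthouse CI:

```shell
#!/usr/bin/env bash
# Illustrative helpers for aggregating one metric across several runs.
# Arguments are numeric values, e.g. LCP timings in milliseconds.
median() {
  printf '%s\n' "$@" | sort -n | awk '
    { v[NR] = $1 }
    END {
      if (NR % 2) {
        print v[(NR + 1) / 2]
      } else {
        m = (v[NR / 2] + v[NR / 2 + 1]) / 2
        print m
      }
    }'
}

mean() {
  # Rounded to the nearest whole number
  printf '%s\n' "$@" | awk '{ sum += $1 } END { printf "%.0f\n", sum / NR }'
}

# Three hypothetical runs of the same page, one on a slow runner:
# median 2500 2300 4100   # prints 2500
# mean 2500 2300 4100     # prints 2967
```

The single slow run drags the mean up by almost 500 ms while the median stays at 2500 ms, but every extra run adds to the job's duration, and with few runs even the median can still be thrown off.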

I would much rather check for audits that influence these metrics but don’t change between runs – for example un-optimised images – than for the end timing metrics that will be variable.

There is also the question of how useful lab experiments are in these areas (yes, I'm about to temporarily turn into one of those people I warned you about previously!). If your website runs great on whatever server config you've set up to test on then that's all well and good, but how reflective is that of your users? Are a large proportion of your users on unreliable mobile networks or less powerful hardware than your test machines? To me, timing-based metrics really are best measured with RUM tools, and even if you don't have the time and money to invest in an expensive RUM-based product, you can use alternative methods, like building a dashboard in Data Studio using the Chrome UX Report data, or the basic performance metrics in Google Analytics, to get some visibility into this. It's even possible to capture the Core Web Vitals metrics yourself and send them to Google Analytics (something else we implemented in the Web Almanac).

Additionally, the Performance scores are hard to get perfect! Unlike the other categories, whose audits mostly have a binary pass or fail, the Performance score is based on log-normal curves derived from real-world website data, meaning it is subject to fluctuations depending on how other sites are performing, so it is not completely in your control. If every website in the world suddenly did better at performance and the curves were recalibrated, your score may well go down despite you not regressing in performance at all. This may seem strange, but if every website in the world performs better then the bar has gone up, so in many ways it is correct to measure like that. But it does make automated testing of scores difficult, because that's another source of variability.
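For reference, my understanding of how recent Lighthouse versions score a single timing metric is roughly as follows: a log-normal curve anchored on two control points, largely derived from HTTP Archive data, where the median value $m$ scores 0.5 and the 10th-percentile value $p_{10}$ scores 0.9. With $\Phi$ the standard normal cumulative distribution function:

$$
\operatorname{score}(x) = 1 - \Phi\!\left(\frac{\ln x - \ln m}{\sigma}\right),
\qquad
\sigma = \frac{\ln m - \ln p_{10}}{\Phi^{-1}(0.9)} \approx \frac{\ln m - \ln p_{10}}{1.2816}
$$

By construction $\operatorname{score}(m) = 1 - \Phi(0) = 0.5$ and $\operatorname{score}(p_{10}) = 1 - \Phi\left(-\Phi^{-1}(0.9)\right) = 0.9$. The Performance category score is then a weighted average of these metric scores, so when $m$ and $p_{10}$ are recalibrated in a new release, the same timing can produce a different score.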

So, despite Lighthouse being a great window into timing-based performance metrics, it is not something we use in our automated runs, and I would advise thinking carefully (or even testing it out!) before you undertake this. Although those metrics are often the headline features, Lighthouse has plenty to offer even without them.

Choosing which URLs to run Lighthouse on

Our next challenge is figuring out which pages to run our tests on. Do we test only sample pages? Or all pages every time, which will probably take too long? As mentioned, we have many contributors and want to audit the content being submitted, so we needed to run Lighthouse on the URLs affected by each pull request. This took a bit of scripting, including some funky grep and sed commands, so let's delve into how we addressed this challenge.

First of all we set some base URLs we will check for every commit:

```bash
LIGHTHOUSE_URLS=""

# Set some URLs that should always be checked on pull requests
# to ensure basic coverage
BASE_URLS=$(cat <<-END
http://127.0.0.1:8080/en/2019/
http://127.0.0.1:8080/en/2019/javascript
http://127.0.0.1:8080/en/2020/
END
)
```

This covers non-content changes (e.g. to our server), as well as CSS or JS changes not specific to individual pages.

Then we do a git diff against main, look for the content or template files that have changed, strip out some files we don't need to check, and then convert the remaining files into the http://127.0.0.1:8080 URLs mentioned previously, one per line:

```bash
# If this is part of a pull request then get the list of files changed.
# Uses similar logic to GitHub Super-Linter (https://github.com/github/super-linter/blob/master/lib/buildFileList.sh)

# First checkout main to get the list of differences
git pull --quiet
git checkout main

# Then get the changes
CHANGED_FILES=$(git diff --name-only "main...${COMMIT_SHA}" --diff-filter=d content templates | grep -v base.html | grep -v ejs | grep -v base_ | grep -v sitemap | grep -v error.html)

# Then back to the pull request changes
git checkout --progress --force "${COMMIT_SHA}"

# Transform the files to http://127.0.0.1:8080 URLs
LIGHTHOUSE_URLS=$(echo "${CHANGED_FILES}" | sed 's/src\/content/http:\/\/127.0.0.1:8080/g' | sed 's/\.md//g' | sed 's/\/base\//\/en\/2019\//g' | sed 's/src\/templates/http:\/\/127.0.0.1:8080/g' | sed 's/index\.html//g' | sed 's/\.html//g' | sed 's/_/-/g' | sed 's/\/2019\/accessibility-statement/\/accessibility-statement/g' )

# Add the base URLs, then de-duplicate and remove empty lines
LIGHTHOUSE_URLS=$(echo -e "${LIGHTHOUSE_URLS}\n${BASE_URLS}" | sort -u | sed '/^$/d')
```
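Because those sed rules are easy to get subtly wrong, one option is to wrap the same substitutions, in the same order, in a small function that can be checked in isolation. This is a sketch rather than the script we actually run; file_to_url is a hypothetical name, and the paths used to exercise it are illustrative:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the path-to-URL rules above.
# Multiple -e expressions run in order on each line, just like the piped seds.
file_to_url() {
  printf '%s\n' "$1" | sed \
    -e 's/src\/content/http:\/\/127.0.0.1:8080/g' \
    -e 's/\.md//g' \
    -e 's/\/base\//\/en\/2019\//g' \
    -e 's/src\/templates/http:\/\/127.0.0.1:8080/g' \
    -e 's/index\.html//g' \
    -e 's/\.html//g' \
    -e 's/_/-/g' \
    -e 's/\/2019\/accessibility-statement/\/accessibility-statement/g'
}

# Example: convert every changed file (one per line) to a URL
# while IFS= read -r f; do file_to_url "$f"; done <<< "${CHANGED_FILES}"
```

For instance, src/content/en/2020/javascript.md maps to http://127.0.0.1:8080/en/2020/javascript, and src/templates/en/2020/index.html maps to http://127.0.0.1:8080/en/2020/.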

Again, don't worry too much about the actual code, as it will be very specific to your site; the principle is what matters here.

Now that we have the list of URLs, we print them out to screen and use jq to inject them into our Lighthouse config (many thanks to Sawood Alam for this piece, which was much cleaner than my original sed-based hack!):

```bash
echo "URLS to check:"
echo "${LIGHTHOUSE_URLS}"

# Use jq to insert the URLs into the config file:
LIGHTHOUSE_CONFIG_WITH_URLS=$(echo "${LIGHTHOUSE_URLS}" | jq -Rs '. | split("\n") | map(select(length > 0))' | jq -s '.[0] * {ci: {collect: {url: .[1]}}}' "${LIGHTHOUSE_CONFIG_FILE}" -)
echo "${LIGHTHOUSE_CONFIG_WITH_URLS}" > "${LIGHTHOUSE_CONFIG_FILE}"
```

URLs can be provided either in the JSON config or as an input to the GitHub Action, as shown previously. We chose the JSON route, as it is slightly simpler than passing dynamic inputs to the GitHub Action.

I'm sure there are other ways to do this for those less familiar with shell scripting, but I have a Linux background, so this was the easiest for me. Again, the principle is what counts.

This then allows us to have the following in our YAML file:

```yaml
######################################
## Custom Web Almanac GitHub action ##
######################################
#
# This generates the chapters and tests the website when a pull request is
# opened (or added to) on the original repo
#
name: Test Website

on:
  workflow_dispatch:
  pull_request:

jobs:
  build:
    name: Build and Test Website
    runs-on: ubuntu-latest
    if: github.repository == 'HTTPArchive/almanac.httparchive.org'
    steps:
      - name: Checkout branch
        uses: actions/checkout@v2.3.3
        with:
          # Full git history is needed to get a proper list of changed files within super-linter
          fetch-depth: 0
      - name: Setup Node.js for use with actions
        uses: actions/setup-node@v1.4.4
        with:
          node-version: 12.x
      - name: Set up Python 3.8
        uses: actions/setup-python@v2.1.4
        with:
          python-version: '3.8'
      - name: Run the website
        run: ./src/tools/scripts/run_and_test_website.sh
      - name: Set the list of URLs for Lighthouse to check
        env:
          RUN_TYPE: ${{ github.event_name }}
          COMMIT_SHA: ${{ github.sha }}
        run: ./src/tools/scripts/set_lighthouse_urls.sh
      - name: Audit URLs using Lighthouse
        uses: treosh/lighthouse-ci-action@v3
        id: LHCIAction
        with:
          # For dev, turn off all timing perf audits (too unreliable) and a few others that don't work on dev
          configPath: .github/lighthouse/lighthouse-config-dev.json
          uploadArtifacts: true # save results as an action artifacts
          temporaryPublicStorage: true # upload lighthouse report to the temporary storage
      - name: Show Lighthouse outputs
        run: |
          # All results by URL:
          echo '${{ steps.LHCIAction.outputs.manifest }}' | jq -r '.[] | (.summary|tostring) + " - " + .url'
```

This leads to output like this on a failing pull request:

```text
3 results for http://127.0.0.1:8080/en/2020/pwa
Report: https://storage.googleapis.com/lighthouse-infrastructure.appspot.com/reports/1606936320277-1740.report.html

❌ errors-in-console failure for minScore assertion
   (Browser errors were logged to the console: https://web.dev/errors-in-console/)
   Expected >= 0.9, but found 0

❌ link-text failure for minScore assertion
   (Links do not have descriptive text: https://web.dev/link-text/)
   Expected >= 0.9, but found 0

❌ unsized-images failure for minScore assertion
   (Image elements do not have explicit width and height: https://web.dev/optimize-cls/#images-without-dimensions)
   Expected >= 0.9, but found 0
```

Testing production URLs

With that in place, we added one more, separate action, which calls a script that gets a list of ALL our production URLs from our production sitemap:

```bash
# Get the production URLs from the production sitemap
LIGHTHOUSE_URLS=$(curl -s https://almanac.httparchive.org/sitemap.xml | grep "<loc" | grep -v "/static/" | sed 's/ *<loc>//g' | sed 's/<\/loc>//g')
```
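The pipeline above relies on each <loc> element sitting on its own line. If a sitemap might be minified onto a single line, a variant using grep -o (which prints each match on its own line) is more forgiving. This is a sketch assuming each URL sits directly between <loc> and </loc> with no surrounding whitespace; sitemap_urls is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a sitemap on stdin and print one URL per line,
# skipping anything under /static/ (such as PDFs).
# grep -o prints each <loc>...</loc> match on its own line, so this also
# copes with a sitemap minified onto a single line.
sitemap_urls() {
  grep -o '<loc>[^<]*</loc>' \
    | sed -e 's/<loc>//' -e 's/<\/loc>//' \
    | grep -v '/static/'
}

# Example:
# LIGHTHOUSE_URLS=$(curl -s https://almanac.httparchive.org/sitemap.xml | sitemap_urls)
```

Reading from stdin also makes it easy to test against a saved copy of the sitemap without hitting the network.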

We strip out some static content which doesn’t need testing (for example we have our eBook PDF in our sitemap) and then run the production URLs weekly in a similar (but simpler!) YAML config:

```yaml
######################################
## Custom Web Almanac GitHub action ##
######################################
#
# Run checks against the production website
#
name: Production Checks

on:
  schedule:
    - cron: '45 13 * * 0'
  workflow_dispatch:

jobs:
  checks:
    runs-on: ubuntu-latest
    if: github.repository == 'HTTPArchive/almanac.httparchive.org'
    steps:
      - name: Checkout branch
        uses: actions/checkout@v2.3.3
      - name: Set the list of URLs for Lighthouse to check
        run: ./src/tools/scripts/set_lighthouse_urls.sh -p
      - name: Audit URLs using Lighthouse
        uses: treosh/lighthouse-ci-action@v3
        id: LHCIAction
        with:
          # For prod, we simply check for 100% in Accessibility, Best Practices and SEO
          # We don't check Performance as too much variability and no guarantees on perf in GitHub Actions.
          configPath: .github/lighthouse/lighthouse-config-prod.json
          uploadArtifacts: true # save results as an action artifacts
          temporaryPublicStorage: true # upload lighthouse report to the temporary storage
      - name: Show Lighthouse outputs
        run: |
          # All results by URL:
          echo '${{ steps.LHCIAction.outputs.manifest }}' | jq -r '.[] | (.summary|tostring) + " - " + .url'
```

We use a slightly different config from development (since we don't have canonical or HTTPS issues on production), but the principle is the same.
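Both workflows call the same set_lighthouse_urls.sh script, with -p selecting production mode. The script's argument handling isn't shown here, so as a minimal, hypothetical sketch, a single script might switch between the two sources of URLs using getopts like this; url_mode and the mode names are illustrative:

```shell
#!/usr/bin/env bash
# Hypothetical sketch: decide where the URL list comes from based on a -p flag.
# Prints "production" when -p is given, otherwise "pull_request".
url_mode() {
  local OPTIND=1 opt mode="pull_request"
  while getopts "p" opt; do
    case "${opt}" in
      p) mode="production" ;;
      *) echo "Usage: set_lighthouse_urls.sh [-p]" >&2; return 1 ;;
    esac
  done
  echo "${mode}"
}

# Example of how a script body might branch on it:
# if [ "$(url_mode "$@")" = "production" ]; then
#   : # read URLs from the production sitemap
# else
#   : # diff against main and map changed files to local URLs
# fi
```

Keeping both modes in one script means the config injection step is shared, so only the source of URLs differs between the two workflows.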

This runs every Sunday and takes about 18 minutes to complete on the current site. Testing all production URLs may not be practical for some sites, but for smaller sites like ours it gives great assurance that no regressions, bad code or bad content have crept into the code base.

It also allowed us to clean up existing issues across the site before enabling the set of audits we wanted to run. This ensured that an unlucky editor of an old page wasn't landed with a Lighthouse issue just because they happened to fix a typo or make some other unrelated change. Again, depending on your code base, there may be lots of legacy issues, so being liberal with turning off audits until you can fix them and turn them back on can help enormously.

Conclusion

So that's how to add automated Lighthouse checks to your project if it is hosted on GitHub. The steps can be adapted to other CI environments, and hopefully this gives you some ideas of how to implement this and what measures we took to give useful, but not noisy, checks.

We've been running this for over a month now, and the repo has been very active lately as we prepare to launch the 2020 edition (I heavily recommend reading this amazing resource, by the way; two chapters are already available in early preview).

The Web Almanac is an open-source, community-driven project, so if you are interested in working on development items like this, or other interesting development we have done in that project, then please do come and join us on GitHub. Either way, I hope you found this post useful for your own projects too.

Thanks to Andy Davies and Rick Viscomi for reviewing this post.