If you deal with Web Performance, you’ve probably heard about HTTP resource prioritization. This is especially true since last year, as Chromium added so-called “Priority Hints” with the new fetchpriority attribute, which allow you to tweak said prioritizations. You may have also heard that the prioritization system changed between HTTP/2 and HTTP/3.

However, what exactly does prioritization mean? How does it work under the hood? Why is it important to have some control over it? And, crucially, do all browsers agree on which resources are most important (hint: no, they don’t)? This, and much more, down below!

- What is prioritization?

- Prioritization signals

- Browser differences

- Raw protocol details

- Key learnings (this is what you’re probably most interested in)

- Server differences

- Conclusion

Note: HTTP prioritization is a very expansive topic; I’ve heard you can even do a PhD on it :O. In this post, I intentionally leave out a lot of nuances to focus on the key points.

What is prioritization?

HTTP resource prioritization is mainly a concept for HTTP/2 (H2) and HTTP/3 (H3). In HTTP/1.1 (H1), browsers typically open multiple TCP connections (up to 6 per domain) and each connection loads only 1 resource/file at a time. Prioritization is implicit by which resources are requested first on the available connections.

However, in H2 and also H3, we instead aim to improve efficiency by using only a single TCP/QUIC connection. If that single connection could only have a single resource “active” at a time, as in H1, that would be bad for performance. So instead, H2 and H3 can send multiple requests at the same time. Crucially though, this does not mean these requests can also be fully responded to at the same time! This is because, at any given time, a connection is limited in how much data it can send by things like congestion and flow control.

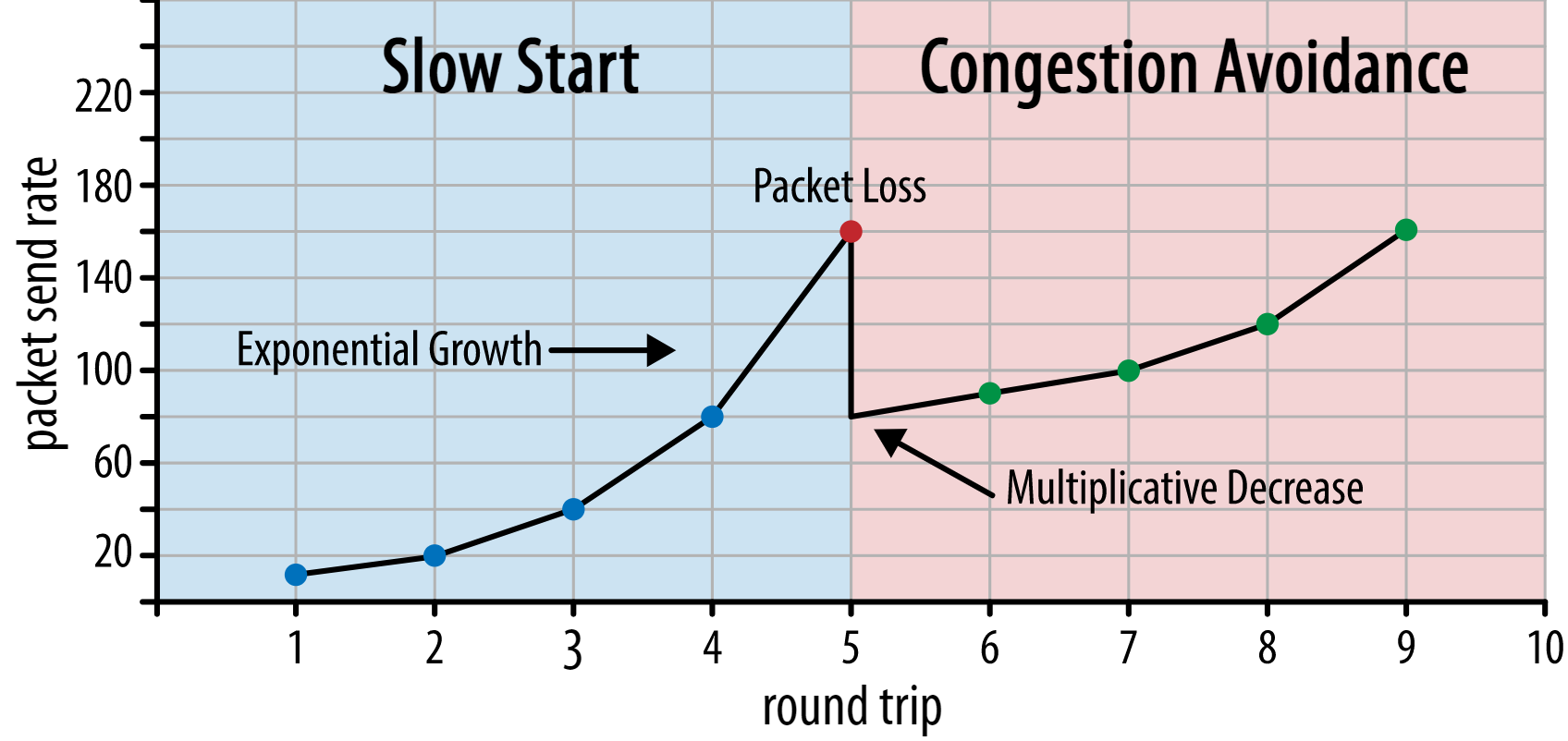

Figure 1: typical congestion control algorithm with aggressive slow start and more conservative growth afterwards. Source

Especially at the start of the connection, we can only send a limited amount of data each network round trip, as the server needs to wait for the browser to acknowledge it successfully received each burst of data. This means the server needs to choose which of the multiple requests to respond to first.

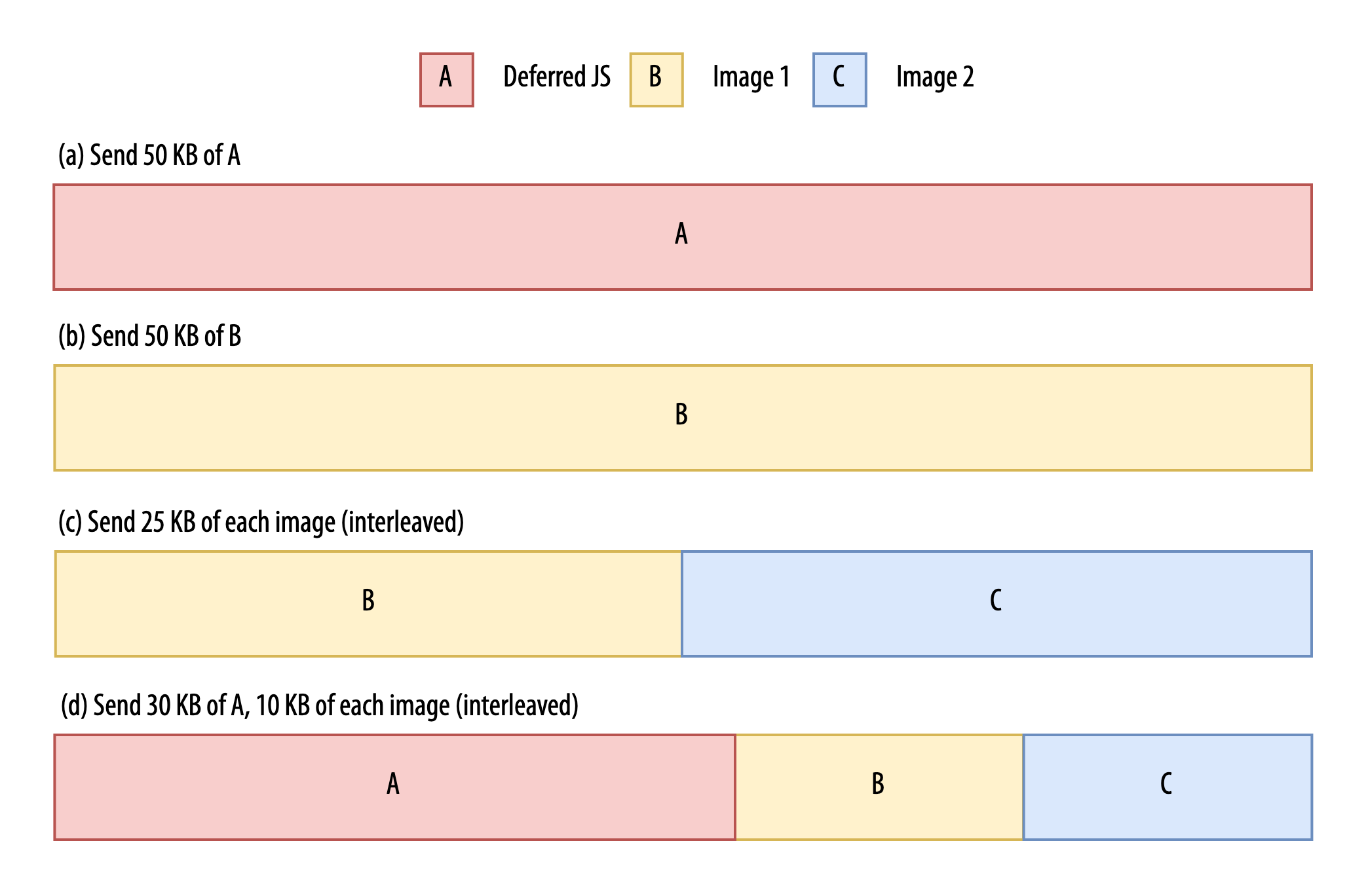

Let’s take an example where the browser has loaded the .html page and now requests 3 resources at the same time on one H2/H3 connection: a deferred JS file (100KB), and two .jpg images (300 and 400KB). Let’s say the server is limited to sending just 50KB (about 35 packets) in this round trip (the exact numbers depend on various factors). It now has to choose how to fill those 35 packets. What should it send first though?

You could make the argument that JS is always important (high priority), even though it’s deferred. You could also claim instead that there’s a high chance that one of the images is a likely Largest Contentful Paint candidate and that one of them should be given preference (but which one?). You could even go as far as to say that jpg images can be rendered progressively, and that it makes sense to give each image 25KB (we call this the “interleaving” or multiplexing of resource data). But what if the deferred JS is the thing that will actually load the LCP image instead… maybe give it some bandwidth too?

Figure 2: Bandwidth can be distributed across the three resources in very different ways.

It’s clear that the server’s choice here can have a big impact on various Webperf metrics, as some resource data will always be delayed behind “more important” data of a “higher priority”. If you send the JS first, the images are delayed by 1 or multiple network round trips, and vice versa. This is especially impactful for things like (render-blocking) JS and CSS, which have to be downloaded in full before they can be applied/executed.

Figure 3: If resources are loaded interleaved/multiplexed (top), this delays how long it takes for them to be fully loaded. Source
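To make the trade-off a bit more tangible, here is a minimal sketch that replays the example above (100KB of JS, 300KB and 400KB images). It assumes a fixed budget of 50KB per round trip, which is a simplification, since a real congestion window grows over time. The function name `completion_round_trips` and the resource labels are purely illustrative.

```python
from fractions import Fraction

# Sizes from the example above, in KB, listed in request order.
RESOURCES_KB = {"js": 100, "img1": 300, "img2": 400}


def completion_round_trips(sizes_kb, budget_kb, interleave):
    """Return, per resource, the round trip in which its last byte is sent.

    Assumption: the server can send a constant budget_kb every round trip.
    With interleave=False resources are sent one after the other in request
    order; with interleave=True the budget is split evenly over all
    unfinished resources.
    """
    if budget_kb <= 0:
        raise ValueError("budget_kb must be positive")
    # Fractions keep the even split exact (50 / 3 is not a nice float).
    remaining = {name: Fraction(size) for name, size in sizes_kb.items()}
    finished = {}
    round_trip = 0
    while remaining:
        round_trip += 1
        budget = Fraction(budget_kb)
        # Keep handing out budget until this round trip is full.
        while budget > 0 and remaining:
            active = list(remaining) if interleave else [next(iter(remaining))]
            share = budget / len(active)
            for name in active:
                sent = min(share, remaining[name])
                remaining[name] -= sent
                budget -= sent
                if remaining[name] == 0:
                    del remaining[name]
                    finished[name] = round_trip
    return finished


print(completion_round_trips(RESOURCES_KB, 50, interleave=False))
# {'js': 2, 'img1': 8, 'img2': 16}
print(completion_round_trips(RESOURCES_KB, 50, interleave=True))
# {'js': 6, 'img1': 14, 'img2': 16}
```

Under these assumptions, the last resource finishes at the same time either way, but interleaving pushes the JS from round trip 2 to 6 and the first image from 8 to 14. That is fine for a progressive jpg, but painful for a file that must be complete before it can be used.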

Note: this is why I hate it when people say that H2 and H3 allow you to send multiple resources in parallel, as it’s not really parallel at all! H1 is much more parallel than H2/3, as there you do have 6 independent connections. At best, the H2/3 data is interleaved or multiplexed on the wire (for example distributing data across the two images above), but usually the responses are still sent sequentially (first image 1 in its entirety, then image 2). As such, as a pedant, I’d rather speak of concurrent resources (or, if you really want, parallel requests and multiplexed responses).

The problem here is that the server doesn’t really have enough information to make the best choice. In fact, it wouldn’t even know the JS file was marked as defer in the HTML, as that piece of context is not included in the HTTP request by the browser (and servers don’t typically parse the HTML themselves to discover these modifiers). Similarly, the server doesn’t know if the images are immediately visible (e.g., in the viewport) or not yet visible (user has to scroll down, 2nd image in a carousel, etc.). It also doesn’t know about the fancy new fetchpriority attribute! If you want some more in-depth examples, see my FOSDEM 2019 talk or my Fronteers 2022 talk.

It follows that it’s really the browser which has enough context to decide which resource should be loaded first. As such, it needs a way to communicate its preferences to the server. This is exactly what HTTP prioritization is all about: a standardized way for browsers to signal servers in what order requested resource data should be sent.

Resource loading delays

At this point, it’s important to note that prioritization isn’t the only thing influencing actual resource delivery order. After all, prioritization only determines how multiple requests that are active at the same time are processed. You might expect that, for HTTP/2 and HTTP/3, browsers will indeed request resources as soon as they are discovered in the HTML, relying on just prioritization to get correct behaviour. However, you would be wrong.

In practice, all browsers have some (advanced or basic) logic to actively delay certain requests even after the resource has been discovered. A simple example is a prefetch-ed resource, which is typically indicated in a <link> element in the <head>, but is only requested by the browser when the current page is done loading.

Another example is Chromium’s “tight mode”, which will actively delay less important resources (e.g., images, CSS and JS in the HTML) until the more important resources have (mostly) been downloaded. There is for example also a limit on the amount of preloads that are active at the same time.

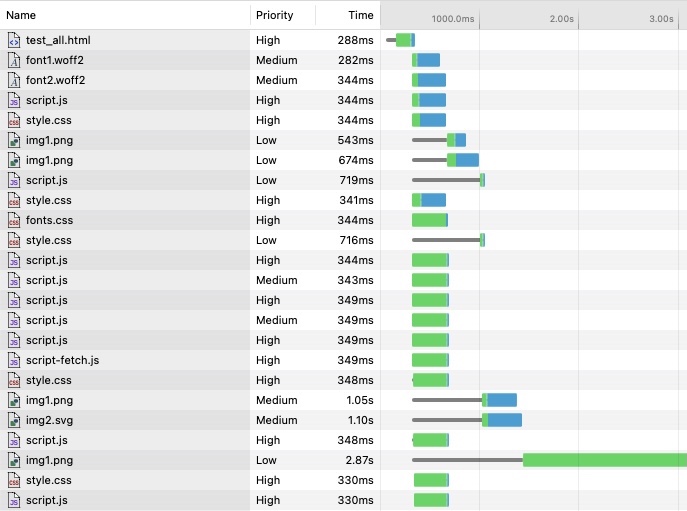

This general concept can also be seen in the following waterfall diagram, which clearly shows some resources only being requested after some time, even though they were discovered much earlier.

Figure 4: In Safari, these resources are discovered, but not requested at the same time.

In practice, we get a quite complicated interplay between which requests the browser sends out at the same time and how they are prioritized in relation to each other. However, exploring this interplay completely would take us too far in this post. My test approach described below does allow for it however, so that’s something I plan to do in the future. You can also help me ping Barry Pollard, who’s been promising me a blog post on the finer points of these things for like, forever!

Prioritization signals

If you look at the priority column in the Network tab of the browser devtools (see Figure 4 above), you can see textual values of High to Low (or similar) and you might think that’s what is sent to the server as well. However, that’s (sadly) not the case.

Especially in HTTP/2, there is a much more advanced system at work, called the “Prioritization Tree”. Here, the resources are arranged in a tree data structure. The position of the resource in the tree (what its parents and siblings are) and an associated “weight” influence when it is given bandwidth and how much. When they request a resource, browsers use a special additional HTTP/2 message (the PRIORITY frame) to tell the server where in the tree the resource belongs.

Figure 5: Firefox uses a complex prioritization tree for HTTP/2.

This system is very flexible and powerful, but as it turns out, it’s also complex; too complex. So complex, that even today many HTTP/2 implementations have serious bugs in this system, and some stacks simply don’t implement it at all (ignoring the browser’s signals). Browsers also use the system in very different ways.

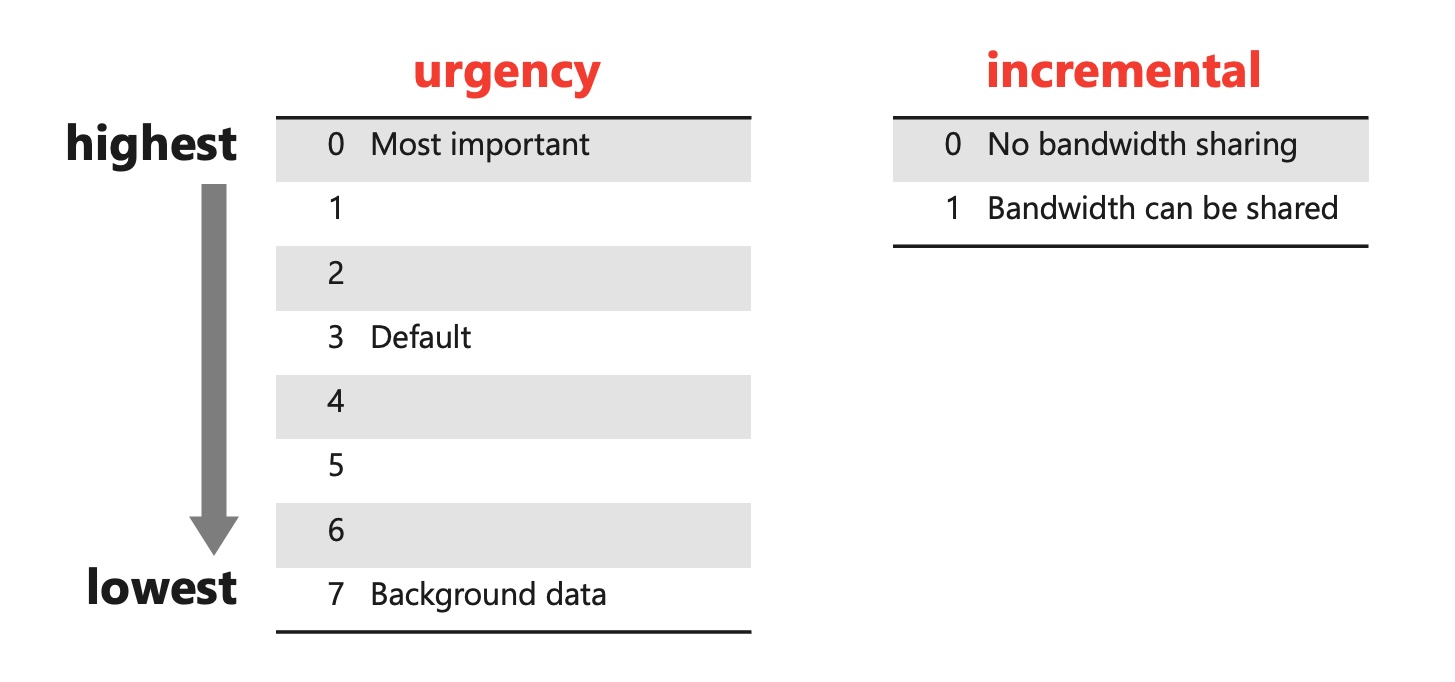

This is why, when work started on HTTP/3, we decided a simpler system was needed. This is what eventually became RFC 9218: Extensible Prioritization Scheme for HTTP. Instead of a full tree, this setup has just 8 numerical priority levels (called “urgency”, with values 0-7) and one “incremental” boolean flag to indicate if a resource can be interleaved with other resources or not (progressive jpgs: yes please. Render-blocking JS: probably best not). By default, a resource has an urgency of 3 and is non-incremental.

Figure 6: The new system uses two parameters: urgency and incremental.

The concept is simple: servers should first send all resources in the highest non-empty priority group (lowest urgency: u=0 should be processed before u=1 etc.) before moving on to the next group. As such, as long as there is a u=0 resource active (and we have data to send for it), there should be no data sent for other urgency groups. Within a single group, resources should be sent in request order (earlier before later), so JS higher in the <head> is delivered before JS lower in the <head> (as expected).
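As a rough illustration of that ordering rule, the sketch below sorts a set of pending requests the way a server could for non-incremental resources only. The `Request` class and `send_order` function are hypothetical names for this example, not part of any real server.

```python
from dataclasses import dataclass


@dataclass
class Request:
    name: str
    urgency: int = 3           # default urgency in the new scheme
    incremental: bool = False  # default: not incremental


def send_order(requests):
    """Order non-incremental requests: lowest urgency value first.

    `requests` is assumed to be in arrival (request) order. Python's sort is
    stable, so requests with equal urgency keep that order.
    """
    return [r.name for r in sorted(requests, key=lambda r: r.urgency)]


pending = [
    Request("hero.jpg"),               # u=3 by default
    Request("main.css", urgency=0),
    Request("first.js", urgency=1),
    Request("second.js", urgency=1),
]
print(send_order(pending))
# ['main.css', 'first.js', 'second.js', 'hero.jpg']
```

This deliberately ignores the incremental flag and the fact that a real server can only send data it already has available.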

Note: this is all simple if there are only incremental or only non-incremental resources in 1 urgency group. If we start to mix them (say a non-incremental JavaScript and two incremental images, all in u=3, as in our example above), it’s not very clear what to do. Do we send the JS in full first (as it’s non-incremental)? Or should we give some early bandwidth to the images as well (since they’re the same priority AND can be sent incrementally)? Sadly, this is where the RFC itself doesn’t really give concrete guidance, as there are pros and cons for the various options. At this point in time, it also doesn’t matter much, since browsers don’t create these situations yet (though there are plans to change, see below!). For some more discussion on these edge cases, I have a nice video for you.

The new system is also simpler in how it sends the urgency and incremental signals: instead of a special HTTP/3 message, it can just use a new textual HTTP header called, shockingly, priority. The hope is that this overall simpler approach is easier to implement and debug, and that it will lead to much better support and fewer bugs than we had with the H2 system (spoiler for later: that’s not exactly true yet).

Figure 7: The new system uses the new “Priority” HTTP header.
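For a feel of how little there is to the header, here is a simplified sketch of reading its two parameters, falling back to the defaults (urgency 3, non-incremental) when they are absent. Note that this is an assumption-laden shortcut: a real implementation should use a proper Structured Fields parser, and `parse_priority` is just an illustrative name.

```python
DEFAULT_URGENCY = 3
DEFAULT_INCREMENTAL = False


def parse_priority(value):
    """Return (urgency, incremental) from a priority header value.

    Simplified: handles only "u=<0-7>", a bare "i", and "i=?0" / "i=?1".
    Unknown or out-of-range parameters are ignored, keeping the defaults.
    """
    urgency, incremental = DEFAULT_URGENCY, DEFAULT_INCREMENTAL
    for item in (value or "").split(","):
        key, _, raw = item.strip().partition("=")
        if key == "u" and len(raw) == 1 and raw in "01234567":
            urgency = int(raw)
        elif key == "i":
            # A bare "i" means true; "?1" / "?0" are explicit booleans.
            incremental = raw in ("", "?1")
    return urgency, incremental


print(parse_priority("u=0, i"))  # (0, True)
print(parse_priority("u=5"))     # (5, False)
print(parse_priority(None))      # (3, False)
```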

That’s at least the idea. In practice, the HTTP header can only be used to signal a resource’s initial priority. If the priority needs to be updated later (say a lazy loaded image first gets low priority, but needs to switch to high when scrolled into view), this sadly cannot be done using an HTTP header. For that, we do need a special H3 (and H2!) binary message: the PRIORITY_UPDATE frame. These details aren’t really important for most people, but I mention this because while Firefox and Safari use the HTTP header, Chromium does not. For “reasons”, Google only uses the PRIORITY_UPDATE frame, even to signal the initial priority (put differently: it just immediately overrides the default priority).
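For the protocol nerds, the sketch below builds the bytes of such an HTTP/3 PRIORITY_UPDATE frame for a request stream. It relies on my reading of the RFCs: frame type 0xF0700, a payload consisting of the prioritized stream ID followed by the same text as the header value, and QUIC variable-length integers for the type, length and stream ID. The function names are illustrative, and a real stack would send this on the HTTP/3 control stream.

```python
PRIORITY_UPDATE_REQUEST_STREAM = 0xF0700  # frame type for request streams


def encode_varint(value):
    """Encode a QUIC variable-length integer (2-bit length prefix)."""
    if value < 0:
        raise ValueError("varints are unsigned")
    if value < 0x40:
        return value.to_bytes(1, "big")
    if value < 0x4000:
        return (value | 0x4000).to_bytes(2, "big")
    if value < 0x40000000:
        return (value | 0x80000000).to_bytes(4, "big")
    if value < 0x4000000000000000:
        return (value | 0xC000000000000000).to_bytes(8, "big")
    raise ValueError("value too large for a varint")


def priority_update_frame(stream_id, field_value):
    """Build frame bytes: type, length, then stream ID + priority text."""
    payload = encode_varint(stream_id) + field_value.encode("ascii")
    return (
        encode_varint(PRIORITY_UPDATE_REQUEST_STREAM)
        + encode_varint(len(payload))
        + payload
    )


print(priority_update_frame(4, "u=1").hex())
# 800f07000404753d31
```

Reading the output: `800f0700` is the frame type, `04` the payload length, the next `04` the stream ID, and `753d31` is simply the ASCII text `u=1`.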

It’s important to note that this new scheme is not exclusive to HTTP/3. Indeed, the intent is that it would be backported to existing H2 implementations over time (though, to my knowledge, no H2 stack has adopted this today). Additionally, it’s called the “extensible” scheme, with a view towards adding additional parameters beyond “urgency” and “incremental” in the future.

Browser differences

As I mentioned above, browsers used the complex HTTP/2 system in very different ways. Each of the major browser engines (Chromium, Firefox and Safari) produced radically different prioritization trees and signals. Cloudflare has a quite extensive blogpost on this, and I have an academic paper that goes into way too much detail.

For the rest of the post, I will focus on HTTP/3 with the new system only, as all 3 of the main browsers support it. I was curious to find out if there are still big differences in their approaches. Sadly, only Chrome has some accessible public documentation of their approach and logic, and no one had looked into Safari and Firefox behaviour yet, so it was time to roll up my sleeves and get my hands dirty!

I could make a cynical remark here about the Googlers releasing (excellent) blog posts on the encompassingly named “web.dev” talking about “browser features” but then often only describing how their own browser acts. However, let’s not do that, as similar remarks can be made towards the other browser vendors who (in my opinion) put way too little money towards public documentation.

I immediately ran into two problems:

- Observing the new signals was difficult, as no tools have support for them yet. They don’t show up (in their raw form) in the browser devtools, nor in WebPageTest. I decided to modify the excellent aioquic HTTP/3 server to add some additional logging for the prioritization signals.

- I asked around, but there was no single test page that included all the different resource loading options that can impact prioritization (async/defer, lazy/eager, fetchpriority, preload/prefetch, etc.). I thus created my own test page that includes a whopping 36 different situations to make it easy to test.

I then hosted the custom test page on the custom HTTP/3 server and loaded it with the 3 browsers. I stored the HAR files from the browsers, and the logs from the server to find out what the browsers were actually sending on the wire. More details on the results are/will be available on github, and I’ll highlight the key points below.

Raw protocol details

I’ll start here with some of the raw, low-level results. If you don’t care about the details, skip to the next section, which provides a more high-level discussion.

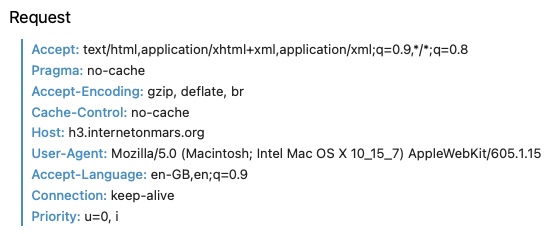

Figure 8: A part of the raw results. Source

Firstly, as I mentioned before, Chromium uses only the PRIORITY_UPDATE frame, and not the HTTP header. Firefox and Safari do the opposite, only using the header. Due to the nature of the test page (just the initial load), I wasn’t able to observe if the browsers actually send updates, though it is known that Chromium does this for images (which start at a low priority and are then bumped to high if they are visible). In this situation, Chromium just sends 2 PRIORITY_UPDATE frames, one for the initial priority, and one for the actual update afterwards. Protocol nerd detail: the initial PRIORITY_UPDATE is sent before the HTTP headers!

A second big difference is the use of the incremental parameter. Chromium and Firefox never set it, causing it to default to “off”. This means that servers should not distribute bandwidth across multiple resources; the most important resource should be loaded in full before moving to the next. Safari does the opposite: it sets the incremental parameter for all resource types, including render-blocking JS and CSS resources. As explained above, that’s not always a great idea.

It’s important to note that there are concrete plans to change Chromium’s behaviour in the near future. From what I’ve heard, it would set the incremental parameter for everything, except the (high priority) JS and CSS files. This would solve some long standing bugs, such as this one from Wikipedia, who found large HTML downloads can delay render-blocking CSS without a good reason. This work will be tracked in this crbug, which highlights another issue with Chromium’s sequential-only approach. Crucially, this means that Chromium will use different prioritization logic for HTTP/2 compared to HTTP/3 (as for HTTP/2, it also doesn’t use the equivalent of the incremental parameter today). This could have a large impact on your pages depending on your setup, and it might be yet another reason to switch to HTTP/3 sooner rather than later. Finally, note that this is already the case for Firefox as well, which does use incremental signals in HTTP/2, but not in HTTP/3.

Thirdly, there are some minor differences in how the signals are used. For example, Chromium and Firefox don’t explicitly send a signal for “u=3”, as it’s the default, which they expect the servers to respect. Safari however does send “u=3,i” explicitly. The browsers also use different urgency values. Internally, Chromium and Safari use 5 priority levels (conceptually: Highest, High, Medium, Low, Lowest), while Firefox seems to use just 4. Chromium maps these to the first 5 urgency numbers (0,1,2,3,4), while Safari spreads them out more (0,1,3,5,7) and Firefox skips 0 (1,2,3,4). This should have no impact on how servers deliver resources (lower urgency values are more important, no matter which exact number is used), but it’s still interesting we have 3 different approaches even for such a simple system.

For the key learnings below, I’ve mapped the different numerical urgency values to Highest, High, Medium, Low, Lowest (except for Firefox, which only uses 4, and so doesn’t have a “Lowest”).
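That mapping is simple enough to write down as a small lookup. The sketch below uses the urgency values listed above for each browser; the names `BROWSER_URGENCIES` and `urgency_label` are made up for this example.

```python
LABELS = ["Highest", "High", "Medium", "Low", "Lowest"]

# Urgency values each browser was observed to use, most important first.
BROWSER_URGENCIES = {
    "chromium": [0, 1, 2, 3, 4],
    "safari": [0, 1, 3, 5, 7],
    "firefox": [1, 2, 3, 4],  # only 4 levels, so never "Lowest"
}


def urgency_label(browser, urgency):
    """Map a browser's numerical urgency to its conceptual priority label."""
    levels = BROWSER_URGENCIES[browser]
    if urgency not in levels:
        raise ValueError(f"{browser} was not observed using u={urgency}")
    return LABELS[levels.index(urgency)]


print(urgency_label("chromium", 3))  # Low
print(urgency_label("safari", 3))    # Medium
print(urgency_label("firefox", 3))   # Medium
```

The same number on the wire can thus mean a different conceptual level depending on which browser sent it.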

Key learnings

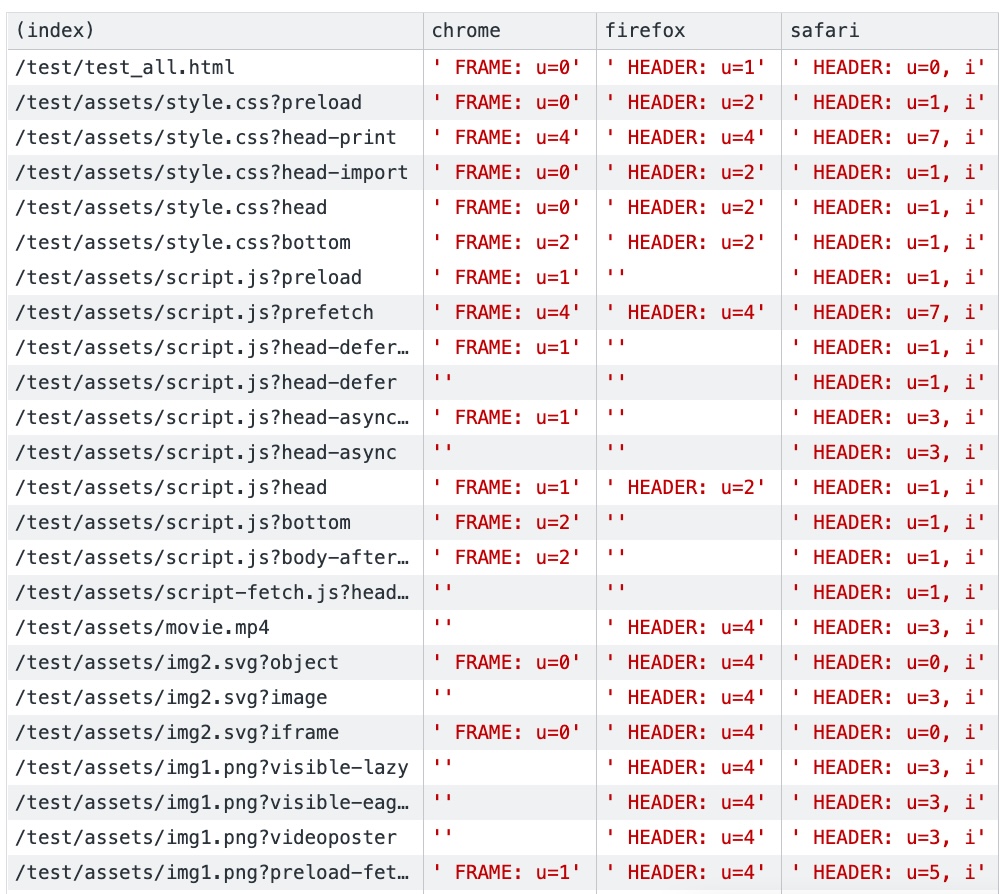

As you probably expected, besides the protocol details, the browsers also differ quite a bit in the importance they assign to different types of resources and loading methods. Below I’ll discuss a few groups of resource types (HTML and fonts, CSS, JS, images, fetch) and some key differences between the 3 browsers. Note that I’ll try to refrain from “passing judgement” on which approach is the best here. This will depend on your specific page setup and which features you use. See these results more as a guide on how to (potentially) tweak things on a per-browser basis.

| Type / Priority | Highest | High | Medium | Low | Lowest |
|---|---|---|---|---|---|
| Main resource (HTML) | | | | | |
| Font (preload) | | | | | |
| Font (@font-face) | | | | | |

Luckily, all browsers agree the main HTML document is the most important (who knew!). However, for fonts, we see major differences. Chromium finds fonts as important as the HTML itself, while Safari and Firefox put them at Medium or even Low. Especially comparing this to the priority of other resources like CSS and JS (see below), Chromium puts a much higher emphasis on fonts than the other two browsers. Preloading fonts is also interesting: Chromium actually lowers the priority in this case (likely because preloading is used to hint at resources that will be needed down the line, but probably not right now). Firefox employs the opposite logic, assigning preloaded fonts a higher priority.

| Type / Priority | Highest | High | Medium | Low | Lowest |
|---|---|---|---|---|---|
| CSS (preload) | | | | | |
| CSS (head) | | | | | |
| CSS (import) | | | | | |
| CSS (print) | | | | | |
| CSS (bottom) | | | | | |

The key difference for CSS loaded in the <head> is that Chromium assigns it the highest priority, putting it on-par with the HTML document, while the others put all CSS in the “High” category. For CSS lower in the HTML (in my test case, on the very bottom), Chromium interestingly puts it in “Medium” (which makes sense, as it isn’t really “render blocking” for useful content at that point). All browsers (accurately) put the CSS with media="print" in their lowest priority (remember: Firefox doesn’t do “Lowest”).

| Type / Priority | Highest | High | Medium | Low | Lowest |
|---|---|---|---|---|---|
| JS (preload) | | | | | |
| JS (prefetch) | | | | | |
| JS (head) | | | | | |
| JS (async) | | | | | |
| JS (defer) | | | | | |
| JS (body) | | | | | |
| JS (bottom) | | | | | |

For JS, things are a bit all over the place. All browsers emphasize render-blocking JS in the <head>, but besides that things diverge. For preloaded JS, only Firefox reduces the priority (probably using Chromium’s logic for fonts), but all browsers do (correctly) severely downgrade prefetched JS. Async/defer are weirder though, with both Chromium and Firefox assigning them equal priorities; personally, I’d maybe expect defer to be lower priority due to the semantics? Safari is the odd one here though, making defer higher priority than async (I really don’t see a good reason for that, as it should support the feature). Additionally, Safari assigns equal priority to JS that’s loaded later on the page compared to the <head>, while the others employ lower values. Finally, Safari uses the same priority (High) for almost all CSS and JS, which means less important files can easily delay crucial ones, especially since Safari uses the “incremental” parameter for all requests (see above).

| Type / Priority | Highest | High | Medium | Low | Lowest |
|---|---|---|---|---|---|
| Image (preload) | | | | | |
| Image (preload + fetchpriority) | | | | | |
| Image (body) | | | | | |
| Image (lazy) | | | | | |
| Image (hidden) | | | | | |
| Image (CSS bg) | | | | | |
| Image (poster) | | | | | |
| video | | | | | |

For the images, the behaviours are more consistent and (arguably) more expected. One interesting anomaly is that Safari gives a lower priority to hidden images that are not lazy loaded (normal <img> inside a display: none <div> in my test page), while Firefox and Chromium treat that the same as a visible image. Additionally, we have two clear examples that highlight that prioritization alone isn’t enough: both preload and lazy loading do not impact the image priority at all! These features will only impact when a resource is actually requested (see above)! This also shows very clearly why we need the new fetchpriority, as that’s the thing that actually increases the priority (at least in Chromium, though Firefox is reported to be working on support). Finally, the test page also had a hidden lazy loaded image, which wasn’t requested in any of the browsers (as expected!).

| Type / Priority | Highest | High | Medium | Low | Lowest |
|---|---|---|---|---|---|
| Fetch (inline) | | | | | |
| Fetch (defer) | | | | | |
| Fetch (fetchpriority) | | | | | |
| Fetch (manual priority) | | | | | |

Finally, I wanted to test the prioritization of requests made using the JavaScript fetch() API. Here again, the browsers disagree quite heavily. Chromium finds these requests very important, while Firefox defaults to a Low priority (equal to images or, indeed, even prefetches…). I also tested doing fetch() from inside a deferred JS file (second line), as I thought maybe Chromium would give it lower priority (in line with the deferred JS itself), but that doesn’t happen. Next, we have another illustration of the usefulness of the fetchpriority attribute, in this case to lower the priority in Chromium.

Finally, I wanted to try overriding the priority information. After all, in the new system this is done using an HTTP header, and you can set custom headers in the fetch() call! Unsurprisingly, when manually passing a header of priority: u=0,i, we again find 3 different behaviours:

Figure 9: Browser differences in handling a custom priority HTTP header. Source

Chromium sends both its PRIORITY_UPDATE frame and the custom header. Firefox simply sends 2 priority header fields: its own and then the one from fetch(). I’m not 100% sure, but I think that’s not really allowed per the HTTP RFC, Mozillians. The two values are contradictory, so it’s not clear which the server should choose (in the table above, I’ve chosen Firefox’s default of u=4). Finally, Safari overrides its own header with the one we passed to fetch(), which is arguably the “correct” (or at least expected) behaviour.

Overall, it was somewhat surprising to me that the browsers allow manual setting of this header, as previously in discussions at the IETF they indicated they wouldn’t (at least Chromium was quite vocal about it). This can in theory be used to do very fine-grained resource loading with custom priorities for things like Single Page Apps, which would be interesting to explore!

In conclusion, I find it remarkable how much the browsers disagree on how important certain types of resources are. You would expect this would be more consistent, as it’s not dependent on browser implementation, but rather the (HTML) loading semantics, which are (should be) the same across browsers. It’s almost as if these approaches are chosen somewhat willy-nilly with a best-guess approach, without actually testing (nor properly documenting) them in the wild. I feel another rant coming on, so quickly, let’s switch to the next section!

Server differences

As we’ve seen, even in the new and simpler system, there are still plenty of differences in how and which prioritization signals the browsers send to the servers. Still, for the servers this shouldn’t matter: they should just be able to follow whatever the browser throws at them, and be able to adhere to that as best as possible. But do they?

Note: it’s not always possible to follow the signals to the letter. Especially in more complex setups (for example with a CDN), it can be that there’s no data yet available for higher priority resources (for example, they weren’t cached at the edge yet), and it makes sense to start sending lower priority data in the meantime.

Again, I found that no one had yet done a survey of (mature) HTTP/3 servers to determine their support for the new prioritization system (for reference, this was done for HTTP/2 servers a while ago). Time to get my hands dirty, again.

Sadly, here I found some less than stellar results. Almost none of the servers I tested fully adhered to even relatively straightforward priority signals, and most had severe problems with the more complex combinations (which, again, aren’t used by browsers (yet!)).

Since this was the case, I have decided to not “name and shame” server implementations here, as I don’t think that would be a constructive approach. Instead, I have contacted, and will continue to contact, server implementers directly to see how the issues can be resolved. Then, in 6 months or a year, we can revisit and do a proper comparison.

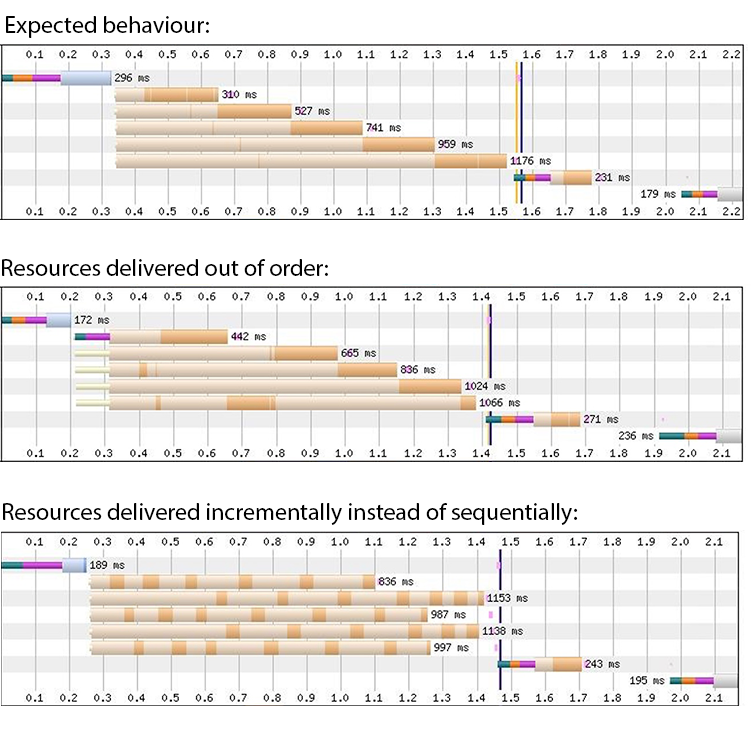

Just to give you an idea of the bad behaviour observed however, look at these WebPageTest screenshots using Patrick Meenan’s original test page loaded via Chromium:

Figure 10: HTTP/3 server differences when loading the same page in the same browser.

As you can see, data is not always received per the browser’s signals, which can again have an impact on some Webperf metrics. If you’ve started experimenting with HTTP/3 and you get some less-than-expected results, this might be one of the reasons/things to look into.

Personally, I’m quite surprised that these issues exist. One of the reasons for bad HTTP/2 server behaviour was that the HTTP/2 prioritization tree was quite complex to implement correctly. Arguably, the new HTTP/3 system should be much easier, and still we see some high-impact bugs in so-called mature implementations. I could rant about this for a few hundred thousand more words, but instead, let’s just conclude this post, shall we 😉

Conclusion

The main thing I hope you take away from this article is that browsers have major differences in how they load resources over HTTP (and prioritization is just one example of this!). Depending on your page setup, you might see medium or large webperf differences between the browsers, and thus you should be aware of these nuances to deal with these edge cases.

I hope it also shows how important the new “priority hints” and fetchpriority attribute are. They don’t just allow changing a browser’s default behaviour, they allow getting more consistent behaviour across browsers (at least once Firefox and Safari get around to implementing them…).

Finally, on a broader “Software Engineering” note, it’s interesting that having a simpler system does not immediately imply consistent behaviour across implementations, nor does it mean those stacks will be bug-free from the start. Still, I’m proud to have helped design the new HTTP/3 system. I think it is a step in the right direction and I hope we get to backport it to HTTP/2 implementations down the line as well.

Acknowledgements

Thanks in particular to Barry Pollard, Lucas Pardue and Patrick Meenan for some help when writing this article.