Everything, On the Main Thread, All at Once

Arrays are in every web developer’s toolbox, and there are a dozen ways to iterate over them. Choose wrong, though, and all of that processing time will happen synchronously in one long, blocking task. The thing is, the most natural ways are the wrong ways. A simple for..of loop that processes each array item is synchronous by default, while Array methods like forEach and map can ONLY run synchronously. You almost certainly have a loop like this waiting to be optimized right now.

What’s the problem with long tasks, anyway? Every long task is a liability, because it leaves the page unable to respond while it runs. If the user interacts with the page at just the right (or wrong) time, the browser won’t be able to handle that interaction until the task completes, which adds to the interaction’s input delay and drags down its Interaction to Next Paint (INP) performance. You can think of long tasks like potholes on a road, forcing drivers to dodge them or risk damaging their cars, an unpleasant experience either way. Likewise, long tasks create unresponsive UIs, which can frustrate users and impact business metrics. They’re especially problematic when they’re not just coinciding with a user interaction, but running in response to one. It’s no longer a matter of poor timing, because every click necessarily becomes a slow click.

Synchronously processing large arrays is one of the easiest ways to introduce long tasks. Even if the unit of work performed on each item in the array is reasonably fast, that time scales up linearly with the number of items. For example, if a CPU can complete one unit of work in 0.25 ms, and there are 1,000 units, the total processing time will be 250 ms, creating a long task and exceeding the threshold for a fast and responsive interaction. The key to breaking up the long task is to use the repetition to your advantage: each iteration of the loop is an opportunity to interrupt the processing and update the UI as needed.
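To put numbers on that example, here is a minimal sketch that times a synchronous loop and compares it against the 50 ms cutoff browsers use to classify a task as long. The names measureSyncLoop, busyWait, and LONG_TASK_THRESHOLD_MS are made up for illustration, and the busy-wait is only a stand-in for real per-item work.

```javascript
// Illustrative only: these names are hypothetical, not part of any library.
const LONG_TASK_THRESHOLD_MS = 50; // tasks longer than this count as long tasks

// Runs `process` on every item synchronously and reports how long it took.
function measureSyncLoop(items, process) {
  const start = performance.now();
  for (const item of items) {
    process(item);
  }
  const duration = performance.now() - start;
  return { duration, isLongTask: duration > LONG_TASK_THRESHOLD_MS };
}

// Stand-in for real work: spin for roughly `ms` milliseconds.
function busyWait(ms) {
  const end = performance.now() + ms;
  while (performance.now() < end) {
    // burn CPU
  }
}

// 1,000 units at roughly 0.25 ms each add up to about 250 ms in one task.
const result = measureSyncLoop(Array.from({ length: 1000 }), () => busyWait(0.25));
console.log(`${Math.round(result.duration)} ms, long task: ${result.isLongTask}`);
```

No single item is slow here, yet the loop as a whole blocks the main thread several times longer than the long task cutoff.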

Optimizing interaction responsiveness

Interrupting a task to allow the event loop to continue turning is known as yielding. There are a few ways to yield, with the classic approach being setTimeout with a delay of 0 ms, or the more modern alternative: scheduler.yield. It’s not currently supported in all browsers, so production-ready use cases will need a polyfill or fall back to setTimeout. In both cases, the trick to making the loop asynchronous is to use async/await. But there’s a catch.
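Before getting to the catch, here is a minimal sketch of the fallback mentioned above, assuming a plain feature check is good enough for your needs. The name yieldToMain is hypothetical, and a real polyfill may do more than this.

```javascript
// Hypothetical helper: prefer scheduler.yield where it exists,
// otherwise fall back to a zero-delay timeout.
function yieldToMain() {
  if (globalThis.scheduler && typeof globalThis.scheduler.yield === 'function') {
    return globalThis.scheduler.yield();
  }
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Usage inside an async function:
//   await yieldToMain();
```

Either branch returns a promise, so the calling code can await it without caring which mechanism is in use.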

If you’re using an Array method like forEach or map, you’ll quickly realize that this doesn’t work:

    function handleClick() {
      items.forEach(async (item) => {
        await scheduler.yield();
        process(item);
      });
    }

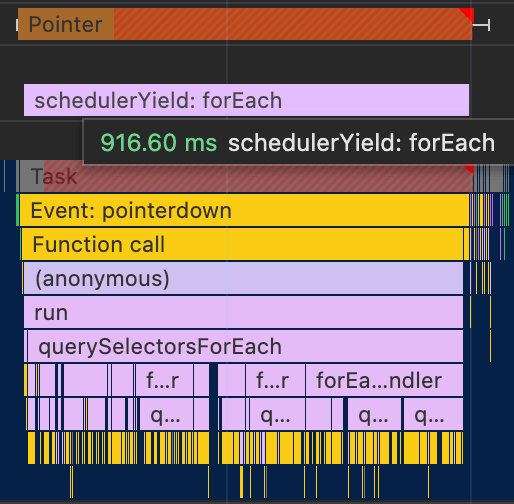

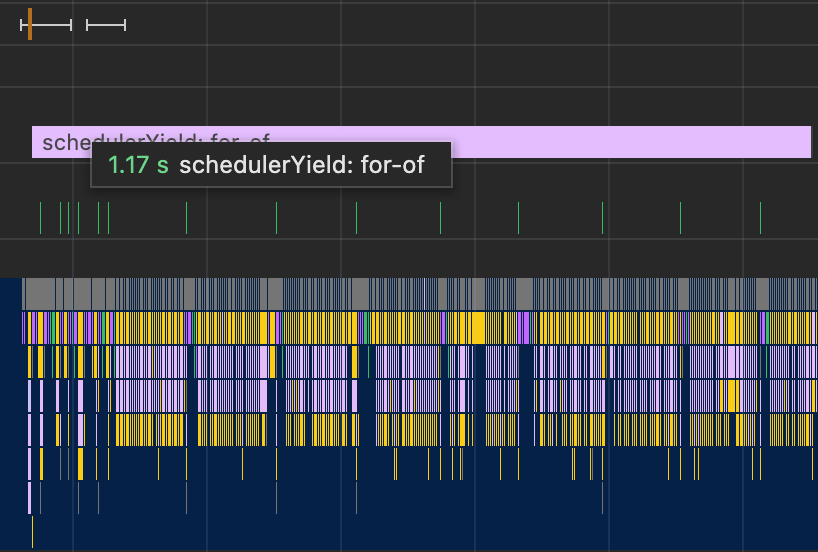

forEach doesn’t care if your callback function is asynchronous; it will plow through every item in the array without awaiting the yield. And it doesn’t matter which approach you use, scheduler.yield or setTimeout. Apparently, this trips up a lot of developers, with this StackOverflow question having been viewed 2.4 million times since it was asked in 2016. The solution is in the top answer: switch to using a for..of loop instead.

    async function handleClick() {
      for (const item of items) {
        await scheduler.yield();
        process(item);
      }
    }

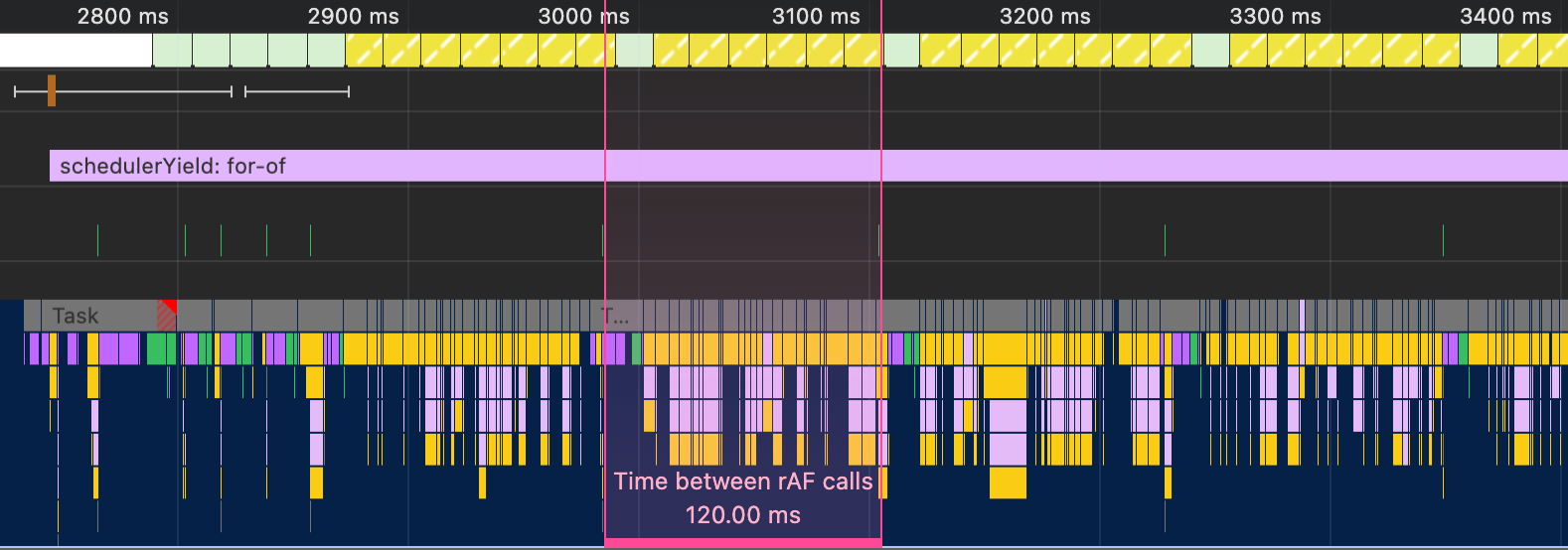

Instead of a monolithic long task blocking the click handler, now we’ve spread the work out into smaller tasks, responding to the interaction instantly. Problem solved, right?

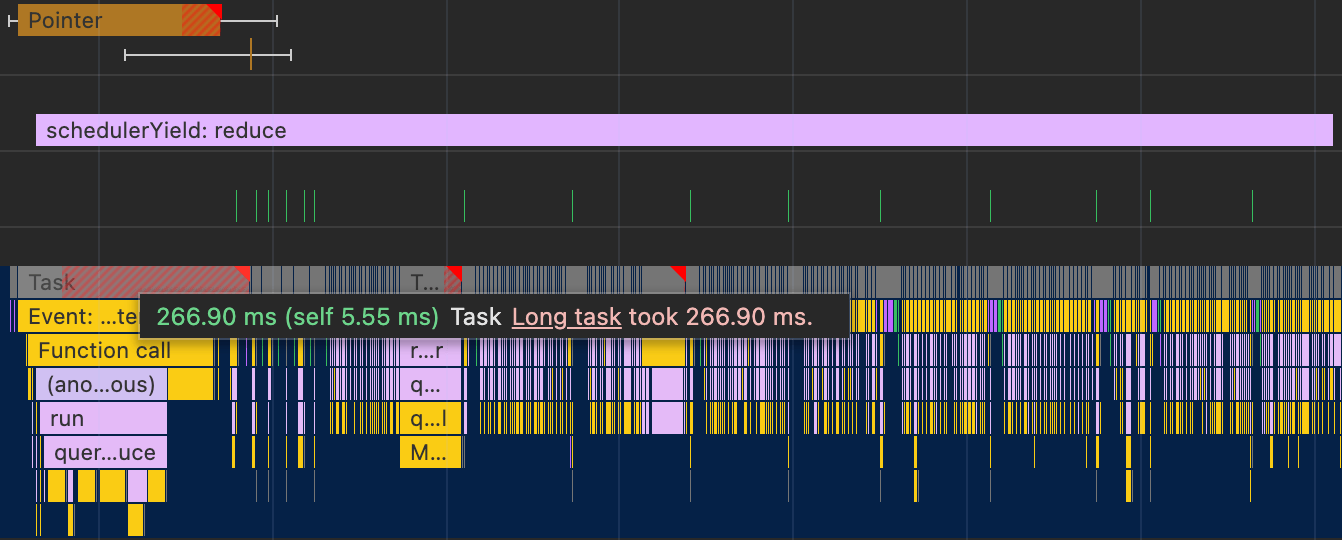
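If you want to convince yourself of the difference between the two loops outside of DevTools, a small sketch like this one logs the order of events. It uses a zero-delay timeout as a stand-in for scheduler.yield so it can run anywhere, and all of the function names are made up for illustration.

```javascript
// Stand-in for scheduler.yield() so the sketch also runs outside the browser.
const yieldStandIn = () => new Promise((resolve) => setTimeout(resolve, 0));

async function withForEach(items, log) {
  items.forEach(async (item) => {
    await yieldStandIn();
    log.push(`processed ${item}`);
  });
  log.push('loop finished'); // reached before any item is processed
}

async function withForOf(items, log) {
  for (const item of items) {
    await yieldStandIn();
    log.push(`processed ${item}`);
  }
  log.push('loop finished'); // reached only after every item is processed
}

async function compareLoops() {
  const forEachLog = [];
  await withForEach([1, 2, 3], forEachLog);
  // Snapshot right away, before the pending callbacks get a chance to run.
  const forEachSnapshot = [...forEachLog];

  const forOfLog = [];
  await withForOf([1, 2, 3], forOfLog);
  return { forEachSnapshot, forOfLog };
}
```

With forEach, the function is already done by the time the first callback resumes, so nothing is actually waiting on the yield. With for..of, each iteration waits its turn.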

Before we get into the major problem with this approach, you might have noticed the third most upvoted answer on that StackOverflow question, which recommends using the reduce method. In case you were tempted to cling to your functional programming tendencies and use reduce to break up the long task, think again.

    function handleClick() {
      items.reduce(async (promise, item) => {
        await promise;
        await scheduler.yield();
        process(item);
      }, Promise.resolve());
    }

This approach passes a promise along from one iteration to the next, which we can await before processing the next item. However, the issue with this is that reduce still plows through the entire array, synchronously queuing up each microtask. It’s not until the promises are fulfilled that it starts processing the items. In other words, even though the actual processing happens asynchronously, the amount of overhead is still enough to make the click handler slow.

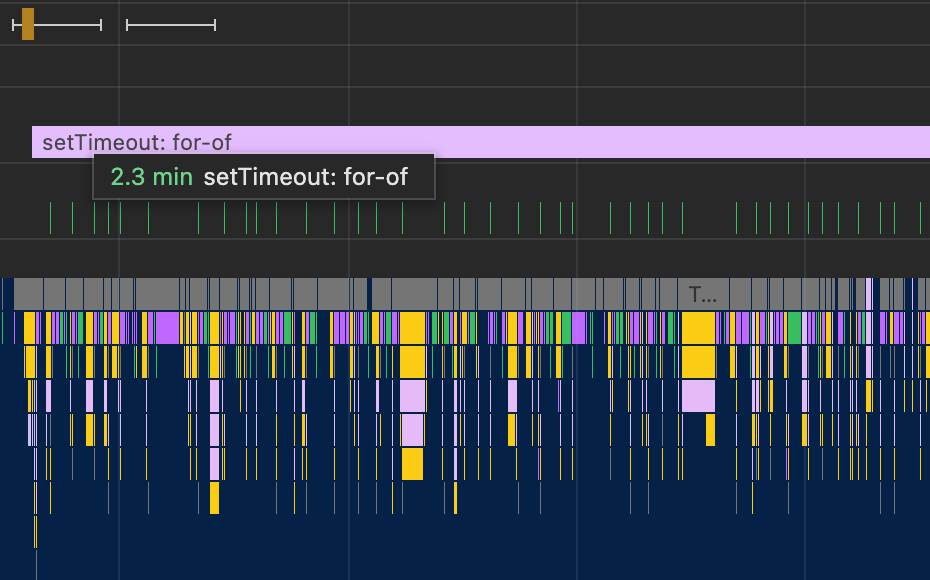

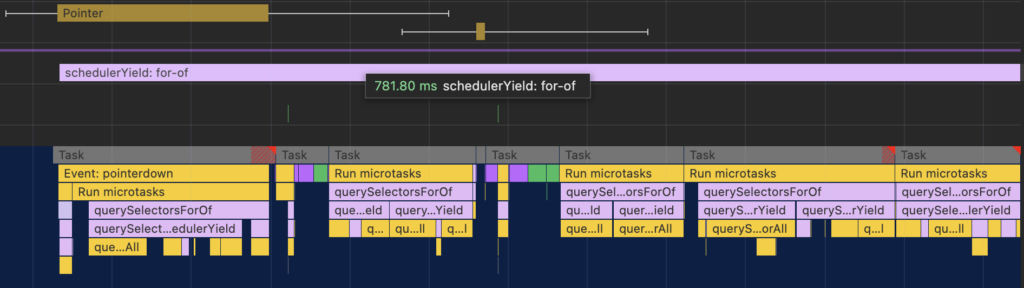

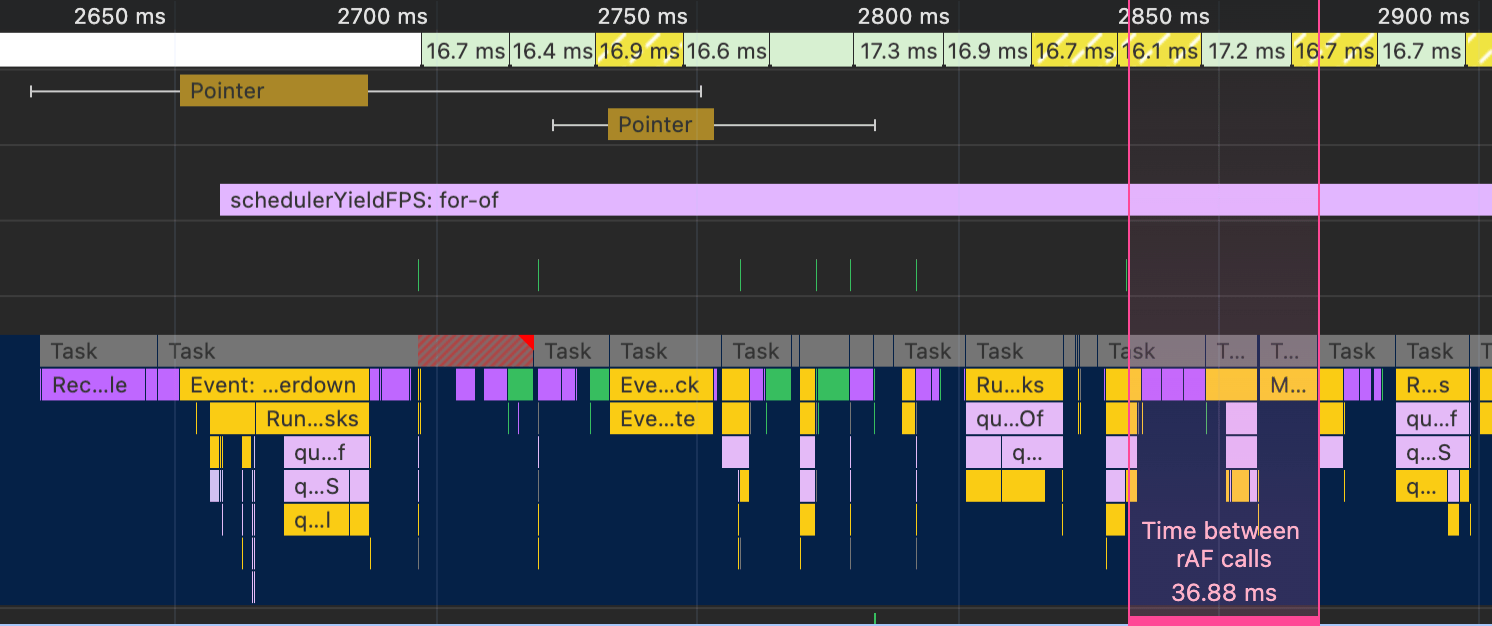
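The eager behavior of reduce is easy to check with a couple of counters. This is a minimal sketch, again using a zero-delay timeout in place of scheduler.yield, with hypothetical names throughout.

```javascript
// Stand-in for scheduler.yield() so the sketch also runs outside the browser.
const yieldStandIn = () => new Promise((resolve) => setTimeout(resolve, 0));

function runReduce(items) {
  let callbackCalls = 0;
  const processed = [];

  const done = items.reduce(async (promise, item) => {
    callbackCalls++;       // runs synchronously, once per item
    await promise;         // wait for the previous item's chain
    await yieldStandIn();
    processed.push(item);  // runs later, one item at a time
  }, Promise.resolve());

  // By the time reduce returns, every callback has already been entered,
  // but no item has been processed yet.
  return {
    callsWhenReduceReturned: callbackCalls,
    processedWhenReduceReturned: processed.length,
    processed,
    done,
  };
}
```

For a large array, that synchronous pass of creating async functions and promises is the overhead that keeps the click handler slow.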

Yielding within a for..of loop seems like the best way to achieve responsive interactions, but the problem is that we’re yielding on EVERY iteration of the loop. Let’s see what happens in browsers that don’t support scheduler.yield:

    async function handleClick() {
      for (const item of items) {
        // Fallback yield: resolve a promise from a zero-delay timeout.
        await new Promise(resolve => setTimeout(resolve, 0));
        process(item);
      }
    }

With setTimeout, the job takes over 2 minutes to complete! Compare that with scheduler.yield, which completes in about 1 second. The huge disparity comes down to the fact that these are nested timeouts. Once timeouts are nested more than a few levels deep, browsers enforce a minimum delay of 4 ms between them, a penalty that tasks deferred with scheduler.yield don’t pay. But that’s not to say that using scheduler.yield on every iteration comes without a cost. Both approaches introduce some overhead, which can be mitigated with batching.
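As a back-of-the-envelope check, the floor on nested timeouts alone puts a lower bound on the total time. The sketch below assumes a 4 ms floor per yield and uses a made-up item count of 30,000 purely for illustration; it is not the size of the array used for the measurements above.

```javascript
// Assumed minimum gap between deeply nested timeouts, in milliseconds.
const NESTED_TIMEOUT_FLOOR_MS = 4;

// Lower bound on the time spent just waiting on timeouts when yielding
// once per item. It ignores the processing time itself and the first
// few timeouts, which are not clamped.
function minimumTimeoutOverheadMs(itemCount, floorMs = NESTED_TIMEOUT_FLOOR_MS) {
  return itemCount * floorMs;
}

// Hypothetical array size, for illustration only.
const overheadSeconds = minimumTimeoutOverheadMs(30000) / 1000;
console.log(`${overheadSeconds} s spent waiting on timers`);
```

At that scale the waiting alone accounts for two minutes, regardless of how fast each item is to process.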

Optimizing total processing time

Batching is processing multiple iterations of the loop before yielding. The interesting problem is knowing when to yield. Let’s say you yield after processing every 100 items in the array. Did you solve the long task problem? Well, that depends on the CPU speed and how much time the average item takes to process, and both of those factors will vary depending on the client’s machine.
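For reference, count-based batching might look something like this sketch. The names processInFixedBatches and ITEMS_PER_BATCH are hypothetical, and the yield function is passed in so that either scheduler.yield or a timeout can be used.

```javascript
// Hypothetical batch size: yield after every 100 items.
const ITEMS_PER_BATCH = 100;

// Processes items in order, yielding after each full batch.
// Returns the number of times it yielded.
async function processInFixedBatches(items, process, yieldFn) {
  let yields = 0;
  for (let i = 0; i < items.length; i++) {
    if (i > 0 && i % ITEMS_PER_BATCH === 0) {
      await yieldFn();
      yields++;
    }
    process(items[i]);
  }
  return yields;
}

// Usage inside an async function, assuming scheduler.yield is available:
//   await processInFixedBatches(items, process, () => scheduler.yield());
```

The batch size is fixed, but the time each batch takes is not, which is exactly the weakness described above.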

Rather than batching by number of items, a much better approach would be to batch items by the time it takes to process them. That way you can set a reasonable batch duration, say 50 ms, and yield only when it’s been at least that long since the last yield.

    const BATCH_DURATION = 50;
    let timeOfLastYield = performance.now();

    function shouldYield() {
      const now = performance.now();
      if (now - timeOfLastYield > BATCH_DURATION) {
        timeOfLastYield = now;
        return true;
      }
      return false;
    }

    async function handleClick() {
      for (const item of items) {
        if (shouldYield()) {
          await scheduler.yield();
        }
        process(item);
      }
    }

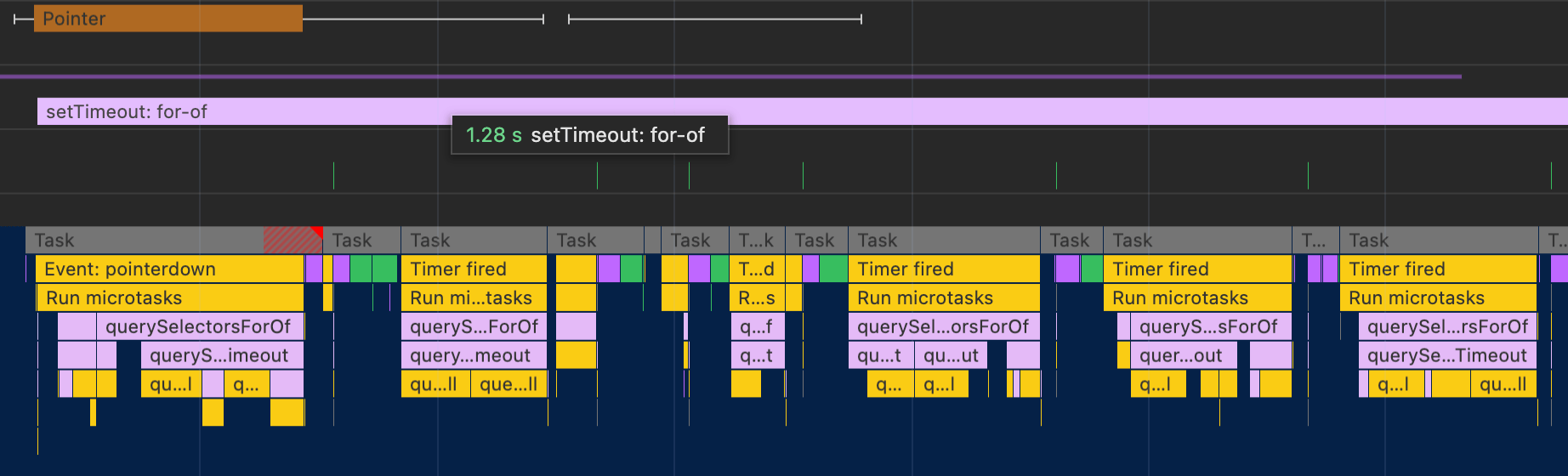

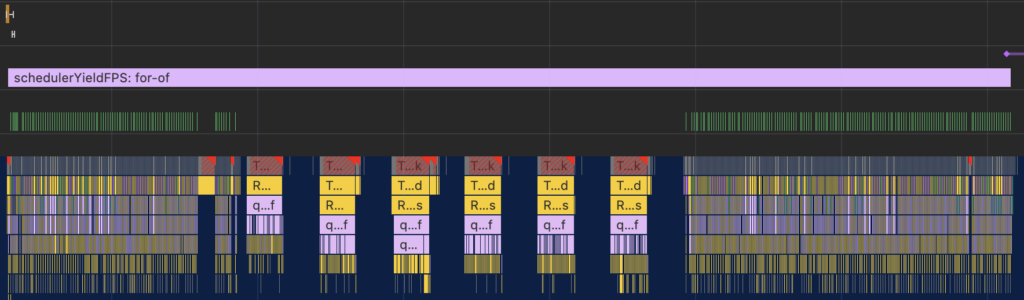

And here are the results with setTimeout:

The choice of batch duration is a tradeoff between minimizing the amount of time a user would spend waiting if they interacted with the page during the batch processing and the total time to process everything in the array. If you chunk up the work into 100 ms batches, that’s fewer interruptions and faster throughput, but at worst that’s also 100 ms of possible input delay, which is already half the budget for a fast interaction. On the other hand, with 10 ms batches, the worst-case input delay is almost negligible, but there are more interruptions and throughput is slower.
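A rough way to reason about that tradeoff is to count the yields for a given amount of work. This sketch assumes the work divides evenly into batches and ignores the cost of each yield; describeBatching and the 1,000 ms workload are made up for illustration.

```javascript
// Rough model: how many yields does a workload need at a given batch
// duration, and how long might an interaction wait in the worst case?
function describeBatching(totalWorkMs, batchMs) {
  return {
    yields: Math.max(0, Math.ceil(totalWorkMs / batchMs) - 1),
    // Approximation: an interaction arriving at the start of a batch
    // waits for roughly the whole batch.
    worstCaseInputDelayMs: batchMs,
  };
}

// Hypothetical workload of 1,000 ms of total processing.
console.log(describeBatching(1000, 100)); // fewer yields, more input delay
console.log(describeBatching(1000, 10));  // more yields, less input delay
```

Going from 100 ms batches to 10 ms batches multiplies the number of yields by roughly ten, so whatever each yield costs gets multiplied along with it.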

Your primary goal should be to unblock the interaction so that it feels responsive. That could just mean yielding so that you can update the UI with the first few items, or kicking off a loading animation. How often you yield during the rest of the processing time will depend on what your second priority is. Maybe nothing can be shown to the user until the entire array is processed, so your secondary goal should be to finish as quickly as possible. In that case you’ll want to go with a higher batch duration. Or maybe it’s ok to do the work in the background, but the UI should remain as smooth and responsive as possible. That lends itself to a smaller batch duration. When in doubt, 50 ms can be a good compromise, but it’s always a good idea to profile different approaches and pick what works best for your app.

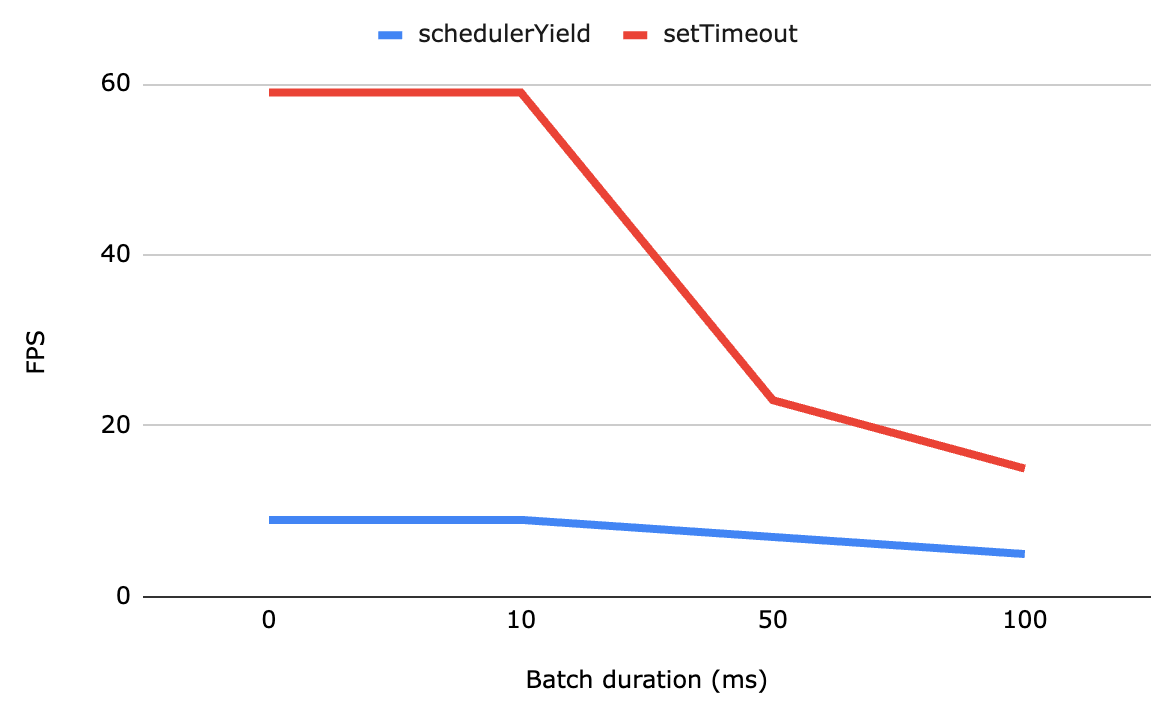

We could stop there, but there’s one more thing that you might want to consider: frame rate. If you look closely at the screenshots above, you’ll notice thin green markers roughly corresponding to the paint cycle. These are custom timings using performance.mark to show when a requestAnimationFrame callback runs. There’s a curious difference in the frame rates of scheduler.yield and setTimeout.
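Markers like these can be produced with a small loop around requestAnimationFrame. This is a minimal sketch rather than the exact code behind the screenshots: the mark name 'frame' and the startFrameMarks helper are assumptions, and the rAF function is injectable only so the sketch can be exercised outside the browser.

```javascript
// Drops a performance mark every time a requestAnimationFrame callback runs.
// Returns a handle whose stop() ends the loop and reports the frame count.
function startFrameMarks(raf = globalThis.requestAnimationFrame) {
  let running = true;
  let frames = 0;

  function onFrame() {
    if (!running) return;
    frames++;
    performance.mark('frame'); // hypothetical mark name
    raf(onFrame);              // schedule the next marker
  }

  raf(onFrame);
  return {
    stop() {
      running = false;
      return frames;
    },
  };
}

// Usage in the browser:
//   const marker = startFrameMarks();
//   ...run the work being profiled...
//   console.log(`${marker.stop()} frames`);
```

The marks appear in the DevTools performance timeline alongside the tasks, which makes gaps between frames easy to spot.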

Optimizing smoothness

To reiterate, if the work needs to be completed as quickly as possible, you should minimize the number of yields. But there are plenty of instances where it’s more important to provide visual feedback to the user that something is happening, like a progress indicator. Even if you’re not showing any progress to the user, you might still want to keep the frame rate reasonably fast to avoid janky animations or scrolling behavior. That’s where the preferential priority of scheduler.yield starts getting in the way.

Surprisingly, with scheduler.yield, the frame rate for batch durations under 100 ms is relatively flat at around 10 FPS. setTimeout, however, follows the expected curve, where more frames are painted as the batch duration decreases, approaching 60 FPS. Tasks scheduled with scheduler.yield are given preferential treatment, so even if you don’t do any batching at all, the browser will prioritize them over the next paint, but only up to a point.

With no batching, the average time between frames is 120 ms, far from the 16 ms you get with tasks scheduled with setTimeout. This means your frame rate will be a lame 8 FPS. If you’re cool with that, you can skip the rest of this section. But I know there are some people who can’t stand the thought of a laggy UI, so here are some tips.

    const BATCH_DURATION = 1000 / 30; // 30 FPS
    let timeOfLastYield = performance.now();

    function shouldYield() {
      const now = performance.now();
      if (now - timeOfLastYield > BATCH_DURATION) {
        timeOfLastYield = now;
        return true;
      }
      return false;
    }

    async function handleClick() {
      for (const item of items) {
        if (shouldYield()) {
          await new Promise(requestAnimationFrame);
          await scheduler.yield();
        }
        process(item);
      }
    }

First, change the batch duration to align with your desired frame rate. When it’s time to yield, before calling scheduler.yield, await a promise that resolves in a requestAnimationFrame callback. This effectively prevents any more work from happening until a frame is painted, ensuring a much smoother UI.

One gotcha is that the rAF callback won’t be fired as long as the tab is in the background. We can make a few adjustments to handle this edge case.

    const BATCH_DURATION = 1000 / 30; // 30 FPS
    let timeOfLastYield = performance.now();

    function shouldYield() {
      const now = performance.now();
      if (now - timeOfLastYield > (document.hidden ? 500 : BATCH_DURATION)) {
        timeOfLastYield = now;
        return true;
      }
      return false;
    }

    async function handleClick() {
      for (const item of items) {
        if (shouldYield()) {
          if (document.hidden) {
            await new Promise(resolve => setTimeout(resolve, 1));
            timeOfLastYield = performance.now();
          } else {
            await Promise.race([
              new Promise(resolve => setTimeout(resolve, 100)),
              new Promise(requestAnimationFrame)
            ]);
            timeOfLastYield = performance.now();
            await scheduler.yield();
          }
        }
        process(item);
      }
    }

The first change is to the shouldYield function, which now checks the page visibility. If the document is hidden, we can afford to yield in larger batches of 500 ms. Even though there is no user to experience a slow interaction, this still introduces a long task that could block the page from becoming visible if the user returns before the work is completed. document.hidden will continue to be true until the visibilitychange event can be handled, so we still need to yield periodically.

The second change is to the way we yield when the document is visible. We need to make sure that we’re not dependent on the rAF callback, so we can race it against a 100 ms timeout, borrowing from Vercel’s await-interaction-response approach. The 100 ms timeout will be throttled to 1000 ms while the tab is backgrounded, but after that, the timeout will fire and work can resume. Resetting timeOfLastYield after the race means that, if the tab was backgrounded in the meantime, the first backgrounded batch can still run for the full 500 ms.

The final change is to the way we yield when the document is hidden. We want the visibilitychange event to fire, but scheduler.yield will always preempt it, delaying the page from becoming visible until the work is completed. That might be worth more investigation because it feels like a bug, but we can work around it by switching to a timeout-based approach. As long as the document is hidden, work will be done in 500 ms batches with an additional 500 ms delay between each batch, adding up to the 1000 ms delay for throttled timeouts. That way, if the user returns before the work is completed, the visibility state will be updated and the regular batching logic will kick back in.
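If you end up reusing the race between requestAnimationFrame and a timeout from the visible case, it can be pulled out into a helper. This is one possible sketch, not the code used for the measurements: nextFrameOrTimeout is a hypothetical name, and unlike the inline Promise.race it cancels whichever side loses. The rAF and cancel functions are parameters only so the helper can be exercised outside the browser.

```javascript
// Resolves with 'frame' when the next animation frame fires, or with
// 'timeout' if no frame arrives within `timeoutMs` (for example, because
// the tab is in the background). The losing side is cancelled.
function nextFrameOrTimeout(
  timeoutMs = 100,
  raf = globalThis.requestAnimationFrame,
  caf = globalThis.cancelAnimationFrame
) {
  return new Promise((resolve) => {
    let frameId;
    const timerId = setTimeout(() => {
      caf(frameId);
      resolve('timeout');
    }, timeoutMs);
    frameId = raf(() => {
      clearTimeout(timerId);
      resolve('frame');
    });
  });
}

// Usage in the visible branch of the loop:
//   await nextFrameOrTimeout(100);
//   timeOfLastYield = performance.now();
//   await scheduler.yield();
```

Cancelling the loser is optional, since a settled promise ignores later calls to resolve, but it avoids leaving stray timers and frame callbacks behind on every yield.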

If all of this feels overly complicated, that’s probably because it is. If your application can withstand pausing array iteration while the tab is in the background, then you should skip this last part for the sake of simplicity. In any case, this was a fun exercise in pushing the limits of yielding.

Try it out

If you’d like to try out the different yielding strategies, you can use this demo. That’s also what I used to make the screenshots in this post.

Hopefully this was a useful overview of the “yield in a loop” problem and how I’d go about solving it. Feel free to let me know if I got something wrong. And if you know of a better way, I’d love to hear about it. Good luck out there!

[…] This post originally appeared in the 2024 Web Performance Calendar […]


Why not just use generators and iterators, which are well supported?

Did you even read the article? You should be batching your large array processing.

Could save effort by going off the main thread with service workers?

All of this complexity needed a JS package, so I created one, named yieldy-for-loop.

I was also thinking of using web workers to offload the expensive work, but that means transferring the potentially large array to the worker, and probably back again if the computation returns something. In a real-world scenario, one could try both approaches and compare them to see which gives the better outcome. I’m not sure whether a web worker is suspended when the web app is off screen (most probably not), but it might have some other hidden pitfalls.