Morgan Murrah is a web developer who loves a good coffee and running a few tests. He works for a life sciences marketing agency called CG Life. Morgan is also a contributing volunteer to the Web Sustainability Guidelines 1.0.

This article is noticeably light on numbers for a web performance article but hopefully relevant nonetheless. What mattered to me to write about was software acceptance and getting buy-in for web performance solutions. Essentially: did people accept the tool you proposed to work with? Did they feel a sense of ownership of the results of a test? Did performance stay on their radar or did it drop off?

Over the last two years I have worked to raise the profile of web performance within a number of small organizations and the company I work for. My thesis is that many performance tools used by experts are too complex for most people to understand, act on, or reach for in their particular situation. Starting with a simpler solution is often the most durable way to lead up to a more complex solution.

This article describes one path for introducing a person, or more typically a small organization, to web performance the simpler way. That path starts with Yellow Lab Tools and can lead up to more complex tools in the future.

Remembering the before times…

I believe that many people reading this blog have forgotten what it was like before they gained literacy in web performance topics. In some ways the forgetting seems intentional, as people identify with a niche and feel it is specialized knowledge they can share as a consultant or adviser.

In some situations, often with small organizations, it is more worthwhile to teach someone to do something for themselves than to do it for them on an ongoing basis. This is different from just being an adviser. Teaching and gaining buy-in requires providing tools and test results that are accepted rather than just put away for the future.

When I showed people the waterfall chart they were dazzled but expected me to do it going forward because it was sort of intimidating. Some forgot all about it quickly afterwards. Even with some “oohs” and “aahs” as I showed them graphs and data, the acceptance was not high.

Vs.

When I showed them the Yellow Lab Tools results, they wanted to make this a thing for themselves to do in the future. With a little gentle encouragement to bookmark it, it became routine or part of the arsenal. I felt I had shifted the needle: software had been accepted.

Worse can be better

My argument about this dynamic of software acceptance has a history: “worse is better”, a term from a 1989 essay by Richard P. Gabriel (one of the designers of Common Lisp). In essence, a simpler tool may be more readily adopted and then improved upon than a complex tool that has to be adopted and learned out of the gate.

The influential essay argued that “worse” solutions could have better survival characteristics than a more complete solution that is harder for users to accept initially. The “worse” solution may in fact be better eventually, because it can be improved upon until it is nearly as complete as the “ideal” solution was to start with. Gabriel contrasted this simpler approach with ‘The Right Way’, or what was called the MIT Style, which aimed for completeness rather than simplicity in design:

the design must cover as many important situations as is practical. All reasonably expected cases must be covered. Simplicity is not allowed to overly reduce completeness.

– a design philosophy attributed to the MIT Style

It is my suggestion that Core Web Vitals, by trying to be a unified set of quality signals, can broadly be said to aim for a complete solution comparable to the MIT Style. WebPageTest as a tool also has so many options and possible configurations that it aims to be a complete solution in the same sense.

The Right Way: Core Web Vitals

It may be second nature to you by now to open up https://pagespeed.web.dev/ to look for Chrome User Experience Report (CrUX) data and Lighthouse results about Core Web Vitals for a URL. It is what I personally reach for first. The results arrive quickly and are close at hand. But is it too much for the people about to receive the results?

Explaining what Core Web Vitals are and differentiating lab tests from field data can be information overload in a given situation, before even explaining the 75th percentile and other modifiers. As simple as the Core Web Vitals graph for CrUX data can be, it is built on assumptions about the audience and on information that is not necessarily shared knowledge yet.
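To give a sense of how much sits behind a single “75th percentile” figure, here is a minimal Python sketch. It is a simplification and not how CrUX actually aggregates field data: it uses a plain nearest-rank percentile, and the visit timings are invented for illustration. The good and poor boundaries are the published Core Web Vitals thresholds.

```python
import math

# Published "good" / "poor" boundaries for each Core Web Vital.
# LCP and INP are in milliseconds; CLS is unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def percentile_75(samples):
    """Nearest-rank 75th percentile: the value that 75% of visits are at or below."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def rate(metric, samples):
    """Return (p75, label) for a list of per-visit measurements."""
    good, poor = THRESHOLDS[metric]
    p75 = percentile_75(samples)
    if p75 <= good:
        label = "good"
    elif p75 <= poor:
        label = "needs improvement"
    else:
        label = "poor"
    return p75, label


# Hypothetical LCP timings (ms) from eight visits, invented for illustration.
lcp_visits = [1200, 1500, 1800, 2100, 2300, 2600, 3900, 5200]
print(rate("LCP", lcp_visits))
```

With these made-up numbers, five of the eight visits are individually “good”, yet the 75th percentile lands at 2600 ms and the page is rated “needs improvement”. That gap between “most visits were fine” and the reported rating is exactly the kind of thing that needs explaining to a newcomer.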

The website you are advising on may not currently qualify for CrUX data, in which case only a Lighthouse test result will be returned. In this event you may need to explain the limitations of Lighthouse results. Even if it does return CrUX data, let’s consider a few sources of initial resistance to learning this shared language that is so vital to many web performance workers:

- Acronyms: LCP, INP, CLS and more. Don’t forget what reading these was like before you knew what they meant.

- Literacy about the metrics specifically: what each metric is intended to measure and why it is important. Sometimes I found myself resorting to hand gestures or using words like “glitchy” or “laggy” to describe INP and CLS. Don’t forget that you might start talking about “hero images” for LCP too. I don’t know how this landed with the audience at times.

- Literacy about the concept of Core Web Vitals: “unified guidance for quality signals that are essential to delivering a great user experience on the web.” The person or organization may have no background in this issue at all.

- Resistance to perceptions of being sold software. Some people seem to perceive “web performance” as synonymous with selling a hosted solution or software as a service that they do not necessarily want or need. This is where open source and indie solutions are potentially effective at increasing awareness of web performance. In the future they may invest in a solution or service, but they could prefer to explore other options first while they learn about the issue.

These sources of resistance get in the way of taking ownership of the results as people begin to assign the work to be “an expert’s job” rather than “our job”.

The Right Way by WebPageTest.org

A step up the complexity chain from Yellow Lab Tools is WebPageTest.org, a commercial freemium product. WebPageTest aims for a lot of completeness with elements of simplicity introduced in a good attempt to make the product more user friendly. It combines features such as human-readable summaries of categories of results, including Core Web Vitals, with a powerful and detailed waterfall chart.

A human-readable summary from WebPageTest. Elements of simplicity among complexity.

WebPageTest makes tactical decisions in its own right, such as sensible defaults to keep you on track. It offers suggested latency, location and device choices, for example a cable connection on desktop and 4G on a mobile device. This is good from my perspective, and keeps things a little ‘worse’ in the 1989 essay sense while the defaults are still backed by data.

Still, WebPageTest has a waterfall chart that, due to its potential complexity, has inspired many thousands of words of commentary (one excellent guide is a 91 minute read). It is a whole mini language to decipher and stands as an initial barrier to software acceptance. Even with the inclusion of excellent human-readable summaries, sensible defaults and commentary information, I argue WebPageTest is still not simple enough for many cases.

Enter Yellow Lab Tools

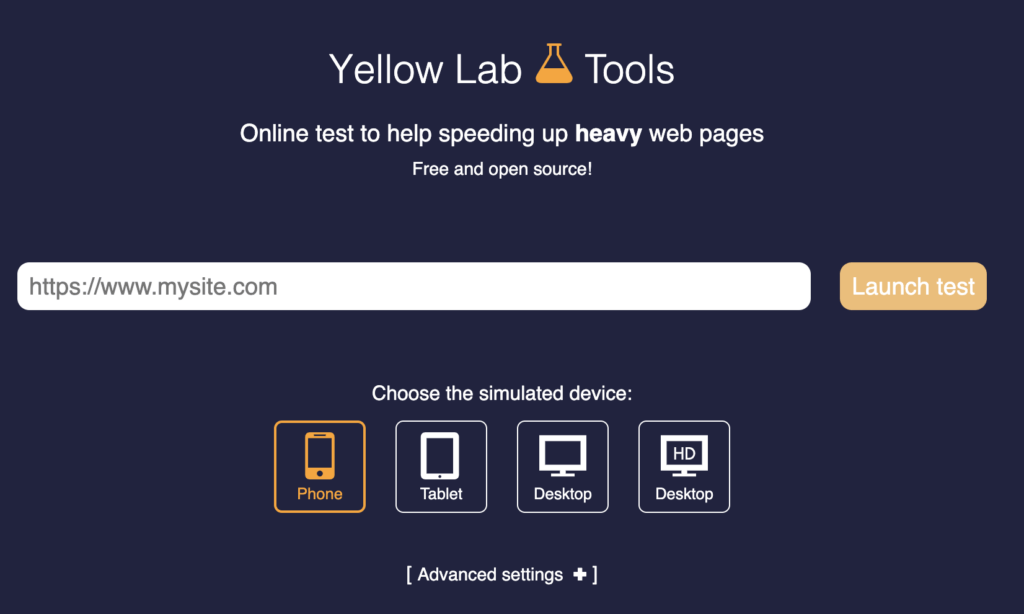

The very simple entry point into Yellow Lab Tools

Yellow Lab Tools is an open source project by Gaël Métais. It allows you to test a webpage (via a URL) and detects front end performance issues with some of the following features:

- Provides results in a language of letter grading that is more immediately understood and rests on fewer assumptions. The results are given as a score out of 100 and a letter grade (A, B, C, D, F etc.), much like people are accustomed to receiving for schoolwork.

- Provides minimal but relevant information for acting on certain items, and allows you to step into more detail in certain sections if needed.

- Likely to broadly reveal front-end issues that would be caught by more advanced front end tests

- Even if the test results are not exactly what you need to act on a web vitals issue, your team or organization will be warmed up to receive the next thing, such as WPT, PageSpeed Insights etc.

A section of a Yellow Lab Tools result for Planet Performance

Features of Yellow Lab Tools, which released a v2 in 2021:

- Put in a URL and go

- Quick results

- Instant Grade and breakdown

- Less special literacy required

- Free and open source

Most users can figure out what makes a page score well or poorly in the Yellow Lab Tools categories, which gets them started on a performance journey. Whether it is a huge image in the header causing issues, or duplicate copies of jQuery on a website, Yellow Lab Tools will reveal some issues clearly enough to start working on.

For example, Yellow Lab Tools gives results as disk size in kilobytes and megabytes rather than as a Core Web Vitals result. Some people have not yet learned the CWV language, but they are primed to understand that a file that is too large will make things slower. Categories of results are given in clear, opinionated language: “Bad CSS” and “Bad JS” are easier to grasp than metrics that require background knowledge to understand.
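To illustrate why file size is such an approachable language, here is a minimal Python sketch that totals page weight by asset type and prints it in kilobytes and megabytes. This is not Yellow Lab Tools’ own code or output format; the function names and the asset list are hypothetical and the sizes are invented.

```python
def human_size(num_bytes):
    """Format a byte count as KB or MB (1 KB = 1024 bytes here)."""
    if num_bytes >= 1024 * 1024:
        return f"{num_bytes / (1024 * 1024):.1f} MB"
    return f"{num_bytes / 1024:.0f} KB"


def weight_by_type(assets):
    """Sum asset sizes per type and return them heaviest first."""
    totals = {}
    for asset_type, size in assets:
        totals[asset_type] = totals.get(asset_type, 0) + size
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical page: (type, size in bytes). All numbers are invented.
assets = [
    ("image", 3_400_000),  # an oversized header image
    ("js", 90_000),        # jQuery
    ("js", 90_000),        # a second copy of jQuery
    ("css", 45_000),
]

for asset_type, total in weight_by_type(assets):
    print(f"{asset_type}: {human_size(total)}")
```

A line such as “image: 3.2 MB” needs no glossary. Someone who has never heard of LCP can still see which part of the page is heavy and guess what to do about it.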

Acceptance is just the beginning

Once a person has accepted the simpler program, they are primed for what comes next and carry fewer preconceptions into it. Once they are comfortable with a test like Yellow Lab Tools, they will be more likely to reach for the next evolution, whether that becomes WebPageTest, CrUX or other performance tools. In the end you are arguably better off giving an incomplete, simpler test that is accepted than one that is fancier but rejected.

I would further add that there seems to me to be little or no harm in an overly positive Yellow Lab Tools result, even if the more complex tests are raising issues. People will still be ready, and arguably more ready, to hear about more complex performance tests after being warmed up to the idea of performance tests at all.

Conclusion

When trying to bring people into the fold of web performance, try to remember the before times: the times before you gained experience with web performance. This is relevant for many smaller organizations and potentially for individual people within a larger organization.

Yellow Lab Tools stands out for its simplicity and makes an excellent tool for an early test in some situations. It could possibly be one of the first things that you try to inform people about web performance at least in terms of sharing test results.

In general my advice is: don’t let perfect be the enemy of the good. May you prosper in your web performance journey, and may the people you help prosper too.

A deep thank you to Kathleen, Eric, Stoyan and Brian for helping me review this article.

Thanks!