Emilie Wilhelm, Head of Marketing at Fasterize

For over 10 years, I have been actively committed to democratizing web performance (webperf). As the author of several publications and studies at Fasterize, I have contributed to raising awareness and sharing knowledge about best practices in digital performance, while demonstrating the direct impact of speed on conversion rates and business success.

As web performance experts, we’re all familiar with the oft-cited examples: Amazon, Walmart, and their gains or losses directly tied to page loading speeds. But while these examples are inspiring, they prompt an essential question: what about your company? Your users, buying cycles, and challenges are unique.

The real question is not whether web performance can influence business; that is well established. It is how to demonstrate that impact in your specific context. How can you measure it, persuade stakeholders, and prioritize the investments needed to maximize your results? And ultimately, how much can web performance influence your conversions?

This is where the distinction between correlation and causation becomes crucial. Raw data can reveal trends, but only rigorous testing can prove that a specific optimization leads to measurable business gains. Leveraging A/B tests specifically designed for web performance, we’ve developed a clear and reliable method to connect technical improvements to tangible results.

Understanding the difference between correlation and causation

Correlation and causation, while related, are fundamentally different concepts. Correlation means two phenomena move together, without implying that one causes the other. Causation, on the other hand, indicates a direct relationship: a change in one variable results in a change in another.

A well-known example illustrates this distinction: increased ice cream sales in summer correlate with a rise in shark attacks. Yet this relationship doesn’t imply causation (at least we hope not!). Both are driven by a third factor, warm weather, which brings more people both to ice cream stands and into the sea. In web performance, confusing the two concepts can be just as misleading. Users who abandon slow-loading pages don’t necessarily do so solely because of speed. Their behavior may also be influenced by factors like site ergonomics, competitive pressure, or content quality.

To prove that speed is a conversion driver, we must move beyond assumptions and apply the scientific method: test, measure, and analyze rigorously.

A/B testing: the gold standard for measuring impact

The key to proving causation lies in A/B testing. Like the A/B tests used to improve features or UX, this controlled experiment compares two versions of a website, except that here the versions differ in web performance:

- An optimized version focused on improving web performance.

- An unaltered version, used as a control.

Using a CDN or an edge worker, these tests can be run accurately and efficiently. Visitors are randomly assigned to one of the two groups, and a cookie keeps them in that group. As a result, both groups are exposed to identical conditions: the same promotions, timeframes, and product availability.
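The assignment logic itself is simple. It is sketched here in Python for readability, although edge workers are usually written in other languages. The cookie name `perf_ab_group`, the group labels, and the 60-day lifetime are hypothetical choices. They are not Fasterize's implementation or any CDN's API. New visitors get a uniformly random group, while returning visitors keep the group stored in their cookie.

```python
import random
from typing import Optional

# Hypothetical cookie name and group labels (assumptions, not a real CDN API).
COOKIE_NAME = "perf_ab_group"
GROUPS = ("optimized", "control")


def assign_group(cookie_value: Optional[str], rng: random.Random) -> tuple[str, bool]:
    """Return (group, needs_cookie) for one incoming request.

    Returning visitors keep the group stored in their cookie, so they see the
    same version on every page and visit; new visitors (or visitors with an
    unrecognized cookie value) are assigned to a group uniformly at random.
    """
    if cookie_value in GROUPS:
        return cookie_value, False
    return rng.choice(GROUPS), True


def set_cookie_header(group: str, max_age_days: int = 60) -> str:
    """Build a Set-Cookie value that pins the visitor to `group`.

    The lifetime should exceed the test duration so that visitors do not
    switch groups mid-test (60 days is an arbitrary example).
    """
    return f"{COOKIE_NAME}={group}; Max-Age={max_age_days * 86400}; Path=/; Secure; HttpOnly"


if __name__ == "__main__":
    rng = random.Random(2024)
    group, needs_cookie = assign_group(None, rng)  # first visit: random group
    if needs_cookie:
        print(set_cookie_header(group))
    print(assign_group("control", rng))  # returning visitor keeps "control"
```

Seeding `random.Random` makes assignments reproducible in tests. In production, an unseeded source would typically be used so that assignment stays unpredictable.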

While powerful, this process demands rigor. Statistical tools such as the chi-squared test indicate whether the difference observed between the groups is larger than chance alone would plausibly produce. Only then can it be attributed to the optimizations. This level of precision turns impressions into concrete evidence.
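To make this concrete, here is a minimal Python sketch of Pearson's chi-squared test on a 2x2 table of converted versus non-converted sessions, without continuity correction. The function name and the session counts in the example are hypothetical, not results from a real test. With one degree of freedom, the p-value can be computed from the standard library via `math.erfc`. If SciPy is available, `scipy.stats.chi2_contingency(table, correction=False)` should give the same statistic.

```python
import math


def chi_squared_2x2(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pearson chi-squared test (no continuity correction) on a 2x2 table of
    converted / not-converted sessions for groups A and B.

    Returns (chi2 statistic, p-value) with 1 degree of freedom.
    Assumes every expected cell count is non-zero.
    """
    observed = [
        [conv_a, n_a - conv_a],
        [conv_b, n_b - conv_b],
    ]
    total = n_a + n_b
    row_totals = [n_a, n_b]
    col_totals = [conv_a + conv_b, total - conv_a - conv_b]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count if conversion were independent of the group.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Hypothetical counts: 20,000 sessions per group,
    # 2.0% conversion in control vs 2.3% in the optimized group.
    chi2, p = chi_squared_2x2(conv_a=400, n_a=20_000, conv_b=460, n_b=20_000)
    print(f"chi2 = {chi2:.2f}, p = {p:.3f}")
```

With these invented counts, the statistic is about 4.28 and the p-value is just under 0.04. At the conventional 5% threshold, that difference would therefore be considered statistically significant.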

Key metrics to track and when to expect results

Web performance optimizations manifest across a range of KPIs, but their impacts unfold at different paces:

- Technical metrics such as TTFB or the Core Web Vitals (LCP, INP and CLS; INP replaced FID as a Core Web Vital in March 2024) react almost instantly. These are the first indicators of improvement.

- User behavior metrics like session duration or bounce rates take a few weeks to stabilize.

- Business outcomes such as conversions or average cart value require a longer timeframe—typically one to two months—to reveal reliable trends.

Premature analysis risks drawing incorrect conclusions. Patience, along with a comprehensive perspective, is critical to accurately measure the impact of optimizations.

In addition, buying cycles can span several visits. Users assigned to the optimized group when the test starts may therefore have begun their journey on the unoptimized site. For this reason, it is important to wait at least a couple of weeks before looking at the results.
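One reason business metrics need more time is statistical power. Conversions are relatively rare and the uplifts involved are often only a few percent, so many sessions are needed before a real difference can be told apart from noise. The sketch below uses the textbook normal-approximation formula for comparing two proportions. The function name, the 2% baseline conversion rate, and the defaults of 5% significance and 80% power are illustrative assumptions, not Fasterize's methodology.

```python
import math
from statistics import NormalDist


def sessions_per_group(baseline_rate: float, relative_uplift: float,
                       alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of sessions needed in EACH group to detect a given
    relative uplift in conversion rate with a two-sided two-proportion z-test."""
    p1 = baseline_rate                          # control conversion rate
    p2 = baseline_rate * (1 + relative_uplift)  # expected optimized rate
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical site converting 2% of sessions.
    for uplift in (0.04, 0.10):
        print(f"+{uplift:.0%} uplift: {sessions_per_group(0.02, uplift):,} sessions per group")
```

Under these assumptions, detecting a +4% relative uplift takes roughly 490,000 sessions per group, compared with about 81,000 for +10%. The smaller the effect, the longer a test must run to accumulate that much traffic.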

Lessons learned from A/B testing

Why web performance impacts are never uniform

The effect of web performance on conversions varies widely, influenced by factors unique to each business:

- Audience demographics: Younger users, accustomed to seamless digital experiences, are less forgiving of slow pages than older audiences.

- Product type: Impulse purchases (fashion, gadgets) are more sensitive to speed than necessity items (car parts, healthcare products), where users are willing to wait.

- Average cart size: Smaller carts benefit more from optimized performance on mobile, whereas high-value purchases see greater impact on desktop, where users deliberate longer.

- Competitive pressure: In fiercely competitive markets, a fast-loading page is critical to prevent users from switching to a competitor.

- Promotional context: During high-stakes periods like Black Friday, every millisecond counts, amplifying the role of web performance in driving conversions.

Web performance’s amplified role during Black Friday

High-activity periods, such as Black Friday or major sales events, highlight the strategic importance of web performance. Every millisecond saved can have a compounding effect on conversions. In these moments, urgency and competition demand smooth, rapid experiences to capture impulsive user behaviors.

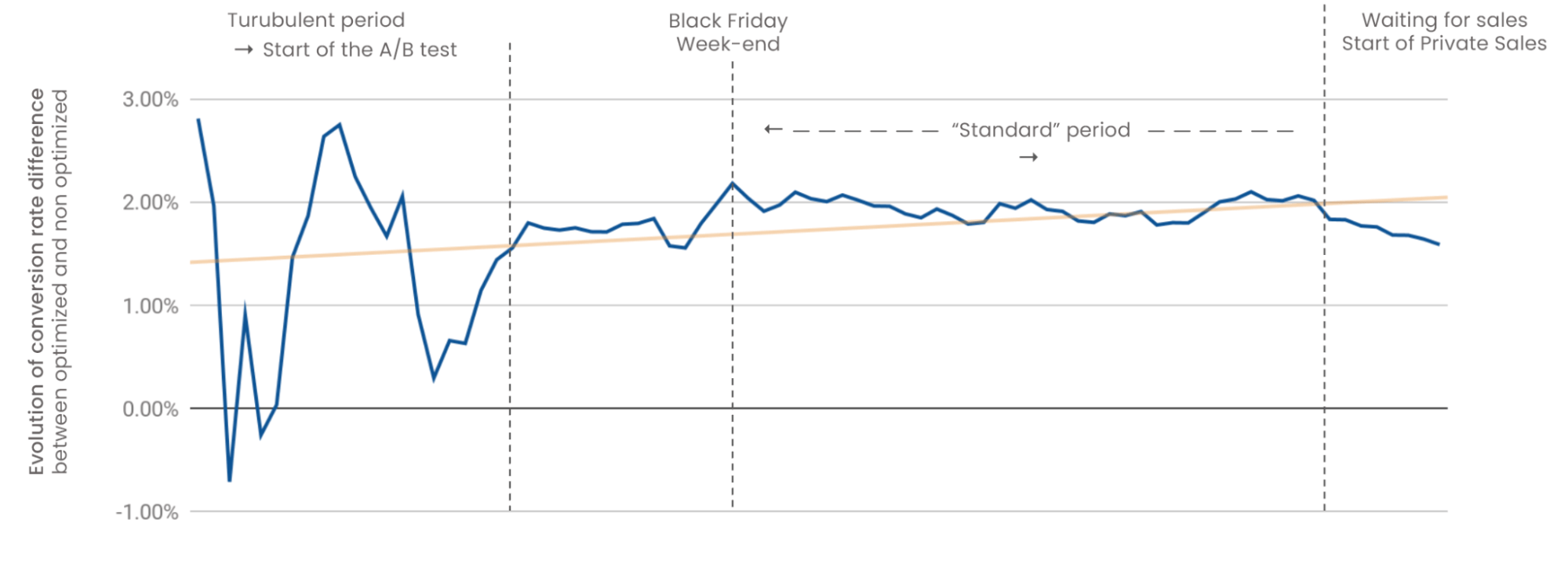

An A/B test conducted during Black Friday illustrates this clearly (see graph above). The difference in conversion rates between the optimized and non-optimized versions peaked during this period. Faced with limited-time offers, consumers were far less tolerant of slow load times. In such scenarios, fast-loading pages become a decisive advantage in converting these highly motivated audiences.

Even outside peak periods, for example during quieter pre-holiday promotions, web performance continues to deliver significant benefits. While users take a more measured approach at these times, a smooth and responsive experience still reduces cart abandonment and increases conversions.

These insights reinforce that web performance isn’t just a tool for high-demand moments—it provides consistent value throughout the year. Acting as an accelerator of immediate conversions during peak times, it also serves as a foundational element in delivering a high-quality user experience year-round.

The absence of a clear correlation with specific metrics

One of the biggest challenges in web performance is the lack of a direct, universal link between individual technical metrics and business KPIs such as conversion rates. Despite extensive analysis, we have not been able to show a strong, consistent correlation between increased conversions and improvements in any single metric or in any combination of metrics. The metrics we examined were TTFB, FCP, Speed Index, LCP, CLS, and even PageSpeed scores.

This doesn’t diminish the importance of web performance. Instead, it highlights the need for a holistic approach. Isolated improvements are not enough to guarantee better user experiences or meaningful business outcomes. For instance, TTFB shows a strong influence in some cases, but this effect is not universal.

Ultimately, causation exists but is shaped by complex interactions among technical metrics, user behavior, and site-specific factors. Longer testing periods and larger datasets are often required to refine this understanding and identify the most effective levers.

The measurable results of our A/B tests

At Fasterize, we’ve conducted dozens of A/B tests to precisely measure the impact of web performance optimizations. The results are compelling:

- 90% of tests demonstrate a positive impact on conversion rates.

- Average uplift: +8%.

- Median uplift: +4%.

By sector:

- Fashion & Beauty: Median uplift of +5.8%.

- Home & Leisure: Median uplift of +8.9%.

- Travel: Median uplift of +3.1%.

These results underscore that while impacts vary across contexts, web performance remains a universal growth driver.
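The uplift figures above are relative changes in conversion rate between the optimized and control groups. The gap between the average (+8%) and the median (+4%) is what one would expect when a few tests produce much larger uplifts than the rest. Such outliers pull the mean up but barely move the median. The sketch below illustrates this with invented numbers, not Fasterize's data. The function name is hypothetical.

```python
from statistics import mean, median


def relative_uplift(cr_optimized: float, cr_control: float) -> float:
    """Relative change in conversion rate of the optimized group vs. control."""
    return (cr_optimized - cr_control) / cr_control


if __name__ == "__main__":
    # One test: 2.3% vs 2.0% conversion is a +15% relative uplift.
    print(f"{relative_uplift(0.023, 0.020):+.0%}")

    # Invented per-test uplifts: one large outlier (+25%) skews the mean.
    uplifts = [-0.01, 0.02, 0.03, 0.04, 0.04, 0.05, 0.06, 0.25]
    print(f"mean {mean(uplifts):+.1%}, median {median(uplifts):+.1%}, "
          f"positive in {sum(u > 0 for u in uplifts) / len(uplifts):.0%} of tests")
```

In this invented series, the single +25% test lifts the mean to +6% while the median stays at +4%.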

Conclusion: prove, communicate, sustain

Web performance transcends technical concerns; it’s a strategic lever that directly influences business results. With rigorous A/B testing and a solid methodology, optimizations can translate into tangible gains.

However, demonstrating impact is just the beginning. The true value of web performance lies in effectively communicating these results to stakeholders, ensuring that investments are prioritized. A/B testing becomes a shared language, aligning technical efforts with strategic priorities.

Once priorities are aligned, sustaining these efforts becomes critical. In a landscape where user expectations and technologies evolve rapidly, maintaining focus on performance is essential. What works today may be insufficient tomorrow. Every millisecond remains crucial—not only to meet technical requirements but also to deliver an optimal user experience and secure competitive advantage.

re: The Absence of a Clear Correlation section (following the time-series plot)

It’s refreshing to see a null result stated so openly and honestly, to wit:

The absence of spin-doctoring, or of reporting only feel-good news, is laudable.

This inspired me to try and think of a possible explanation, either in whole or in part. FWIW, several things sprang to mind.

Users generally care only about response-time performance rather than, say, throughput performance or resource utilization.

One of the things we discovered at Xerox PARC was that user-perceived response time can be around 3 or 4 times longer than the actual measured response time. Psychology lurks behind the curtain.

Time scales on the back-end, such as RDBMS operations or latencies arising from accessing remote third-party sites, are generally orders of magnitude longer than front-end time scales, like TTFB.

The back-end is generally going to be the bottleneck and will therefore dominate the total response time, whether observed or measured by the user. Consequently, performance gains on the front-end are less likely to be significant than even “small” gains on the back-end.
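To put rough numbers on this, assume the back-end and front-end contributions simply add up to the total response time. Real page loads overlap work, so this is only a coarse model. The function name and the 2,000 ms / 100 ms split below are hypothetical.

```python
def fraction_saved(backend_ms: float, frontend_ms: float,
                   backend_gain: float = 0.0, frontend_gain: float = 0.0) -> float:
    """Fraction of total response time saved when the back-end and front-end
    components are reduced by the given fractional gains.

    Assumes the two components simply add up (no overlap or parallelism).
    """
    before = backend_ms + frontend_ms
    after = backend_ms * (1 - backend_gain) + frontend_ms * (1 - frontend_gain)
    return (before - after) / before


if __name__ == "__main__":
    # Hypothetical split: 2,000 ms of back-end time, 100 ms of front-end time.
    print(f"halve the front-end: {fraction_saved(2000, 100, frontend_gain=0.5):.1%}")
    print(f"10% faster back-end: {fraction_saved(2000, 100, backend_gain=0.1):.1%}")
```

Under this split, halving the front-end saves only about 2.4% of the total. A mere 10% back-end gain saves about 9.5%.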

Another, lesser-known aspect pertains to cloud-based applications. To my great surprise, I’ve seen applications scale as well as they possibly could with little effort beyond the initial choice of cloud configuration options.

See for details.

In other words, there were no significant performance improvements to be had for a typical user. What emerged instead was interest from users (e.g., a manager) in maintaining that performance level at lower cost, which is more of a capacity-management objective than a performance goal.

The missing link in my comment is:

https://speakerdeck.com/drqz/how-to-scale-in-the-cloud-chargeback-is-back-baby

“How to Scale in the Cloud: Chargeback is Back, Baby!”