Stoyan (@stoyanstefanov) is a frontend engineer, writer ("JavaScript Patterns", "Object-Oriented JavaScript"), speaker (JSConf, Velocity, Fronteers), toolmaker (Smush.it, YSlow 2.0) and a guitar hero wannabe.

After removing all the extra HTTP requests you possibly can from your waterfall, it’s time to make sure that those that are left are as small as they can be. Not only does this make your pages load faster, it also helps you save on the bandwidth bill. Your weapons for fighting overweight components include: compression and minification of text-based files such as scripts and styles, recompression of some downloadable files, and zero-body components. (A follow-up post will talk about optimizing images.)

Gzipping plain text components

Hands down the easiest and at the same time quite effective optimization – turning on gzipping for all plain text components. It’s almost a crime if you don’t do it. Doesn’t “cost” any development time, just a simple flip of a switch in Apache configuration. And the results could be surprisingly pleasant.

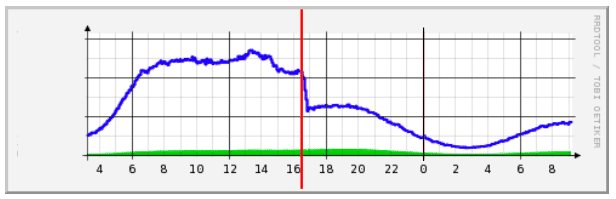

When Bill Scott joined Netflix, he noticed that gzip was not on. So they turned it on. And here’s the result – the day they enabled it, the outbound traffic pretty much dropped in half (slides).

Gzip FAQ

- How much improvement can you expect from gzip?

- On average – 70% reduction of the file size!

- Any drawbacks?

- Well, there’s a certain cost associated with the server compressing the response and the browser uncompressing it, but it’s negligible compared to the benefits you get

- Any browser quirks?

- Sure, IE6, of course. But only in IE6 service pack 1; it was fixed after that. You can boldly ignore this edge case, but if you’re extra paranoid you can disable gzip for this user agent

- How to tell if it’s on?

- Run YSlow/PageSpeed and they’ll warn you if it’s not on. If you don’t have any of those tools, just look at the HTTP headers with any other tool, e.g. Firebug or webpagetest.org. You should see the header:

Content-Encoding: gzip

provided, of course, that your browser claimed it supports compression by sending the header:

Accept-Encoding: gzip, deflate

- What types of components should you gzip?

- All text components:

- javascripts

- css

- plain text

- html, xml, including any other XML-based format such as SVG, also IE’s .htc

- JSON responses from web service calls

- anything that’s not a binary file…

You should also gzip @font-face files such as EOT, TTF and OTF, with the exception of WOFF, which is already compressed. On average there’s about 40% to be won with font files.
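To sanity-check these figures against your own files, here is a minimal Python sketch that measures how much gzip saves on a given payload. The function name `gzip_savings` and the sample stylesheet are made up for illustration; real savings depend on the content, and a web server may use a different compression level.

```python
import gzip


def gzip_savings(data: bytes, level: int = 6) -> float:
    """Return the fraction of bytes saved by gzipping `data` (0.0 means no gain)."""
    if not data:
        return 0.0
    compressed = gzip.compress(data, compresslevel=level)
    return 1 - len(compressed) / len(data)


if __name__ == "__main__":
    # Hypothetical, highly repetitive stylesheet; real files compress less well.
    sample = b"body { margin: 0; padding: 0; font-family: sans-serif; }\n" * 200
    print(f"saved {gzip_savings(sample):.0%} of {len(sample)} bytes")
```

Running it over the text files you actually serve (read in binary mode) gives a quick estimate of what turning gzip on would buy you.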

How to turn on gzipping

Ideally you need control over the Apache configuration. If not full control, at least most hosting providers will offer you the ability to tweak configuration via .htaccess. If your host doesn’t, well, change the host.

So just add this to .htaccess:

AddOutputFilterByType DEFLATE text/html text/css text/plain text/xml application/javascript application/json

If you’re on Apache before version 2 or your unfriendly host doesn’t allow any access to configuration, not all is lost. You can make PHP do the gzipping for you. It’s not ideal, but the gzip benefits are so pronounced that it’s worth a try. This article describes a number of different options for gzipping when dealing with uncooperative hosts.

Rezipping

As Billy Hoffman discovered, there’s potential for file size reduction with common downloadable files, which are actually zip files in disguise. Such files include:

- Newer MS Office documents – DOCX, XLSX, PPTX

- Open Office documents – ODT, ODP, ODS

- JARs (Java Applets, anyone?)

- XPI Firefox extensions

- XAP – Silverlight applications

These ZIP files in disguise are usually not compressed with the maximum compression. If you allow such downloads from your website, consider recompressing them beforehand with maximum compression.

There could be anywhere from 1 to 30% size reduction to be won, definitely worth the try, especially since you can do it all on the command line, as part of the build process, etc. (re)Compress once, save bandwidth and offer faster downloads every time 😉
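As a rough illustration of rezipping in a build step, the sketch below copies every entry of a ZIP-based file into a new archive using the maximum deflate level, using Python’s standard `zipfile` module. The function name `rezip` and the `keep_stored` parameter are assumptions made for this example. The `mimetype` entry is left uncompressed because OpenDocument-style packages expect it that way; other formats may have their own constraints, so test the output with the application that consumes it.

```python
import zipfile


def rezip(src_path, dst_path, keep_stored=("mimetype",)):
    """Rewrite a ZIP-based file (DOCX, ODT, JAR, XPI, ...) at maximum compression."""
    with zipfile.ZipFile(src_path) as src, zipfile.ZipFile(dst_path, "w") as dst:
        for old in src.infolist():  # keep the original entry order
            new = zipfile.ZipInfo(old.filename, date_time=old.date_time)
            new.external_attr = old.external_attr  # preserve permission bits
            stored = old.is_dir() or old.filename in keep_stored
            dst.writestr(
                new,
                src.read(old.filename),
                compress_type=zipfile.ZIP_STORED if stored else zipfile.ZIP_DEFLATED,
                compresslevel=9,  # ignored for stored entries
            )
```

A call such as `rezip("report.docx", "report.min.docx")` (hypothetical file names) produces the recompressed copy; comparing the two file sizes shows whether the step is worth keeping.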

15% uncompressed traffic

Tony Gentilcore of Google reported his findings that a significant chunk of their traffic is still sent uncompressed. Digging into it, he realized there are a number of anti-virus programs and firewalls that meddle with the browser’s Accept-Encoding header, changing it into the likes of:

Accept-Encoding: xxxx, deflxxx

Accept-Enxoding: gzip, deflate

Since the mangled header no longer asks for gzip, the server will decide that the browser doesn’t support it and send an uncompressed response. And why would the anti-virus program do it? Because it doesn’t want to deal with decompression in order to examine the content, probably so as not to slow down the experience. In doing so it actually hurts the user to a greater extent.

So compression is important, but unfortunately it’s not always present. That’s why minification helps: not only because compressed minified responses are even smaller, but because sometimes there is no compression despite your best efforts.
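To see why a mangled header turns compression off, here is a simplified Python sketch of the check a server might perform before gzipping a response. The function name `accepts_gzip` is hypothetical, and real servers implement fuller content negotiation (wildcards, other codings), so treat this as an approximation rather than how Apache actually does it.

```python
def accepts_gzip(request_headers):
    """Return True only if a well-formed Accept-Encoding header asks for gzip."""
    for name, value in request_headers.items():
        if name.strip().lower() != "accept-encoding":
            continue  # a renamed header such as "Accept-Enxoding" is simply ignored
        for item in value.split(","):
            coding, _, params = item.partition(";")
            if coding.strip().lower() != "gzip":
                continue  # "xxxx" or "deflxxx" never match
            # "gzip;q=0" means the client explicitly refuses gzip.
            q = params.replace(" ", "").lower()
            if q.startswith("q="):
                try:
                    return float(q[2:]) > 0
                except ValueError:
                    return False
            return True
    return False
```

With this check, `{"Accept-Encoding": "gzip, deflate"}` qualifies for compression, while both mangled variants shown above fall through to the uncompressed path.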

Minification

Minification means stripping code from your programs that is not essential for execution. That covers removing comments, whitespace, etc. from styles and scripts, but also renaming variables with shorter names, and various other optimizations.

This is best done with a tool, of course, and luckily there are a number of tools to help.

Minifying JavaScript

Some of the tools to minify JavaScript include:

- YUICompressor

- Dojo ShrinkSafe

- Packer

- JSMin

- … and the new kid on the block – Google’s Closure compiler

How much size reduction can you expect from minification? To answer that I ran jQuery 1.3.2 through all the tools mentioned above (using hosted versions) and compared the sizes before/after and with/without gzipping the result of minification.

The table below lists the results. Sizes are in bytes. All the % figures are % of the original, so smaller is better. 29% means the file was reduced to 29% of its original size, or a saving of 71%.

| File | size | size, gzipped | % of original | gzipped, % of original |
|---|---|---|---|---|
| original | 120619 | 35088 | 100.00% | 29.09% |
| closure-advanced | 49638 | 17583 | 41.15% | 14.58% |
| closure | 55320 | 18657 | 45.86% | 15.47% |
| jsmin | 73690 | 21198 | 61.09% | 17.57% |
| packer | 39246 | 18659 | 32.54% | 15.47% |
| shrinksafe | 69516 | 22105 | 57.63% | 18.33% |
| yui | 57256 | 19677 | 47.47% | 16.31% |

As you can see, gzipping alone gives you about 70% savings, minification alone cuts script sizes by roughly 40 to 67% depending on the tool, and both combined (minifying then gzipping) can make your scripts up to 85% leaner. Verdict: do it. The concrete tool you use probably doesn’t matter all that much; pick anything you’re comfortable with and run it before deployment (or better, automatically during a build process).
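For anyone who wants to reproduce the percentage columns, the arithmetic is just each size divided by the original size. A small Python sketch follows; the helper names are made up, and the byte counts are taken from the table.

```python
ORIGINAL = 120619  # bytes, unminified and uncompressed jQuery 1.3.2


def percent_of_original(size, original=ORIGINAL):
    """Size as a percentage of the original file, rounded as in the table."""
    return round(100 * size / original, 2)


def savings(size, original=ORIGINAL):
    """Percentage of the original file that was shaved off."""
    return round(100 - 100 * size / original, 2)


if __name__ == "__main__":
    print(percent_of_original(35088))  # gzip alone: 29.09, as in the table
    print(percent_of_original(17583))  # closure-advanced + gzip: 14.58
    print(savings(17583))              # the "85% leaner" figure: 85.42
```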

Minifying CSS

In addition to the usual stripping of comments and whitespace, more advanced CSS minification could include, for example:

// before

#mhm {padding: 0px 0px 0px 0px;}

// after

#mhm{padding:0}

// before

#ha{background: #ff00ff;}

// after

#ha{background:#f0f}

//...

A CSS minifier is much less powerful than a JS minifier. It cannot rename properties, and it cannot reorder them because the order matters; text-decoration:underline, for example, cannot get any shorter than that.
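To give a feel for what a regular-expression-based CSS minifier does, here is a deliberately naive Python sketch covering the transformations shown above. It is not how YUI Compressor or any other tool mentioned here is implemented. The function name `minify_css` is made up, and the rules are simplistic enough to mangle unusual input, for example CSS hacks, strings containing comment markers, or an ID selector that happens to look like a hex color.

```python
import re


def minify_css(css):
    """Naive CSS minifier: comments, whitespace, zero units, short hex colors."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)   # strip comments
    css = re.sub(r"\s+", " ", css)                     # collapse whitespace runs
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)        # no spaces around punctuation
    css = re.sub(r":\s+", ":", css)                    # no space after a colon
    css = css.replace(";}", "}")                       # last semicolon is optional
    # 0px, 0em, 0pt -> 0 (but leave 10px and 1.0em alone)
    css = re.sub(r"(?<![\w.#-])0(?:px|em|pt)\b", "0", css)
    # margin/padding with all-zero values -> a single 0
    css = re.sub(r"\b(margin|padding):0(?: 0){1,3}(?=[;}])", r"\1:0", css)
    # #ff00ff -> #f0f when each pair of hex digits repeats
    css = re.sub(
        r"#([0-9a-f])\1([0-9a-f])\2([0-9a-f])\3(?=[;} ])",
        r"#\1\2\3",
        css,
        flags=re.I,
    )
    return css.strip()


if __name__ == "__main__":
    print(minify_css("#mhm {padding: 0px 0px 0px 0px;}"))
    print(minify_css("#ha{background: #ff00ff;}"))
```

Even this toy version shows the limits described above: everything it does is local text rewriting, with no renaming and no reordering.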

There aren’t a lot of CSS minifiers, but here are a few I tested:

- YUI compressor – yes, the same YUI compressor that does JavaScript minification. I’ve actually ported the CSS minification part of it to JavaScript (it’s in Java otherwise) some time ago. There’s even an online form you can paste into to test. The CSS minifier is regular expression based.

- Minify is a PHP-based JS/CSS minification utility started by Ryan Grove. The CSS minifier part also uses regular expressions; I have the feeling it’s also based on YUICompressor, at least initially.

- CSSTidy – a parser and an optimizer written in PHP, but also with a C version for a desktop executable. There’s also a hosted version. It’s probably the most advanced optimizer in the list; being a parser, it has a deeper understanding of the structure of the stylesheets.

- HTML_CSS from PEAR – not exactly an optimizer but more of a general purpose library for creating and updating stylesheets server-side in PHP. It can be used as a minifier, by simply reading, then printing the parsed structure, which strips spaces and comments as a side effect.

Trying to get an average figure of the potential benefits, I ran these tools on all stylesheets from csszengarden.com, collected simply like:

$urlt = "http://csszengarden.com/%s/%s.css";
for ($i = 1; $i < 214; $i++) {
  $id = str_pad($i, 3, "0", STR_PAD_LEFT);
  $url = sprintf($urlt, $id, $id);
  file_put_contents("$id.css", file_get_contents($url));
}

3 files gave a 404, so I ran the tools above on the remaining 210 files. CSSTidy ran twice – once with its safest settings (which even keep comments in) and then with the most aggressive. The “safe” way to use CSSTidy is like so:

// dependencies, instance
include 'class.csstidy.php';
$css = new csstidy();
// options
$css->set_cfg('preserve_css', true);
$css->load_template('high_compression');
// parse
$css->parse($source_css_code);
// result
$min = $css->print->plain();

The aggressive minification is the same only without setting the preserve_css option.

Running Minify is simple:

// dependencies, instance
require 'CSS.php';
$minifier = new Minify_CSS();
// minify in one shot
$min = $minifier->minify($source_css_string_or_url);

As for PEAR::HTML_CSS, since it’s not a minifier, you only need to parse the input and print the output.

require 'HTML/CSS.php';
$options = array(
  'xhtml' => false,
  'tab' => 0,
  'oneline' => true,
  'groupsfirst' => false,
  'allowduplicates' => true,
);
$css = new HTML_CSS($options);
$css->parseFile($input_filename);
$css->toFile($output_filename);
// ... or alternatively if you want the result as a string
// $minified = $css->toString();

So I ran those tools on the 200+ CSSZenGarden files. The full table of results is here; below are just the averages:

| | Original | YUI | Minify | CSSTidy-safe | CSSTidy-small | PEAR |
|---|---|---|---|---|---|---|
| raw | 100% | 68.18% | 68.66% | 84.44% | 63.29% | 74.60% |
| gzipped | 30.36% | 19.89% | 20.74% | 28.36% | 19.44% | 20.20% |

Again, the numbers are percentages of the original, so smaller is better. As you can see, on average gzip alone gives you a 70% size reduction. Minification is not as successful as with JavaScript: here even the best tool cannot reach a 40% reduction (for JS it was usually over 50%). Nevertheless, gzip plus minification gives you a reduction of about 80% on average. Verdict: do it!

An important note here is that in CSS we deal with a lot of hacks. Since the browsers have parsing issues (which is what hacks often exploit), what about a poor minifier? How safe are the minifiers? Well, that’s a subject for a separate study, but I know I can at least trust the YUICompressor; after all, it’s used by hundreds of Yahoo! developers daily and probably thousands of non-Yahoos around the world. PEAR’s HTML_CSS library also looks pretty safe because it has a simple parser that seems to tolerate all kinds of hacks. CSSTidy also claims to tolerate a lot of hacks, but given that the last version is two years old (maybe new hacks have surfaced meanwhile) and the fact that it’s the most intelligent optimizer (knows about values, colors and so on), it should be approached with care.

204

Let’s wrap up this lengthy posting with an honorable mention of the 204 No Content response (blogged before). It’s the world’s smallest component, the one that has no body and a Content-Length of 0.

Often people use 1×1 GIFs for logging and tracking purposes and other types of requests that don’t need a response. If you do this, you can return a 204 status code and no response body, only headers. Look no further than Google search results with your HTTP sniffer on to see examples of 204 responses.

The way to send a 204 response from PHP is simply:

header("HTTP/1.0 204 No Content");
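The same idea works outside PHP. As a hedged illustration, here is a minimal Python WSGI application that records a tracking hit and answers with 204 and an empty body. The names `tracking_beacon` and `hits` are invented for this sketch, and a real logger would write somewhere more durable than an in-memory list.

```python
hits = []  # stand-in for a real log; hypothetical, in-memory only


def tracking_beacon(environ, start_response):
    """WSGI app for logging requests: note the hit, reply 204 with no body."""
    hits.append(environ.get("QUERY_STRING", ""))
    start_response("204 No Content", [])  # headers only, nothing to download
    return []


if __name__ == "__main__":
    # Serve on a local port for manual testing (port 8000 is an arbitrary choice).
    from wsgiref.simple_server import make_server

    make_server("127.0.0.1", 8000, tracking_beacon).serve_forever()
```

Pointing an image or script beacon at such an endpoint gives you the log entry without shipping even a 1×1 GIF back to the browser.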

A 204 response saves just a little bit but, hey, every little bit helps.

And remember the mantra: every extra bit is a disservice to the user 🙂

Thank you for reading!

Stay tuned for the next article continuing the topic of reducing the component sizes as much as possible.