Éric Daspet (@edasfr) is a web consultant in France. He wrote about PHP, founded Paris-Web conferences to promote web quality, and is now pushing performance with a local user group and a future book.

Disclaimer: The following article is a popularization. Some TCP details are omitted or simplified.

My Internet connection is really fast!

Talking about front-end performance in France is a bit difficult. French ISPs have advertised for years that DSL lines can reach 24Mb/s. Why even bother with website performance if you think everyone has 24Mb/s available?

Truth is, only a few people have that speed but, to be fair, most of us have a good solid 2 to 5Mb/s and nearly everyone has at least a 1Mb/s DSL line. Still, bandwidth doesn’t help: even at 24Mb/s, web pages unfortunately don’t load instantaneously.

What really kills us is latency (and badly written websites).

Latency?

Bandwidth is how much data you can transfer at once (the number of lanes on the road). Latency is how long a byte of data takes to travel end to end (the length of the road and the speed of the cars). Here we will talk about the round trip time: the latency to travel back and forth.

Latency (round trip time) depends mainly on the distance between you and your peers. Take that distance, divide it by the speed of light, divide again by 66% (light is slower in optical fiber), and multiply by two for the trip back and forth. Then add about 10 to 20ms for your hardware, your ISP infrastructure, and the web server hardware and network. This gives the minimal latency you may hope for (but never reach):

Latency (round trip) = 2 x (distance) / (0.66 x speed of light) + 20ms
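To make the formula concrete, here is a minimal Python sketch. The function name and the sample distances are mine, chosen for illustration only; the 66% factor and the 20ms overhead come from the formula above.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, in km/s
FIBER_FACTOR = 0.66                # light in fiber: about 66% of that speed
OVERHEAD_S = 0.020                 # upper estimate for hardware and ISP overhead


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on the round trip time, in milliseconds."""
    one_way_s = distance_km / (FIBER_FACTOR * SPEED_OF_LIGHT_KM_S)
    return (2 * one_way_s + OVERHEAD_S) * 1000


if __name__ == "__main__":
    # Hypothetical distances, not measurements of any real route.
    for distance_km in (500, 1000, 6000):
        print(f"{distance_km} km: {min_round_trip_ms(distance_km):.1f} ms")
```

A server 1000 km away cannot answer in much less than 30ms, and one 6000 km away in much less than 80ms, whatever your bandwidth is.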

In France the latency of our usual DSL lines goes from 30ms (French websites and CDNs) to 60-70ms (big players in Europe). We can expect 100 to 200ms for a US website with no relay in Europe.

Reading Yahoo!’s blog, it seems that France has better figures than most other countries, so please expect worse than my figures (I am an exception, you too, probably).

Badly written Wi-Fi captive portals and 3G phone networks usually have an added tax of 100ms, sometimes more. VPN, bad proxies, antivirus software, badly written portals and badly set up internal networks may also noticeably increase your latency.

Big companies have their own private “serious” direct connection to the Internet. They also often have (at least in France) networks with filtering firewalls, complex architecture between the head office and branch offices, and sometimes overloaded switches and routers. You can expect an added tax of 50ms to 250ms compared to a simple DSL line.

60ms is really small, so latency isn’t so important, is it?

Round trip time is a primary concern. Take a look at the waterfall for fnac.com (our French Barnes&Noble equivalent): the most noticeable color is green (waiting), not blue (downloading), and the average used bandwidth is 31Kb/s on a line able to do 1000x this figure. Mostly, your browser waits; it waits because of the latency. The only long blue item is a flash animation.

Sequence of HTTP requests

When you make a request, you have to wait a few milliseconds for the server to generate the response, plus a few more for the request and its response to travel back and forth. Each time you perform a request, you will have to wait one round trip time.

Our main free newspaper’s website, 20minutes, needs 243 requests. LeMonde, one of our main paid newspapers, needs 269 requests. Say you will have 250 requests on some websites you may visit frequently. Microsoft Internet Explorer 7 has two parallel download queues, so that’s 125 requests each. With a standard round trip time of 60ms we are guaranteed to wait at least 7.5 seconds before the page fully loads. Then we have to add the time needed to download and process the files themselves.
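The arithmetic behind those 7.5 seconds can be sketched in a few lines of Python. This is a simplified model with a hypothetical function name: it assumes requests are spread evenly over the queues and that each request costs exactly one round trip, ignoring server time and download time.

```python
import math


def min_wait_seconds(requests: int, parallel_queues: int, rtt_ms: float) -> float:
    """Minimum time spent waiting on round trips alone, in seconds."""
    # Each queue handles its share of the requests one after another.
    requests_per_queue = math.ceil(requests / parallel_queues)
    return requests_per_queue * rtt_ms / 1000


if __name__ == "__main__":
    # 250 requests, 2 queues (IE7), 60ms round trip time.
    print(min_wait_seconds(250, 2, 60))
```

With the same 250 requests, a browser with 6 queues would still wait at least 42 round trips, or about 2.5 seconds at 60ms.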

TCP before HTTP

TCP is the protocol we use to connect to a web server and then send it our request. It’s like when you’re chatting on the phone: you never say straight away what you called to say, you first say “hello”, wait for your peer to say “hello”, then ask a polite “what’s up?” and wait for an answer (that you probably won’t even listen to but you will wait for it anyway).

On the Internet, this courtesy is the TCP handshake. TCP sends a “SYN” in place of “hello”, and gets a “SYN-ACK” back as an answer. The more latency you have, the longer this initialization takes.

If we opened a new TCP connection for each HTTP request, we would add another 7.5s to the 7.5s we calculated before. That would be unacceptable.

As in your phone chat, once the courtesy is done you may either ask several questions in a row on the same call, or redial for each question with a new “hello” each time (but this is really annoying, both on the phone and on the web). Asking several questions over the same connection is an HTTP feature called “keep-alive”.

At least in France, servers without keep-alive are not rare, because keep-alive increases server load in some situations. Some rare websites have no keep-alive at all. One of our two examples has an intermediate situation: out of 243 requests it serves 27 of them from its own servers with no keep-alive. Considering IE7 with 2 download queues and a latency of 70ms, that is a loss of about 1 second.

Even with keep-alive, because browsers do not parallelize perfectly, TCP connection times may add up and significantly delay the load time.
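Here is a rough Python sketch of that handshake tax. It is my own simplification, not a measurement: the function name is hypothetical, every new connection is assumed to cost one extra round trip (SYN + SYN-ACK), and connections are assumed to be spread evenly over the download queues.

```python
import math


def handshake_penalty_seconds(new_connections: int, parallel_queues: int, rtt_ms: float) -> float:
    """Extra waiting time caused by TCP handshakes, in seconds."""
    # Handshakes in the same queue happen one after another.
    handshakes_per_queue = math.ceil(new_connections / parallel_queues)
    return handshakes_per_queue * rtt_ms / 1000


if __name__ == "__main__":
    # 27 requests without keep-alive, 2 queues (IE7), 70ms round trip time.
    print(handshake_penalty_seconds(27, 2, 70))
    # No keep-alive at all: 250 requests, 2 queues, 60ms round trip time.
    print(handshake_penalty_seconds(250, 2, 60))
```

The first case gives a bit less than one second; the second gives the extra 7.5 seconds mentioned earlier.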

DNS before TCP

That’s not all. Before saying “hello” to your friend on the phone, you have to dial his phone number. On the Internet, the equivalent is the IP address. Either your browser performed a request to the same domain a few seconds before and it may reuse the same result, or it has to perform a DNS request. The answer may be in your ISP cache (cheap) or the request may need to go to a distant server (expensive if the domain name server is far away).

For each TCP connection, you will have to wait again, for a time that depends on the latency: latency to your ISP if the result is in your ISP cache, latency to the DNS server if not.

You don’t check your friend’s number in a paper phone book ten times per hour. Your browser doesn’t either. But again, you may use multiple domains and your browser does not parallelize perfectly. DNS requests may add a delay at the wrong time, for example while fetching a blocking JavaScript file or style sheet, and add to the total page load time.

But that’s not all! DNS often uses the UDP protocol. UDP is a simple “quick and cheap” request/response protocol, with no need to establish a connection first as TCP does. However, when a response weighs over 512 bytes, the server may either send a larger response (EDNS specification) or ask the client to retry over TCP. Large DNS responses used to be rare, but DNS now has a security extension (DNSSEC) that requires larger responses.

The problem is that many badly configured firewalls still block DNS responses of more than 512 bytes. A few others will block the UDP fragmentation needed for responses of more than 1.5KB (UDP fragmentation is a way to send the response in multiple UDP packets, as each one is limited in size). In short: you may well have a UDP DNS request first, then a fallback to TCP.

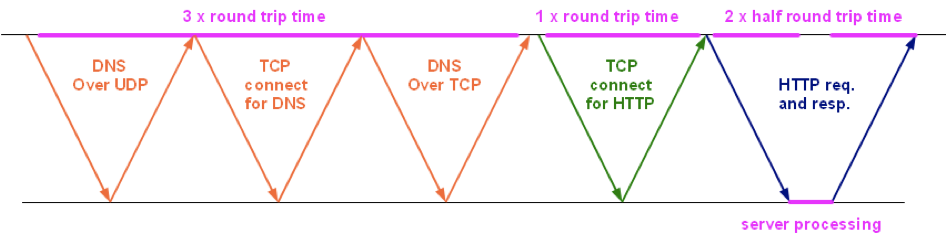

If that happens, the client first requests in UDP, the server answers “please go on TCP”, client opens a TCP connection (SYN + SYN-ACK) and then asks again. In place of one round trip time, we now have three.
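Counting the round trips can be written down as a tiny Python model. The function names are hypothetical and the model only counts network round trips to the DNS server; it ignores caches, retries, and server processing time.

```python
def dns_round_trips(tcp_fallback: bool) -> int:
    """Number of round trips needed to resolve one name."""
    udp_query = 1           # request in UDP, answer (or "please go on TCP")
    if not tcp_fallback:
        return udp_query
    tcp_handshake = 1       # SYN + SYN-ACK
    tcp_query = 1           # the same question asked again over TCP
    return udp_query + tcp_handshake + tcp_query


def dns_lookup_ms(rtt_to_dns_ms: float, tcp_fallback: bool) -> float:
    """Time spent on the lookup, in milliseconds."""
    return dns_round_trips(tcp_fallback) * rtt_to_dns_ms


if __name__ == "__main__":
    # Hypothetical 60ms round trip time to the DNS server.
    print(dns_lookup_ms(60, tcp_fallback=False))
    print(dns_lookup_ms(60, tcp_fallback=True))
```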

TCP again, the useless bandwidth

Let’s say you’ve taken care of all the previous problems. Now you still need time for the downloads themselves. You won’t be able to divide the total web page size by your bandwidth to know the time it will take. You have a shiny 8Mb/s DSL line? You will hardly use more than a fifth of it. In our two examples 20minutes and LeMonde, we used no more than 75Kb/s from a 1.5Mb/s DSL line.

The main reason is that neither the server nor the client knows how much bandwidth they can use. The available bandwidth varies with the client connection, the other downloads it has already started, the network route that is being used (which may change from packet to packet), and so on.

To work around this problem TCP sends a tiny piece of data the first time, waits for the acknowledgement of correct reception, then increases the size it will send next time. The size of the data it may send on the network, waiting for acknowledgment, is called the congestion window.

Data is sent in multiple TCP segments, limited in size (about the size of an IP packet for our context, between 1.4KB and 1.5KB). At first, the server can send a maximum of 3 segments before it has to stop and wait for an ACK. For each ACK, it increases the window size by one segment and can send two new segments (one to replace the one acknowledged and one to fill the new window size). This basically means that the window doubles each round trip until we reach the maximum one peer is willing to accept, reach the maximum size of the window (64KB), or lose a packet in the network. In the latter case, TCP halves the window and starts again, slower.

As a consequence, your download speed is directly dependent on your round trip time (the latency). The higher the latency, the longer it takes to reach your maximum download speed and the lower that maximum will be. For a 64KB maximum window, if your round trip needs 128ms, you get at most 64KB per 128ms, about 4Mb/s: it will never saturate a 5Mb/s DSL line.

It’s even worse! To avoid having too many ACK segments to process, the client holds back its ACK for some time. If a second segment comes in, it sends a single ACK for both segments at once. This basically means that the client will send an ACK for every two segments.

Fewer ACKs also means that the congestion window and the number of segments per round trip grow more slowly (see the numbers at the right of the illustrations, that’s the congestion window in number of TCP segments, for each round trip).
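The ceiling is simply the window size divided by the round trip time. A short Python sketch, with a hypothetical function name and taking 1KB as 1024 bytes and 1Mb as one million bits (both are my assumptions):

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Best possible throughput when only one window can be in flight per round trip."""
    bits_per_round_trip = window_bytes * 8
    return bits_per_round_trip / (rtt_ms / 1000) / 1_000_000


if __name__ == "__main__":
    window = 64 * 1024  # 64KB maximum window
    for rtt_ms in (30, 60, 128, 200):
        print(f"{rtt_ms} ms: {max_throughput_mbps(window, rtt_ms):.2f} Mb/s")
```

At 128ms the ceiling is about 4.1Mb/s; at 200ms it falls to about 2.6Mb/s, however fast the line is.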

What is annoying is that you will reach this maximum download speed only after 9 round trips and having downloaded about 250KB of data. Most content is smaller than that, and will be more dependent on your round trip time than on your available bandwidth. Small files (90% of the web) are downloaded faster on a low latency network than on a high bandwidth network.

| # of round trips | window size in segments | maximum # of segments sent | size downloaded | instantaneous speed (60ms RTT) |
|---|---|---|---|---|
| 1 | 3 | 3 | 4.5 KB | 600 Kb/s |
| 2 | 4 | 6 | 9 KB | 800 Kb/s |
| 3 | 6 | 12 | 18 KB | 1.2 Mb/s |
| 4 | 9 | 21 | 31.5 KB | 1.8 Mb/s |
| 5 | 13 | 33 | 49.5 KB | 2.6 Mb/s |
| 6 | 19 | 51 | 76.5 KB | 3.8 Mb/s |
| 7 | 28 | 78 | 117 KB | 5.6 Mb/s |
| 8 | 42 | 120 | 180 KB | 8.4 Mb/s |
| 9 | 44 | 164 | 246 KB | 8.8 Mb/s |

A simple 10KB image will need 3 round trips. jQuery (77KB) will need 7 round trips. At 60 to 100ms per round trip, it is easy to understand that latency is far more important than anything else. Keep most of your content below 4.5KB.
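To look up other file sizes, here is a small Python sketch that reads the "size downloaded" column of the table above. The values are copied from that column, not recomputed; the function names are hypothetical, and the time estimate counts round trips only, on an already open connection.

```python
from typing import Optional

# Cumulative KB downloaded after each round trip, copied from the table.
CUMULATIVE_KB = [4.5, 9, 18, 31.5, 49.5, 76.5, 117, 180, 246]


def round_trips_needed(size_kb: float) -> Optional[int]:
    """Round trips needed to download a file, or None if the table does not cover it."""
    for round_trip, downloaded_kb in enumerate(CUMULATIVE_KB, start=1):
        if size_kb <= downloaded_kb:
            return round_trip
    return None


def download_wait_ms(size_kb: float, rtt_ms: float) -> Optional[float]:
    """Time spent on round trips alone, in milliseconds."""
    trips = round_trips_needed(size_kb)
    return None if trips is None else trips * rtt_ms


if __name__ == "__main__":
    for size_kb in (4.5, 10, 77):
        print(size_kb, round_trips_needed(size_kb), download_wait_ms(size_kb, 60))
```

At 60ms per round trip, the 77KB file costs 420ms of waiting while a 4.5KB file costs 60ms.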

So, my bandwidth is useless? What can I do?

Bandwidth helps, but your main focus should be on latency. Now you know why. Mike Belshe has a few astonishing graphs about round trip time, bandwidth and web page load time. It would be useless to mirror them here. Go check them now!

This article is already too long so I won’t offer solutions, but here is one: some people are cheating.