Sebastiaan Deckers (@sebdeckers) is a freelance web developer working with startups in Singapore. He is a frequent speaker and co-organiser in the SingaporeJS community.

TL;DR Without Cache Digests there is no clear-cut performance win for HTTP/2 Server Push over HTTP/1 Asset Bundling. However, a Bloom filter based technique, called Cache Digests, makes Server Push more efficient than Asset Bundling in both latency and bandwidth.

| | H1 Asset Bundling | H2 Server Push | H2 Server Push + Cache Digest |
|---|---|---|---|
| Latency | | | |
| Cold Cache | | | |
| Warm Cache | | | |
| Lukewarm Cache | | | |
| Bandwidth | | | |
| Cold Cache | | | |
| Warm Cache | | | |
| Lukewarm Cache | | | |

- H1 and H2 are HTTP/1.x and HTTP/2 respectively.

- Latency means the minimal number of HTTP request/response round trips.

- Bandwidth means the minimal amount of transmitted data.

- Cold Cache means no assets exist in the browser cache.

- Warm Cache means all assets are cached by the browser.

- Lukewarm Cache means some assets are cached by the browser, and some new or modified assets are not cached.

- Optimal: no wasted bandwidth or round trips.

- Sub-optimal: wasted bandwidth or round trips.

- Wastage due to trade-offs. Varies by implemented strategy.

HTTP/2 Server Push vs HTTP/1.1 Asset Bundling

HTTP/2 is semantically compatible with HTTP/1.1, which allows nearly seamless, rapid adoption. The newly introduced concept of Server Push, a protocol-level response concatenation mechanism, enables new possibilities for web performance. 1

Let’s compare HTTP/2 Server Push with the widely adopted HTTP/1 best practice of Asset Bundling.

Latency

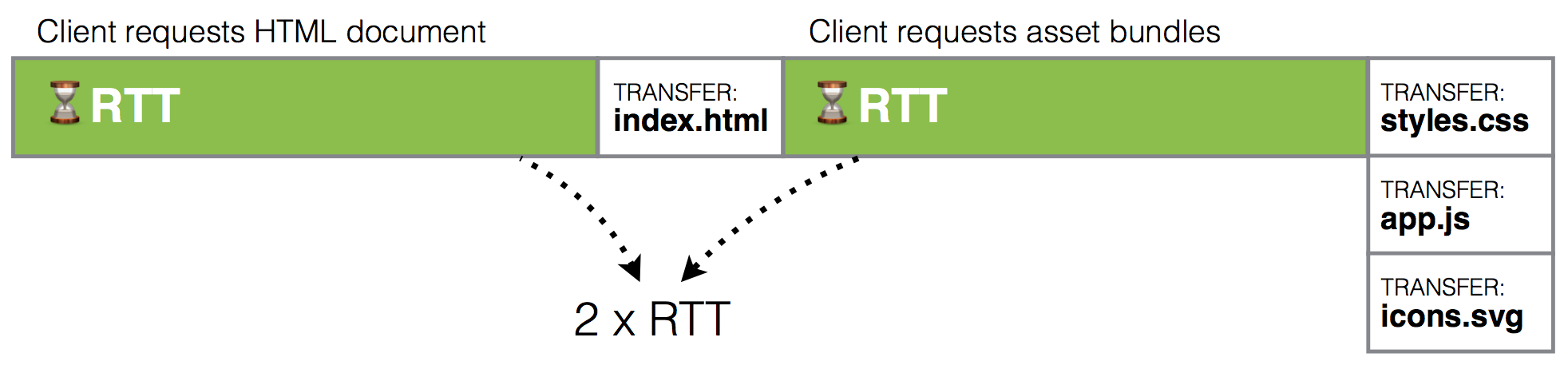

Round trip time (RTT) may compound with nested dependencies. Eliminating request-response round trips at the HTTP level is an important goal for web performance optimisation. 2

Asset Bundling collapses nested dependency round trips but still requires a wasted round trip for the bundles themselves. Inlining “above the fold” assets becomes non-trivial and wastes bandwidth over multiple page loads by sacrificing cached asset re-use. 3

Server Push loads the entire page with all its assets in a single HTTP request-response round trip, which is the optimal latency. Assets remain cacheable. Protocol-level prioritisation can deliver critical assets first. 4

Bandwidth

The aggregate size of all assets is roughly identical between Server Push and Asset Bundling, with some studies showing minor compression and protocol overhead advantages for Asset Bundling. 5 Results may vary depending on content types (JavaScript, CSS, images, etc). Overhead may improve as loading techniques are optimised for Server Push and application-level overhead is eliminated, for example through smaller bundling shims for JavaScript, no slicing coordinates for spritesheets, and no URL fragment addressing of stacked SVGs.

Cache Invalidation

The optimal strategy for cache invalidation should require only a single request-response round trip. With file revving (e.g. a unique filename or ETag for each asset revision) and long expiry times, only the entry point (e.g. HTML document) is revalidated. Upon invalidation, as little unchanged data as possible should be transferred.
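File revving by filename can be sketched as follows. This is only an illustration: the `revved_name` helper, the SHA-256 content hash and the 8-character digest length are assumptions made for the example, not something prescribed here.

```python
import hashlib
from pathlib import PurePosixPath


def revved_name(path: str, content: bytes, digest_len: int = 8) -> str:
    """Return a filename that embeds a short hash of the file content."""
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    # assets/app.js -> assets/app.<digest>.js
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


old = revved_name("assets/app.js", b"console.log('v1')")
new = revved_name("assets/app.js", b"console.log('v2')")
# Unchanged content keeps its URL, so it can be cached with a long expiry.
# Changed content gets a new URL, so only the entry point needs revalidation.
```

Because the name depends only on the content, two builds of an unchanged asset produce the same URL and the cached copy stays valid.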

Asset Bundling

TL;DR The cost of cache invalidation with bundling is proportional to the product of development velocity and total asset size.

Due to increasingly large bundle sizes, the cost of invalidation becomes untenable. Transfer size induces delays even if only a small portion of the file was changed. Asset processing (decoding and parsing) can choke browsers. 6

One common mitigation strategy is splitting into “vendor” and “app” bundles. The former contains third-party libraries, the latter the source code written by the app’s developer. The assumption is that vendor bundles rarely change, yet make up the largest share of the bundle size. 7

However, the low rate of change may be attributable to outdated dependencies. Large vendor bundles, if they were automatically updated, might change more frequently than the app bundle, defeating the purpose of bundle splitting. Dependency management tools are taking developers in this direction. 8

Increasing package registry usage also suggests more frequently updated vendor bundles. 9

Additionally, continuous delivery is a software development practice where small, incremental code changes are published frequently. This also triggers invalidation of the app bundle. 10

These trends suggest asset bundling is not ideally suited for the needs of future web applications.

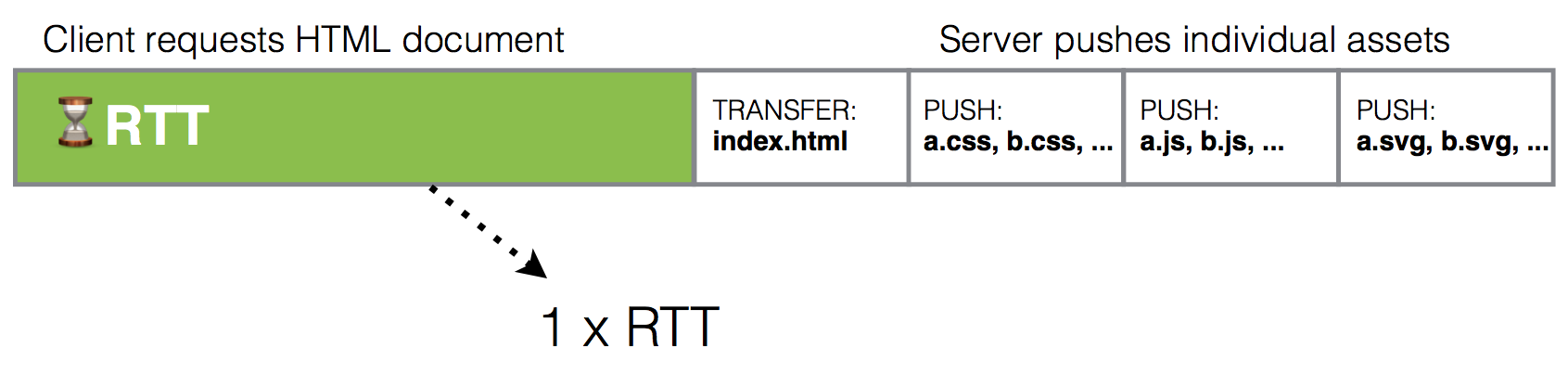

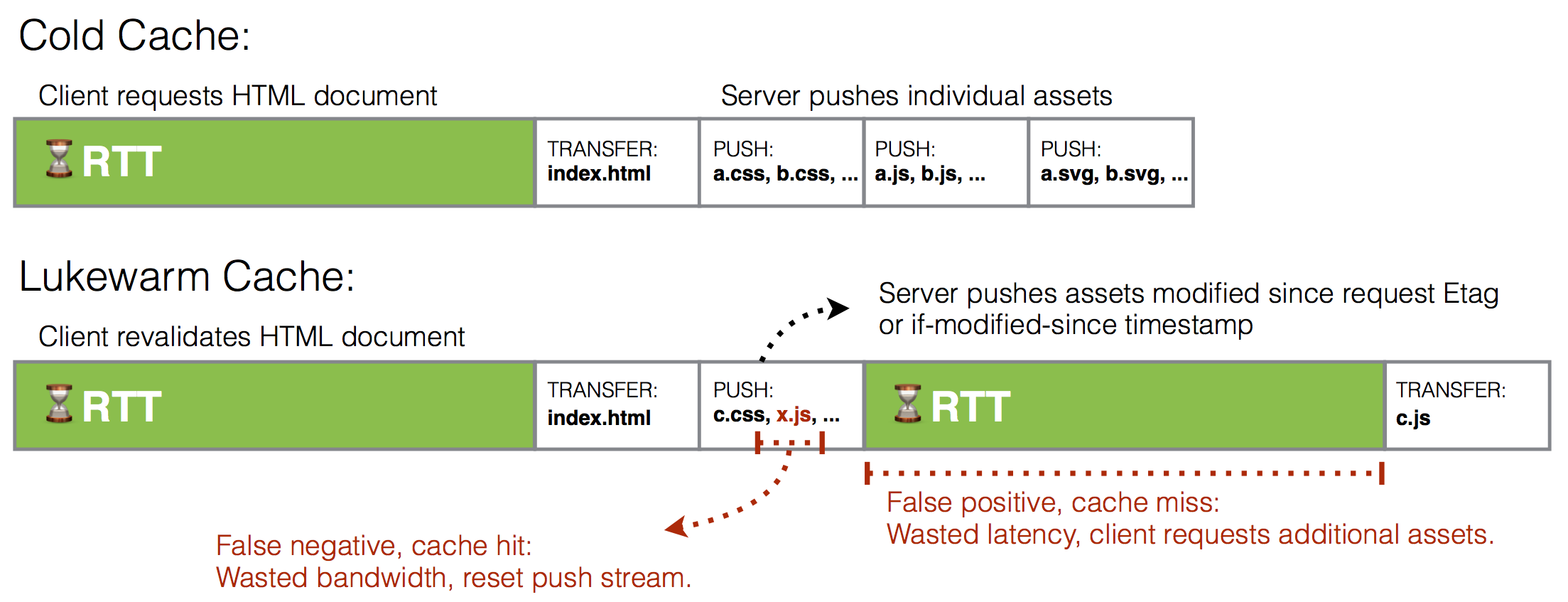

Server Push Without Cache Digests

Individual asset sizes are minimal with server push. Instead, discovery of invalidated assets is the challenge with server push.

Strategy A: Always Push Everything

TL;DR High bandwidth cost.

Always push everything. The server blindly pushes all assets, wasting bandwidth on those that were still fresh in the client cache. Stream reset frames sent by the client cannot eliminate data frames already pushed by the server, a problem exacerbated by latency and small asset size.

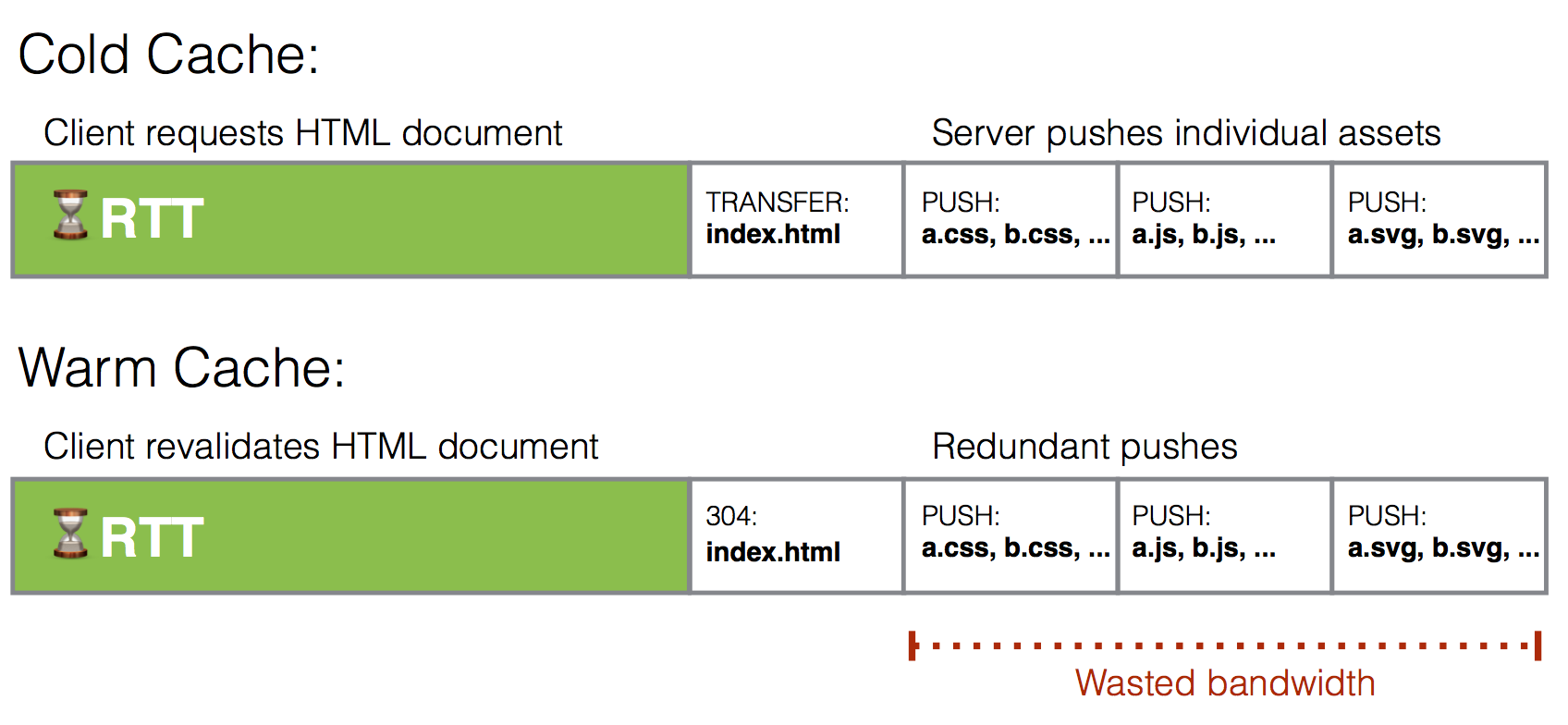

Strategy B: Only push everything the first time

TL;DR High latency cost.

Never push anything if the client sent a conditional request, i.e. an If-None-Match header carrying an ETag, or an If-Modified-Since header. The client asynchronously discovers outdated assets, potentially incurring multiple round trips.

Strategy C: Dependency tree with revisions

TL;DR Unpredictable cache miss rate.

Use ETags on the incoming request to deduce which dependencies have also since expired, and push those alongside the response. This requires toolchain support to track revisions, typically hashes or modified timestamps. The server's deduction about the client cache may produce false positives and false negatives, falling back to multiple round trips and/or wasting bandwidth.

The downsides to these strategies prevent Server Push from replacing Asset Bundling. That is, until Cache Digests.

Introducing: Cache Digests

Cache Digest is a specification currently under discussion at the IETF HTTP Working Group. 11 Note that the specification is undergoing changes and what you read here may not reflect the final standard, if it makes it through the standards track at all.

A cache digest is sent by the client to the server. It tells the server what the client’s cache contains.

A server that knows which assets are, or aren’t, already cached by the client, can push exactly what is needed. No more, which would waste bandwidth. No less, which would incur round trip latency.

What are cache digests?

A cache digest is a Golomb-Rice coded Bloom filter. Let’s pick that name apart.

Golomb coding is a lossless compression scheme suited to numbers that follow a geometric distribution, i.e. where small values are far more likely than large ones.

Rice coding is the subset of Golomb coding whose parameter is a power of two, which makes it cheap to compute with binary arithmetic. It is also used in lossless audio and image compression and referred to as Adaptive Coding.

Bloom filters are compact, probabilistic data structures. Think of them as sets that can only report the presence or absence of a specific element in the set, with a small chance of a false positive but no false negatives. Databases use them to minimise expensive disk access.
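To make the coding step concrete, here is a minimal sketch of Rice coding a single number. The bit convention (quotient in unary as zeros terminated by a one, then a fixed-width remainder) and the function names are assumptions chosen for illustration; other conventions exist.

```python
def rice_encode(value: int, k: int) -> str:
    """Encode a non-negative integer as a Rice code with parameter 2**k.

    The quotient is written in unary (zeros terminated by a one) and the
    remainder as a fixed-width k-bit binary number.
    """
    if value < 0 or k < 0:
        raise ValueError("value and k must be non-negative")
    quotient, remainder = value >> k, value & ((1 << k) - 1)
    unary = "0" * quotient + "1"
    binary = format(remainder, f"0{k}b") if k else ""
    return unary + binary


def rice_decode(bits: str, k: int) -> int:
    """Decode one Rice code from the start of a bit string."""
    quotient = bits.index("1")  # number of leading zeros
    remainder = int(bits[quotient + 1 : quotient + 1 + k] or "0", 2)
    return (quotient << k) | remainder


print(rice_encode(5, 7))    # 10000101   (8 bits)
print(rice_encode(300, 7))  # 0010101100 (10 bits)
```

Small values cost barely more than k bits, while rare large values grow by one bit per multiple of 2**k, which is why the scheme fits geometrically distributed numbers.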

What goes into cache digests?

Instead of recording the entire asset’s data, the cache digest records merely its URL and ETag. This is enough for a server to know whether an asset is present in the client’s cache.

Each URL and ETag tuple is concatenated and SHA-256 hashed. Only a small portion (never more than 62 bits, and typically less than 10 bits) of the hash is added to the Bloom filter.
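The hashing step described above might look like the following sketch. Two details are assumptions rather than facts stated here: that the number of entries `n` and the inverse collision probability `p` are both powers of two, and that the leading (most significant) bits of the hash are the ones kept. The function name is hypothetical.

```python
import hashlib


def truncated_hash(url: str, etag: str, n: int, p: int) -> int:
    """Hash a URL and ETag down to log2(n * p) bits.

    Assumptions: n (digest capacity) and p (inverse false-positive
    probability) are powers of two, and the leading hash bits are kept.
    """
    if n < 1 or p < 1 or n & (n - 1) or p & (p - 1):
        raise ValueError("n and p must be powers of two")
    bits = (n * p).bit_length() - 1      # log2(n * p)
    if bits > 62:
        raise ValueError("at most 62 bits of the hash may be used")
    key = (url + etag).encode("utf-8")   # concatenate the tuple
    full = int.from_bytes(hashlib.sha256(key).digest(), "big")
    return full >> (256 - bits)          # keep the most significant bits


value = truncated_hash("https://example.com/style.css", "", n=2, p=128)
# Two entries at a 1/128 collision rate need only log2(2 * 128) = 8 bits,
# so `value` is in the range 0..255.
```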

Who generates cache digests?

The client generates the cache digest based on the contents of its cache and sends it in a special HTTP/2 frame. Ideally this is done natively by the browser, but no browser supports it yet. In the meantime, a Service Worker script and the Cache API can be used to send the cache digest in a request header. This can be a standalone implementation like cache-digest-immutable 12 or part of a build tool like unbundle. 13

Alternatively, as a fallback for clients that do not support service workers, the server generates a cache digest based on which assets it pushed and sets it in a cookie. The specification currently specifies neither the cookie name nor the HTTP request header, so implementations are unlikely to achieve compatibility until the HTTP frame is supported natively.

Who queries the cache digest?

Current experimental implementations, in webservers like http2server 14 and H2O 15, use cache digests to filter pushed assets, skipping any assets present in the cache digest. Future implementations could operate at other stages. For example a CDN, load balancer, or accelerator, could pre-emptively push assets based on known traffic patterns.
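The filtering step can be sketched as a membership test per push candidate. This is not how http2server or H2O are implemented; it is a simplified illustration that assumes the digest has already been decoded into a set of truncated hash values, and `HASH_BITS`, `short_hash` and `assets_to_push` are hypothetical names.

```python
import hashlib
from typing import Dict, List, Set

HASH_BITS = 30  # hypothetical log2(N * P); small digests use far fewer bits


def short_hash(url: str, etag: str, bits: int = HASH_BITS) -> int:
    """Truncated SHA-256 of the concatenated URL and ETag."""
    digest = hashlib.sha256((url + etag).encode("utf-8")).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)


def assets_to_push(candidates: Dict[str, str], cached: Set[int]) -> List[str]:
    """Return the URLs from `candidates` (URL -> ETag) missing from the digest."""
    return [url for url, etag in candidates.items()
            if short_hash(url, etag) not in cached]


site = {"/style.css": '"v2"', "/app.js": '"v7"', "/logo.svg": '"v1"'}
# The client holds the current stylesheet and an outdated revision of app.js.
client_digest = {short_hash("/style.css", '"v2"'), short_hash("/app.js", '"v6"')}
pushes = assets_to_push(site, client_digest)
# /style.css is skipped; /app.js (stale ETag) and /logo.svg (never cached)
# are pushed, barring a hash collision.
```

A collision makes the server wrongly skip an asset, which the client then fetches with an ordinary request, so a false positive costs a round trip rather than correctness.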

How large are cache digests?

The size of Bloom filters depends on the number of items and the probability of collisions. More bits per element make a false positive less likely. The maximum number of entries is 2^31, but in practice a few thousand assets would be realistic. Typically around 6 to 12 bits per element are required. There is also a header of 10 bits describing entry size.

All things considered, the size of a cache digest compares favourably to even a single wasted data frame.
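A rough size estimate can be derived from these numbers. The sketch below assumes each entry is Rice coded with a log2(P)-bit remainder plus a unary part of one to two bits on average, and that the digest is padded to whole bytes. These are assumptions for illustration, not figures taken from the specification.

```python
import math
from typing import Tuple


def digest_size_bytes(entries: int, p: int, header_bits: int = 10) -> Tuple[int, int]:
    """Estimate (low, high) digest size in bytes for a 1/p collision rate."""
    k = p.bit_length() - 1                     # log2(p) remainder bits
    low = header_bits + entries * (k + 1)      # unary part is a single bit
    high = header_bits + entries * (k + 2)     # unary part averages two bits
    return math.ceil(low / 8), math.ceil(high / 8)


print(digest_size_bytes(2, 128))    # (4, 4)
print(digest_size_bytes(177, 128))  # (179, 201)
```

Under these assumptions, a 1/128 collision rate costs 8 to 9 bits per asset, which sits inside the 6 to 12 bit range mentioned above.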

What do cache digests look like?

This small example contains the following URLs, without ETags, and a 1/128 (<1%) chance of collision:

- https://example.com/style.css

- https://example.com/jquery.js

The result is a 32 bit cache digest. In hexadecimal notation: 09 d6 50 e0
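The 32-bit digest above can be unpacked with a short decoder. The bit layout used here is an assumption based on an early draft of the specification: 5 bits for log2 of the entry count N, 5 bits for log2 of P, then one Rice-coded delta per entry (zeros terminated by a one, followed by a log2(P)-bit remainder), with zero padding up to a byte boundary. The function name is hypothetical.

```python
from typing import List, Tuple


def decode_digest(data: bytes) -> Tuple[int, int, List[int]]:
    """Decode a digest into (N, P, sorted truncated hash values)."""
    bits = "".join(f"{byte:08b}" for byte in data)
    log_n = int(bits[0:5], 2)    # header: log2 of the entry count
    log_p = int(bits[5:10], 2)   # header: log2 of the inverse collision rate
    n, p = 1 << log_n, 1 << log_p
    values: List[int] = []
    pos, previous = 10, -1
    while True:
        one = bits.find("1", pos)
        if one == -1 or one + 1 + log_p > len(bits):
            break                # only zero padding is left
        quotient = one - pos     # unary part: count of leading zeros
        remainder = int(bits[one + 1 : one + 1 + log_p], 2) if log_p else 0
        previous += quotient * p + remainder + 1   # undo the delta coding
        values.append(previous)
        pos = one + 1 + log_p
    return n, p, values


print(decode_digest(bytes.fromhex("09d650e0")))  # (2, 128, [178, 186])
```

Under that layout the header reads as 2 entries at a 1/128 collision rate, which matches the example, and the two values are the 8-bit truncated hashes stored in the digest.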

A service worker would base64 encode the cache digest and add it as an HTTP request header:

cache-digest: MTY1MDQwMzUy; complete

A longer example containing 177 asset URLs, set by a server as a 247 byte, base64 encoded, cookie:

set-cookie: cache-digest=Oe6yYGgvbvvsqCLcmGwbUzZisNmMi5QwFDFwMrxwEWjPUmbWv6G3IRu9fr7Mn7CyekvDNkU8NeTOtLJ2Gv4mKVEqsvehrpvJx5/7uLrJvzkiK/Y2sbCCO4rd0+igQnVbzan30ZNa5RQNNbXLVtLSeQcDYqDisumc4Jzsr9C8hJ4UVh4QNZwInQ5aVGkGftHSWzmn9KLzs2tS8hu+X3ETWujVdpPaYi465q6iQUFUxbnWjs6zm5qFRQA

How often are cache digests sent?

The cache digest frame should be sent when the connection is opened, with the server tracking any additional assets served. The header is sent on every request that goes through the service worker, though implementations can further optimise this behaviour. The cookie is sent with every request.

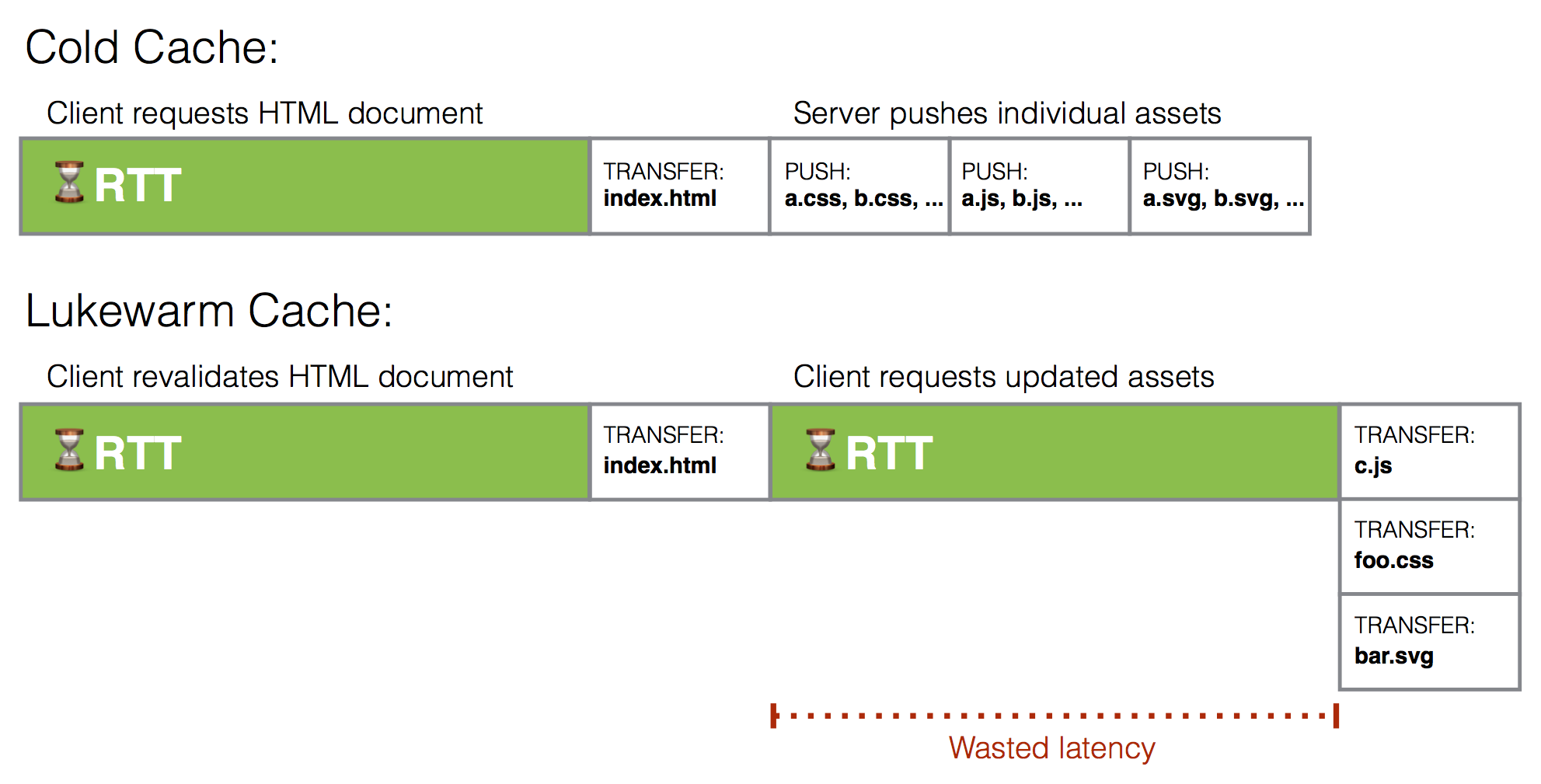

HTTP/2 Server Push with Cache Digests

TL;DR Optimal bandwidth and latency results are achieved under various scenarios.

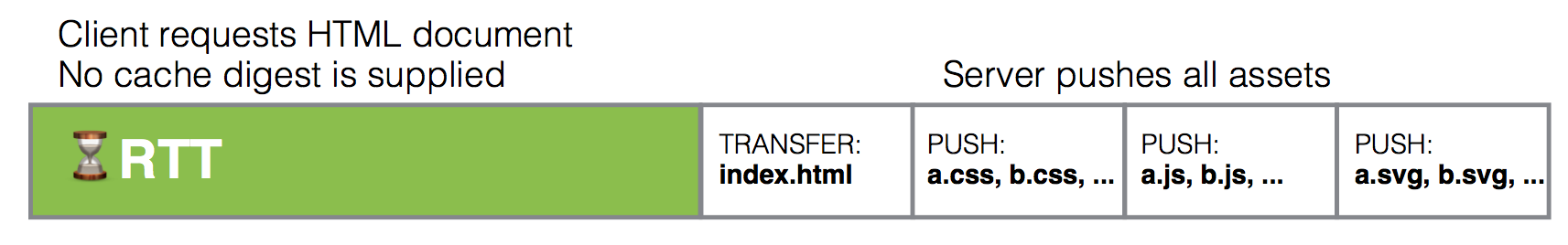

Scenario 1: Cold Cache

On the initial page load, when a user first visits the website, the cache digest either cannot be sent to the server or is empty.

Latency: Single request round-trip.

Bandwidth: Entire page.

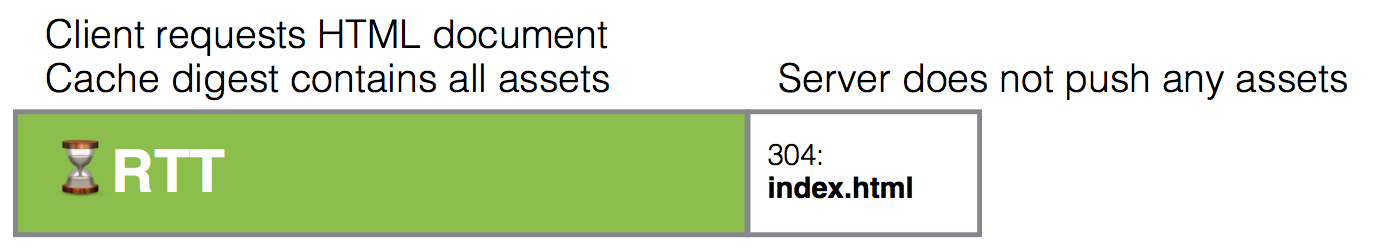

Scenario 2: Warm Cache

The user navigates between pages on a website within the same browsing session, or the site has not changed between repeat visits.

Latency: Single request round-trip.

Bandwidth: Only the revalidated response. No assets are sent redundantly.

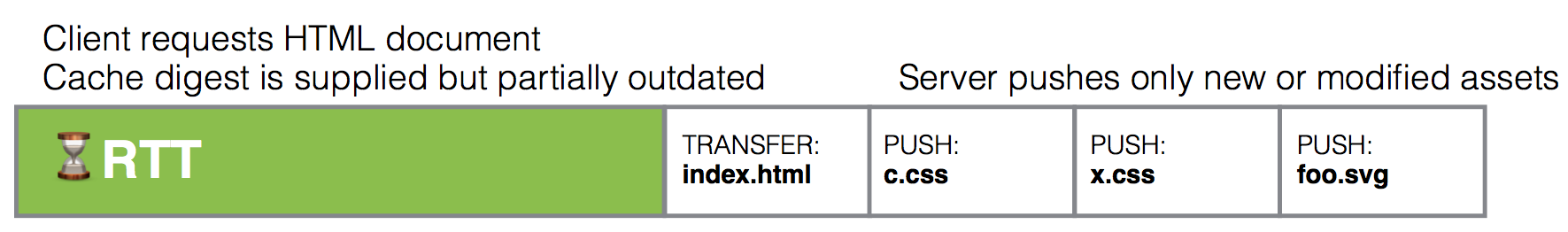

Scenario 3: Lukewarm Cache

The user visits a previously cached page that has new or modified assets.

Latency: Single request round-trip.

Bandwidth: Revalidated response and uncached assets.

Conclusion

HTTP/2 Server Push achieves optimal latency and bandwidth efficiency by using bloom filters as cache digests.

References

- https://hpbn.co/primer-on-web-performance/#latency-as-a-performance-bottleneck

- https://medium.com/@cramforce/why-amp-html-does-not-take-full-advantage-of-the-preload-scanner-7e7f788aa94e

- https://http2.github.io/faq/#whats-the-benefit-of-server-push

- http://engineering.khanacademy.org/posts/js-packaging-http2.htm

- https://webpack.github.io/docs/code-splitting.html#split-app-and-vendor-code

- https://blog.codeship.com/continuously-deploying-single-page-apps/