Estelle Weyl (@estellevw) started her professional life in architecture and then managed teen health programs. In 2000, Estelle took the natural step of becoming a web standardista. She currently writes for MDN Developer Network and organizes #PerfMatters Conference. She has consulted for Kodak Gallery, SurveyMonkey, Samsung, Yahoo, Visa, and Apple, among others. Estelle shares esoteric tidbits learned while programming in her blog. She is the author of Mobile HTML5 and co-authored CSS: The Definitive Guide, and HTML5 and CSS3 for the Real World. While not coding, Estelle works in construction, de-hippifying her 1960s throwback abode.

In 2003, to normalize a setClass function across browsers, I created my first JavaScript library. It checked for support of the standard document.getElementById, gracefully degraded to check for the non-standard document.all, and used UA sniffing to check for old Netscape. I used it to do only one thing: change class names. It was ugly, but necessary. By today’s standards, at 885 bytes unminified, it was also tiny. Popular libraries and frameworks are much larger as they are written to be more robust than mine, but what is their impact on performance?

While we’ve focused a great deal of effort on minimizing the time to download assets, and some sites have improved first render by becoming isomorphic, in general there hasn’t been enough attention paid to the performance of client-side JavaScript, which can have terrible performance consequences. For most websites, it is generally faster to generate content server-side. When a site spends more time processing scripts than downloading them before painting meaningful content to the page, we have to ask ourselves, “are dependencies worth it?”

Libraries enable “code once, work everywhere.” Normalizing event listening methods addEventListener and attachEvent was a common requirement and a big reason why jQuery was so quickly adopted. jQuery, currently at 252 KiB unminified (32 KiB minified and compressed), includes addClass, removeClass and toggleClass, and pretty much everything else you may need, as well as many features that aren’t needed. As many learn to use a library before or instead of learning vanilla JavaScript, libraries are often also used for things the browser now does natively. For example, if your only need is a toggleClass or similar function, a library isn’t needed as classList is natively supported in all modern browsers.
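As a rough illustration of how little code a class toggle needs today, here is a minimal sketch. The helper name `toggleClass` and the string-based fallback are assumptions for illustration, not code from jQuery or from my 2003 library; the native path relies only on the standard `classList.toggle` method.

```javascript
// Hypothetical helper: toggles a class name without any library.
// Returns true if the class is present after the call, false otherwise.
function toggleClass(el, name) {
  if (el.classList) {
    // Native path: classList.toggle() adds or removes the class and
    // reports whether it is now present.
    return el.classList.toggle(name);
  }
  // Fallback for very old browsers: edit the className string by hand.
  const names = el.className.split(/\s+/).filter(Boolean);
  const index = names.indexOf(name);
  if (index === -1) {
    names.push(name);
  } else {
    names.splice(index, 1);
  }
  el.className = names.join(' ');
  return index === -1;
}
```

In a browser, a call such as `toggleClass(document.querySelector('nav'), 'open')` would cover the one thing many sites load a library for. In modern browsers the fallback branch could be dropped entirely.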

With the ubiquity of standards-supporting evergreen browsers, there’s less of a need to normalize. While you would think this would lead to fewer dependencies, script bloat has not subsided. Quite the contrary. The average website makes 24 JavaScript requests to download an average of 420 KiB of JavaScript – which includes both dependencies and 3rd-party scripts. As developers, we include libraries and frameworks to help us code: increasingly not because we need to, but rather because we want to. Most of us use frameworks and libraries to make our own lives easier, but there are many who add them because they want to learn how to use them rather than out of actual site or application requirements.

While frameworks and plugins can provide for faster, optimized, readable code, with function chaining, implicit iteration behavior, and other nice-to-haves, they’re not always beneficial or necessary. We should be focused on the user experience, not the developer experience. Think of libraries as scaffolding: they can help you quickly prototype and even build your application, but you don’t leave unnecessary scaffolding in place when the building, bridge or application is complete: you remove what you don’t need. Why are we sending unused functions with each request? Why are we creating web components which replicate the features of a native element, with or without a simple event handler, that are often only used once? Libraries, frameworks, and other dependencies should never be included out of laziness or for resume padding.

Using a framework or library to simplify a coding job means every site visitor will have to wait for that dependency to download. Every additional plugin contributes to bloat. In 2010, when we started keeping records about web app sizes, the average website was around 700 KiB, the size of ReactJS (145 KiB minified and compressed). ReactJS is now a starting point for many sites. That’s a lot of bytes! Does it benefit the user? Or was it included to benefit the developer either in terms of time saved or in terms of added professional experience? What are the costs associated with including each dependency? It’s not just bloat that’s an issue, but also relying on 3rd-party dependencies that can be points of failure. For example, keeping left-pad as an external dependency caused major issues with React, Babel and many, many web applications when a similar, basic function could have been rolled into a site’s script without breaking the web!
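To give a sense of how small such a function is, here is a minimal sketch of a padding helper rolled into a site’s own script. The name `leftPad` and its parameters are assumptions chosen for illustration; this is not the source of the left-pad package. It leans on the standard `String.prototype.padStart`, which did not exist when the original package was written.

```javascript
// Hypothetical inlined replacement for a tiny padding dependency.
// Pads `value` on the left with `padChar` until it is `length` characters long.
function leftPad(value, length, padChar = ' ') {
  const text = String(value);
  // padStart returns the text unchanged when it is already long enough.
  return text.padStart(length, padChar);
}

console.log(leftPad(5, 3, '0')); // "005"
```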

Today the average size of a web site or application is around 2.5 MB, with 403 KiB of generally minified scripts (versus 118 KiB of scripts, both minified and not, in 2010). 403 KiB may not seem that bad, being only 16% of the bloat of a modern web application. Indeed, optimizing images and other media continues to be the “low-hanging fruit” of web application bandwidth consumption. However, it’s not just the download time of application assets that matters. Performance isn’t just about download time: it’s about time to interactive and sustaining 60 frames per second (fps). When you have a site that is fully built client-side, the download time may be reasonable, but 60 fps may become a pipe dream.

When a site is built client-side, the content needs to be dynamically created – the JavaScript needs to be parsed and executed, the HTML needs to be parsed and rendered.

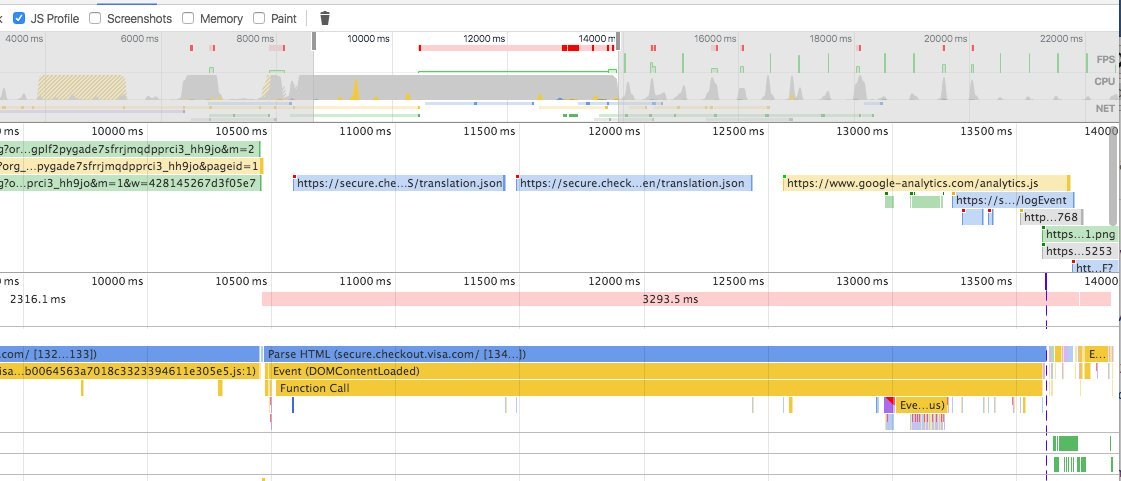

Here is a screenshot of the timeline (and the waterfall view later on) of a single-page application (SPA) which renders a fairly simple form with 55,000 lines of JavaScript. It shows a 0.3 fps frame rate and a 3+s DOMContentLoaded event, neither of which is a good user experience.

The SPA is just over 1 MB, 56.5% of which is JavaScript (on most sites, images or other media account for the majority of the bandwidth), with 91% of processing time spent on scripting, versus 4% on layout.

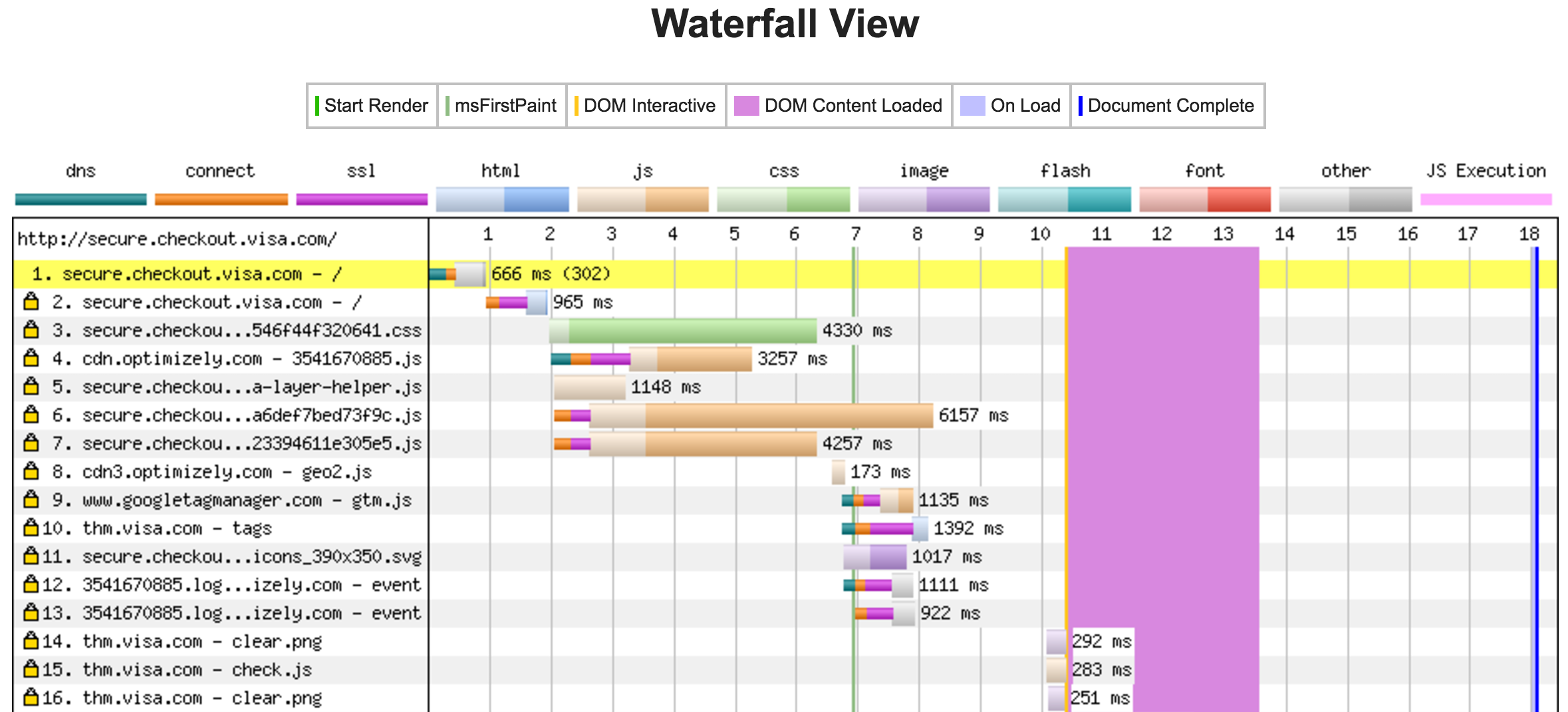

The HTML request is small. Over fast 3G, it only takes 18 ms to download the 2.6 KiB HTML file, after a TTFB of 1,424 ms, which includes the DNS lookup, redirection to HTTPS, and SSL negotiation (this is an area that can definitely be improved). The problem is, the HTML draws only one empty DOM node to the page: the body contains only an empty div with an ID to create a hook for content created client-side. It calls a few scripts that, in turn, create all the content for this client-side application, with the main components of the site downloading in 8,204 ms. While the CSS puts the background image on the body element at the 6.92 s first paint, the rest of the application doesn’t render until DOMContentLoaded at the 13.62 s mark: it takes more than 5 seconds after the content-creating assets are downloaded for the client-side script to render the content to the page.

It takes over 2 seconds to parse the JavaScript and HTML, during which time nothing is drawn to the screen. This delay is followed by a 3.3s frame – as in 0.3 fps when we’re aiming for 60 fps – that includes a 3.12s DOMContentLoaded event. Yes, you read that correctly: a DOMContentLoaded event that lasts longer than three seconds.
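The arithmetic behind those frame numbers can be sketched in a few lines. The function names here are hypothetical; the only inputs are the 60 fps target and the 3.3 s frame quoted above.

```javascript
const TARGET_FPS = 60;
// At 60 fps, each frame has roughly 16.7 ms to do all of its work.
const frameBudgetMs = 1000 / TARGET_FPS;

// Frame rate you would get if every frame took this long.
function framesPerSecond(frameDurationMs) {
  return 1000 / frameDurationMs;
}

// How many 60 fps frame budgets a single long frame uses up.
function budgetsConsumed(frameDurationMs) {
  return frameDurationMs / frameBudgetMs;
}

console.log(frameBudgetMs.toFixed(1));          // "16.7"
console.log(framesPerSecond(3300).toFixed(1));  // "0.3"
console.log(Math.round(budgetsConsumed(3300))); // 198
```

In other words, that one 3.3 s frame occupies the time in which a smooth page would have painted about 198 frames.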

We get a beautiful pink bar in our waterfall timeline — a sight we never want to see.

During this time the CPU is at capacity, the main thread is occupied, and 1 to 2MB of garbage gets collected at regular intervals.

I did a second run of WebPageTest to confirm the results. In this second run, the main JavaScript is requested 1.282 s after the initial request for the app is made, taking 2.525 s to download 256 KiB of content. That’s not exactly good, even for 3G, and is the area where most web performance services focus their efforts. The initial HTML, and all the CSS and JavaScript for the single-page application downloaded within 6 seconds (5,963 ms), mainly because the CSS was super slow to download. This needs to improve, and can easily be improved with web performance best practices, but is not the point of this post. My main issue with this site is what hasn’t happened yet: at six seconds, after the relevant resources have already been downloaded, the screen is STILL blank. It remains blank for two full seconds after the CSS and JavaScript are downloaded. It remains blank until the start of DOMContentLoaded, which itself lasts over two seconds as the JavaScript is parsed and executed. The start render is at 8.077 s, and the first meaningful paint – the background only – is at 8.7 seconds.

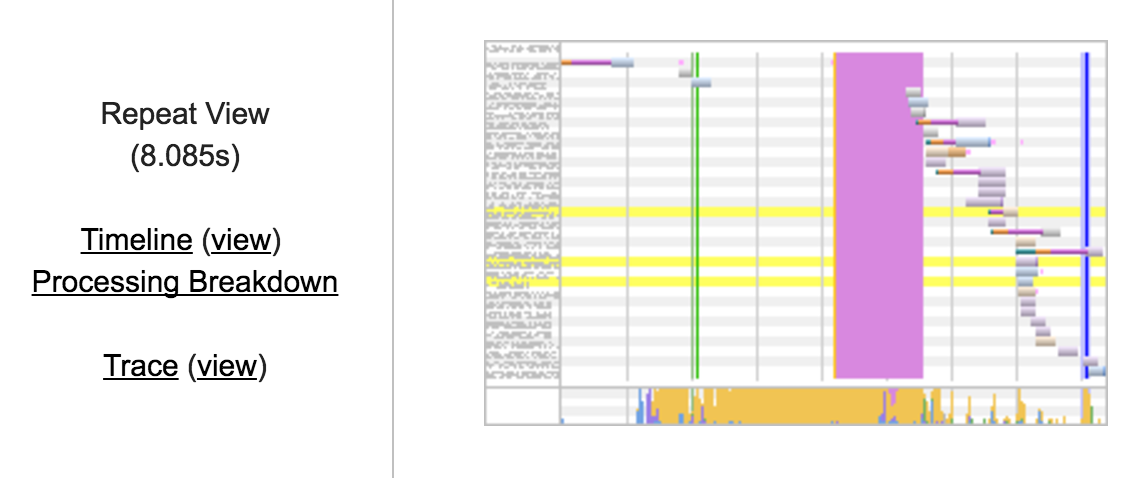

With cached assets, the browser still spends 4.24 seconds processing script. The content, generated client-side, doesn’t get painted to the page for 5.7 seconds. The time it takes to render the cached view is longer than I would expect such a site to take to deliver everything from the server and render the first view!

Companies can spend hundreds of developer hours and hundreds of thousands of dollars making download time faster, within the recommended 1 to 2 seconds. However, if they aren’t focusing on the time it takes to parse and execute client-side site-generating scripts, they are not going to improve user experience.
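One way to start watching processing time alongside download time is to split a navigation into phases. The sketch below is an assumption about how you might do that, not the tooling used for the measurements in this post. The function name `summarizeNavigation` is hypothetical, while the property names it reads are those of the standard `PerformanceNavigationTiming` entry.

```javascript
// Hypothetical helper: breaks a navigation timing entry into phases (all in ms).
function summarizeNavigation(entry) {
  return {
    // Time until the first byte of the HTML response arrives.
    timeToFirstByteMs: entry.responseStart - entry.startTime,
    // Time spent downloading the HTML document itself.
    htmlDownloadMs: entry.responseEnd - entry.responseStart,
    // Gap between the HTML finishing and DOMContentLoaded starting;
    // blocking scripts and styles are fetched, parsed, and run in this window.
    waitBeforeDomContentLoadedMs: entry.domContentLoadedEventStart - entry.responseEnd,
    // How long the DOMContentLoaded handlers themselves run.
    domContentLoadedMs: entry.domContentLoadedEventEnd - entry.domContentLoadedEventStart,
  };
}
```

In a browser, passing `performance.getEntriesByType('navigation')[0]` to this function after the page has loaded should return the four durations. A large `domContentLoadedMs` next to a small `htmlDownloadMs` is the pattern described above.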

No matter how optimized the download speed gets, when you have 55,000 lines of JavaScript, like this single-page application, it’s going to take too long to parse and execute. There is no reason to include as many dependencies as this site includes for what is, in reality, a fairly simple form. The front-end functionality of such a simple form can be coded in under 1,000 lines of JavaScript, and fewer than 1,000 lines of CSS. Using semantic HTML, the functionality can be recreated in a few days without a framework and without any dependencies.
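For comparison with an empty div that waits for client-side scripts, here is a minimal sketch of generating a simple form as semantic HTML on the server. Everything specific in it is an assumption for illustration: the field names, the `/contact` action, and the helper names are hypothetical and are not taken from the application measured above.

```javascript
// Escape text so values cannot inject markup into the page.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical field list; names, labels, and types are assumptions.
const fields = [
  { name: 'name', label: 'Name', type: 'text' },
  { name: 'email', label: 'Email', type: 'email' },
  { name: 'message', label: 'Message', type: 'text' },
];

// Builds the complete form markup as a string, ready to send in the HTML response.
function renderForm(action, fieldList) {
  const rows = fieldList.map((field) => {
    const id = escapeHtml(field.name);
    return `  <p><label for="${id}">${escapeHtml(field.label)}</label> ` +
      `<input id="${id}" name="${id}" type="${escapeHtml(field.type)}" required></p>`;
  });
  return `<form action="${escapeHtml(action)}" method="post">\n${rows.join('\n')}\n` +
    '  <p><button type="submit">Send</button></p>\n</form>';
}

console.log(renderForm('/contact', fields));
```

Because the markup arrives in the initial HTML, the browser can paint the labels and inputs as soon as it parses them. Native attributes such as `required` and `type="email"` provide basic validation before any script runs.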

Good engineering involves finding simple solutions to sometimes complex problems. Repurposing code may make development easier, but ease of development is not the end goal.

Developers need to continue to learn to code before they learn to include frameworks. I am not saying developers should reinvent the wheel for each project, but 55,000 lines of JavaScript and six seconds to render a form you can replicate in under 1,000 lines of JavaScript?

Sometimes you really do need a framework, and a 3-second DOMContentLoaded event may be faster than a server-side render, but this is something you need to weigh. While rendering data with a thousand reuses of a single template is likely a good use case for client-side rendering, in the case of a three-field form, the cost associated with client-side rendering is too high. In general, however, you should only require your users to download functions that are actually needed for your application to work. An extended delay caused by parsing unnecessary JavaScript is avoidable, frustrating, and bad for business.