Nicolás (@NicPenaM) is a software developer currently working on Google Chrome's Speed Metrics team. He's an active member of the W3C Web Performance Working Group and a spec editor of various web performance APIs that have shipped in Chrome. He was born and raised in Colombia, and lives in Canada.

The web perf community talks a lot about web performance and its impact on business metrics. We even have entire sites dedicated to that. On Chrome’s Speed Metrics team, we looked into how we can better measure one of the key business metrics: abandonment. That left us with a bunch of questions:

- How do we define “abandonment”?

- How should abandonment be measured?

- What problems are we trying to solve by enabling a better measurement API for it?

- How often are page loads abandoned on the web today?

In this article, I’ll outline that exploration, answer some of those questions, show some data we collected, and finally, ask even more questions!

Ready? Let’s dive in!

Why do we care?

Abandonment is tightly coupled with page load performance. In a large-scale analysis, the Chrome Speed Metrics team found that slower pages are 24% more likely to be abandoned by users. I’d argue that if you care about web performance, you should care about abandonment as well. Let’s see why.

The web performance metrics space has changed greatly in recent years. One notable example is the introduction of Web Vitals, a set of user-centric metrics that improve upon previous standards relying on browser-centric concepts such as onload. These metrics have prompted much discussion among people who care about web performance. Many of them focus on initial page load performance, and there is ongoing investigation into how to reason about web performance beyond the initial load.

The Paint Timing API offers a first glimpse by reporting when the browser first renders something for a given page, including First Contentful Paint (FCP), the first time it renders content. For a while, it was only implemented in Chromium. Fortunately, this year it shipped in WebKit (thanks to Wikimedia), and Firefox began implementing it.
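As a rough illustration of what the Paint Timing API exposes: in a browser, paint entries are typically collected with a `PerformanceObserver` observing `{ type: "paint", buffered: true }`, and the FCP entry is the one named `first-contentful-paint`. The sketch below keeps only the browser-independent part, picking the FCP timestamp out of a list of entries. `PaintEntryLike` and `getFCP` are hypothetical names standing in for the DOM's `PerformancePaintTiming` entries.

```typescript
// Hypothetical stand-in for the DOM's PerformancePaintTiming entries,
// so that this sketch runs outside a browser.
interface PaintEntryLike {
  name: string;      // e.g. "first-paint" or "first-contentful-paint"
  entryType: string; // "paint" for Paint Timing entries
  startTime: number; // ms relative to the start of the navigation
}

// Returns the FCP timestamp, or undefined if no FCP entry was reported
// (for example, because the user left before anything contentful rendered).
function getFCP(entries: readonly PaintEntryLike[]): number | undefined {
  const fcp = entries.find(
    (entry) => entry.entryType === "paint" && entry.name === "first-contentful-paint",
  );
  return fcp === undefined ? undefined : fcp.startTime;
}

// Usage with entries shaped like the ones a browser would report.
const entries: PaintEntryLike[] = [
  { name: "first-paint", entryType: "paint", startTime: 420 },
  { name: "first-contentful-paint", entryType: "paint", startTime: 610 },
];

console.log(getFCP(entries)); // 610
console.log(getFCP(entries.slice(0, 1))); // undefined
```

In a page, the same lookup could run over `list.getEntries()` inside the observer callback.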

However, not all the gaps in initial page load performance have been filled. To see why, suppose a site is running an experiment and trying to measure its impact on performance. If the experiment improves the experience of half of the users by 1 second but worsens it for the other half by 2 seconds, it’s easy to conclude that it’s performance-negative: on average, page loads are 0.5 seconds slower. However, if half of the users who are now worse off leave the site before we can measure their experiences, that could lead us to believe that the experiment is performance-neutral, when in fact it’s so bad that it caused us to lose a quarter of our users!

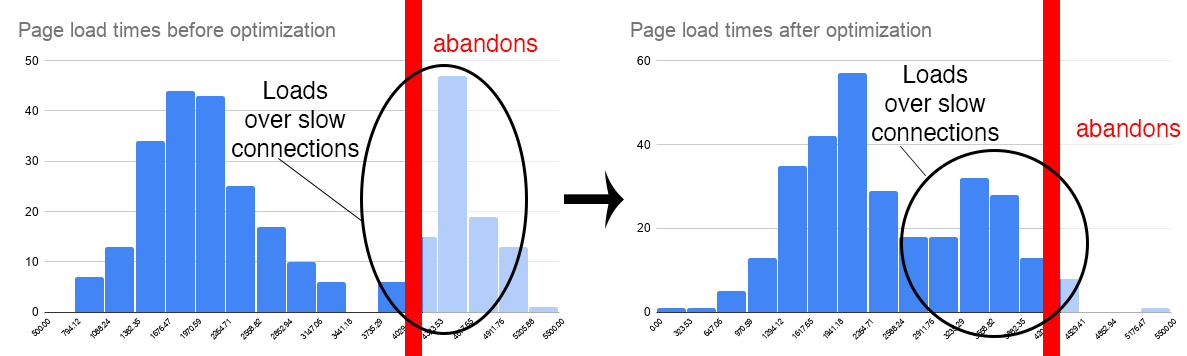
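The arithmetic in this example can be checked with a short sketch. Each hypothetical `Cohort` records its share of users, the change in load time, and the fraction of the cohort whose data is actually reported; the measured average only weighs users who were measured.

```typescript
// A hypothetical cohort of users in an A/B experiment.
interface Cohort {
  share: number;        // fraction of all users in this cohort
  deltaSeconds: number; // change in load time (negative means faster)
  measured: number;     // fraction of the cohort whose data gets reported
}

// Weighted average of deltaSeconds, using the given weight per cohort.
function weightedMean(cohorts: readonly Cohort[], weight: (c: Cohort) => number): number {
  let sum = 0;
  let total = 0;
  for (const c of cohorts) {
    sum += weight(c) * c.deltaSeconds;
    total += weight(c);
  }
  return sum / total;
}

// What actually happened to all users, including those who left early.
const trueMeanDelta = (cohorts: readonly Cohort[]): number =>
  weightedMean(cohorts, (c) => c.share);

// What analytics sees: users who left before being measured are missing.
const measuredMeanDelta = (cohorts: readonly Cohort[]): number =>
  weightedMean(cohorts, (c) => c.share * c.measured);

// Fraction of all users whose visit was never measured.
const unmeasuredShare = (cohorts: readonly Cohort[]): number =>
  cohorts.reduce((sum, c) => sum + c.share * (1 - c.measured), 0);

const experiment: Cohort[] = [
  { share: 0.5, deltaSeconds: -1, measured: 1 },  // 1 s faster, all measured
  { share: 0.5, deltaSeconds: 2, measured: 0.5 }, // 2 s slower, half leave first
];

console.log(trueMeanDelta(experiment));     // 0.5 (a regression)
console.log(measuredMeanDelta(experiment)); // 0 (looks neutral)
console.log(unmeasuredShare(experiment));   // 0.25 (a quarter of users lost)
```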

The following image illustrates a similar scenario, in which a performance optimization means that fewer of the slower page loads are abandoned. Those slow loads now show up in the data, so the optimization could be perceived as a regression:

The above examples are simple, and there are possible mitigations: looking at various percentiles instead of just the average, looking at the volume of FCP values received, and so on. Nonetheless, data from real users is already messy and hard to reason about, and these examples show that users leaving the page too early can blindside an unsuspecting developer.
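Both mitigations can be sketched in a few lines, assuming you know how many page loads were assigned to each experiment arm and have the FCP samples each arm actually reported. The `Arm` type, the sample values, and the nearest-rank percentile method are illustrative choices, not part of any particular analytics tool.

```typescript
// Nearest-rank percentile (0 < p <= 100) of a non-empty list of samples.
function percentile(samples: readonly number[], p: number): number {
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1]!;
}

// Hypothetical per-arm data: how many page loads were assigned to the arm,
// and the FCP values (ms) that were actually reported back.
interface Arm {
  assignedLoads: number;
  fcpSamples: number[];
}

// Share of assigned loads that reported an FCP. A drop relative to the
// control arm suggests that more loads are ending before FCP.
const reportingRate = (arm: Arm): number => arm.fcpSamples.length / arm.assignedLoads;

const control: Arm = {
  assignedLoads: 10,
  fcpSamples: [800, 900, 1000, 1100, 1200, 1300, 1400, 1500, 1600, 1700],
};
const treatment: Arm = {
  assignedLoads: 10,
  fcpSamples: [300, 400, 500, 600, 700, 2200, 2300, 2400],
};

console.log(percentile(control.fcpSamples, 50), percentile(control.fcpSamples, 90), reportingRate(control));       // 1200 1600 1
console.log(percentile(treatment.fcpSamples, 50), percentile(treatment.fcpSamples, 90), reportingRate(treatment)); // 600 2400 0.8
```

Here the treatment arm's median improves, but its 90th percentile regresses and 20% of its loads never report an FCP, which is the kind of signal an average alone would hide.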

What is an abandonment?

The term ‘abandonment’ is heavily overloaded and already has some very business-specific connotations. For instance, in some contexts abandonment is defined as the percentage of users who visit a shopping site but do not end up making a purchase. It could also be defined as the proportion of visitors who add items to their cart but ultimately do not follow through with a purchase (they abandon their carts). For the purposes of this article, we define abandonment as a performance-specific concept.

In this article, we define abandonment using FCP as the endpoint: we say a page load was abandoned if the user left the page before it reached FCP. Some developers may want to consider a different endpoint. Of particular interest here would be a developer-annotated endpoint. For example, an analytics provider may be interested in knowing the percentage of page visits where the user leaves before the analytics script has loaded. In these cases, the analytics provider would not have a chance to send the performance data back to the server. The provider could mark a page load as ‘not abandoned’ once its script has been loaded into the page. The browser could then report the percentage of page visits that were not marked, which would tell the provider how often their script was not loaded.
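To make the proposed annotation concrete, here is a sketch of the aggregate the browser might report. Everything here is hypothetical: no browser currently exposes such annotations, and the `AnnotatedVisit` record and `unmarkedPercent` function are illustrative names only.

```typescript
// Hypothetical record of one page visit, as a browser might aggregate it if
// developer-annotated endpoints existed. No browser exposes this today.
interface AnnotatedVisit {
  url: string;
  markedNotAbandoned: boolean; // true once the analytics script ran and marked the load
}

// Percentage of visits that were never marked, i.e. visits the analytics
// provider had no chance to report on. Returns 0 for an empty list.
function unmarkedPercent(visits: readonly AnnotatedVisit[]): number {
  if (visits.length === 0) return 0;
  const unmarked = visits.filter((visit) => !visit.markedNotAbandoned).length;
  return (100 * unmarked) / visits.length;
}

const visits: AnnotatedVisit[] = [
  { url: "https://example.com/", markedNotAbandoned: true },
  { url: "https://example.com/article", markedNotAbandoned: true },
  { url: "https://example.com/article", markedNotAbandoned: false }, // left before the script loaded
  { url: "https://example.com/search", markedNotAbandoned: true },
];

console.log(unmarkedPercent(visits)); // 25
```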

The case for FCP

The first principle informing the definition is that a page that did not reach FCP could not have been useful to the user. If the user is just seeing a blank screen (or the pixels from the previous page they were looking at), and then they leave the page before such pixels are updated, then they could not have gained any value from the experience. And if they just see the background color of the new site, that is also not very useful, as they see some first paint but not a contentful one. Therefore, our premise is that a user must reach FCP in order to gain value from the page being visited. If the user leaves before reaching FCP, then we can consider that page to have been abandoned.

There are other potential candidates worth mentioning here. One alternative would be to use onload, but onload is not tied to the user experience, and when it fires can vary widely even among sites with similar performance characteristics, so it is not a reliable endpoint.

Another candidate would be Largest Contentful Paint (LCP), but it can be a moving target. Per its definition, LCP is only considered final once the page is backgrounded or unloaded, or an input occurs. Before then, the LCP candidate can change, and so can its timestamp. So when users leave the page early, an LCP value may exist, but it may not represent the main content that would have been painted had the user stayed. It would be hard to know whether the candidate reported as the LCP would actually have been the ‘real’ LCP had the page load not been interrupted, especially for pages that are not static. While LCP aims to capture when the main content is visible to the user, it is not defined in a way that suits our abandonment definition.
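The moving-target problem can be illustrated with a sketch. Browsers emit a new `largest-contentful-paint` entry whenever a larger element is painted, so the LCP a page can observe is the latest candidate emitted before the user leaves. The `LcpCandidate` type and `lcpAtLeave` function below are hypothetical simplifications of the DOM's `LargestContentfulPaint` entries.

```typescript
// Simplified, hypothetical stand-in for the DOM's LargestContentfulPaint entry.
interface LcpCandidate {
  startTime: number; // ms when this candidate was painted
  size: number;      // rendered area of the element, in square pixels
}

// The LCP a page can observe if the user leaves at leaveTime: the latest
// candidate emitted at or before that moment, or undefined if none yet.
function lcpAtLeave(candidates: readonly LcpCandidate[], leaveTime: number): LcpCandidate | undefined {
  let latest: LcpCandidate | undefined = undefined;
  for (const candidate of candidates) {
    if (candidate.startTime <= leaveTime && (latest === undefined || candidate.startTime > latest.startTime)) {
      latest = candidate;
    }
  }
  return latest;
}

const candidates: LcpCandidate[] = [
  { startTime: 400, size: 2000 },   // e.g. a small heading paints first
  { startTime: 2600, size: 90000 }, // the main image paints later
];

console.log(lcpAtLeave(candidates, 1500)); // { startTime: 400, size: 2000 }
console.log(lcpAtLeave(candidates, 5000)); // { startTime: 2600, size: 90000 }
```

If the user leaves at 1.5 seconds, the observed LCP is the small early candidate, even though the main content would have been painted at 2.6 seconds.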

Finally, another alternative would be to use the first input as a signal that the page was not abandoned. This is a very close contender, but there are cases where it is not advisable. For instance, if a user visits an encyclopedic site, reads the answer to the question they were looking up, and then leaves, the visit would be counted as an abandonment when in fact it was very successful: the user found the answer effortlessly.
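The encyclopedic example can be expressed as a comparison of the two definitions. The `Visit` shape and both predicates are hypothetical simplifications: a visit with an FCP but no input counts as abandoned under a first-input definition, but not under the FCP-based one.

```typescript
// Hypothetical, simplified summary of one page visit.
interface Visit {
  fcp?: number;        // ms; undefined if FCP was never reached
  firstInput?: number; // ms; undefined if the user never clicked, tapped, or typed
}

// Abandoned under the FCP-based definition used in this article.
const abandonedByFCP = (visit: Visit): boolean => visit.fcp === undefined;

// Abandoned under an alternative definition based on first input.
const abandonedByFirstInput = (visit: Visit): boolean => visit.firstInput === undefined;

// The encyclopedic visit: content painted at 900 ms, the user read the
// answer and left without interacting.
const readAndLeft: Visit = { fcp: 900 };

console.log(abandonedByFCP(readAndLeft));        // false
console.log(abandonedByFirstInput(readAndLeft)); // true
```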

Which navigations are considered?

The second principle is that we should only consider the user’s explicit navigations. In many cases, the user visits a URL but ends up at a different one, so we want to exclude redirects, including client-side redirects. However, it is hard to enumerate all the ways in which a site can redirect, so we tackle this problem differently. For the purposes of our abandonment metric, we currently only consider page loads for which the user explicitly navigates into the page and explicitly navigates away from it. This excludes many edge cases, including client-side redirects and bot scripts. It also excludes some page loads that would otherwise be considered valid, but restricting ourselves to a more manageable and less noisy subset seems a reasonable tradeoff, despite losing part of the data. Sites tracking their own abandonments could apply cleverer heuristics here, for instance by filtering out URLs they know to be redirects.

Finally, the third principle in our definition is that FCP cannot be reached if the user was never trying to look at the site. If the user chooses to open a link in a new tab, never clicks on the tab to show its contents, and then closes the tab to leave the page, then FCP will not be reached but it also never had the chance of doing so. Therefore, we only consider page loads for which the page was in the foreground for a nonzero amount of time. This excludes a small subset of page loads but is nonetheless important to obtain more reliable results.

To recap, we consider the following types of page loads only:

- The user explicitly navigated into and away from the page.

- Page was in the foreground at some point in time.

Out of those page loads, we define abandonmentCount as the number of such loads which never reached FCP, and we similarly define reachedFCPCount as the number of such page loads which reached FCP. Then we define the abandonment rate of a page as the following ratio:

$$
\text{abandonment rate} = \frac{\text{abandonmentCount}}{\text{abandonmentCount} + \text{reachedFCPCount}}
$$
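A minimal sketch of the filtering and the ratio above, assuming a hypothetical per-page-load record. In Chrome these signals come from browser internals, so the `PageLoad` fields here are illustrative only.

```typescript
// Hypothetical per-page-load record; the field names are illustrative only.
interface PageLoad {
  userNavigatedIn: boolean;   // the user explicitly navigated into the page
  userNavigatedAway: boolean; // the user explicitly navigated away from it
  foregroundMs: number;       // total time the page spent in the foreground
  fcp?: number;               // FCP in ms; undefined if never reached
}

// Only explicit navigations that were in the foreground at some point count.
function isEligible(load: PageLoad): boolean {
  return load.userNavigatedIn && load.userNavigatedAway && load.foregroundMs > 0;
}

// abandonmentCount / (abandonmentCount + reachedFCPCount) over eligible loads.
// Returns NaN when there are no eligible loads.
function abandonmentRate(loads: readonly PageLoad[]): number {
  let abandonmentCount = 0;
  let reachedFCPCount = 0;
  for (const load of loads) {
    if (!isEligible(load)) continue;
    if (load.fcp === undefined) {
      abandonmentCount++;
    } else {
      reachedFCPCount++;
    }
  }
  const total = abandonmentCount + reachedFCPCount;
  return total === 0 ? Number.NaN : abandonmentCount / total;
}

const loads: PageLoad[] = [
  { userNavigatedIn: true, userNavigatedAway: true, foregroundMs: 1200, fcp: 700 },
  { userNavigatedIn: true, userNavigatedAway: true, foregroundMs: 900, fcp: 450 },
  { userNavigatedIn: true, userNavigatedAway: true, foregroundMs: 2500, fcp: 1800 },
  { userNavigatedIn: true, userNavigatedAway: true, foregroundMs: 3000 },  // left before FCP
  { userNavigatedIn: true, userNavigatedAway: true, foregroundMs: 0 },     // background tab, never shown
  { userNavigatedIn: false, userNavigatedAway: true, foregroundMs: 500 },  // e.g. reached via a redirect
];

console.log(abandonmentRate(loads)); // 0.25
```

Of the six loads, only the first four are eligible, and one of them never reached FCP, giving a rate of 1/4.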

Abandonments in Chrome

As part of this research, we gathered data in Chrome on the abandonment rates it sees, focusing on the two most popular operating systems running Chrome. Our definition of abandonment is neither final nor perfect, but it’s worth highlighting the best estimates we currently have. The first table shows overall abandonment rates on Android and Windows:

| | Android | Windows |
|---|---|---|
| Overall abandonment rate | 8.6% | 2.5% |

The above table is useful to understand how prevalent abandonment is, but is not useful in determining how performance impacts abandonment. To do so, we need to further restrict our query. In our second table we look at abandonment rates when restricted to the following page loads:

- The user explicitly navigated into and away from the page (unchanged from our abandonment definition).

- Page load started in the foreground and stayed in the foreground for more than N milliseconds.

- Page load either did not reach FCP or reached an FCP value greater than N milliseconds.

The rationale for this is that we want to consider only slow page loads, as measured by FCP. In order to look at only slow pages, we need to look at the FCP value. However, it is hard to reason about the FCP of pages that either start in the background or go into the background too early, so we restrict ourselves to only consider pages that start in the foreground and stay in the foreground for long enough to be considered slow, for some threshold of slow. This table shows what we’d expect: page loads that are slower tend to be abandoned more:

| N (ms): abandonment after foreground duration > N ms, with an FCP > N ms | Android | Windows |
|---|---|---|
| 500 | 8.1% | 3.2% |
| 1000 | 10.3% | 4.1% |
| 2000 | 13.0% | 5.2% |
| 3000 | 14.4% | 6.1% |
| 4000 | 17.3% | 7.2% |
| 10000 | 33.1% | 14.0% |
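The restrictions behind the second table can be sketched the same way, again with hypothetical field names: a load counts toward threshold N only if it was an explicit navigation, started in the foreground, stayed there for more than N ms, and either never reached FCP or reached it after N ms. Evaluating `slowLoadAbandonmentRate` at each value in the first column corresponds to one row of the table.

```typescript
// Hypothetical per-page-load record; the field names are illustrative only.
interface ForegroundLoad {
  userNavigatedIn: boolean;
  userNavigatedAway: boolean;
  startedInForeground: boolean;
  initialForegroundMs: number; // time in the foreground before first being hidden (or leaving)
  fcp?: number;                // FCP in ms; undefined if never reached
}

// Whether a load counts toward the "slow" population for threshold n (ms).
function isSlowLoad(load: ForegroundLoad, n: number): boolean {
  return (
    load.userNavigatedIn &&
    load.userNavigatedAway &&
    load.startedInForeground &&
    load.initialForegroundMs > n &&
    (load.fcp === undefined || load.fcp > n)
  );
}

// Abandonment rate among slow loads; NaN if no load qualifies.
function slowLoadAbandonmentRate(loads: readonly ForegroundLoad[], n: number): number {
  const slow = loads.filter((load) => isSlowLoad(load, n));
  if (slow.length === 0) return Number.NaN;
  const abandoned = slow.filter((load) => load.fcp === undefined).length;
  return abandoned / slow.length;
}

const sample: ForegroundLoad[] = [
  { userNavigatedIn: true, userNavigatedAway: true, startedInForeground: true, initialForegroundMs: 5000, fcp: 1500 },
  { userNavigatedIn: true, userNavigatedAway: true, startedInForeground: true, initialForegroundMs: 2500 },           // left before FCP
  { userNavigatedIn: true, userNavigatedAway: true, startedInForeground: true, initialForegroundMs: 4000, fcp: 600 }, // fast FCP
  { userNavigatedIn: true, userNavigatedAway: true, startedInForeground: true, initialForegroundMs: 700 },            // left too soon to count
  { userNavigatedIn: true, userNavigatedAway: true, startedInForeground: false, initialForegroundMs: 0, fcp: 3000 },  // opened in background
];

console.log(slowLoadAbandonmentRate(sample, 1000)); // 0.5
console.log(slowLoadAbandonmentRate(sample, 2000)); // 1
```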

The data from the above tables enables us to draw some interesting insights:

- Abandonment rates on Windows are less than half of those on Android, even when restricting our queries to slow page loads. This suggests that user expectations are very different on mobile than on desktop.

- The gradual increase in rates in the second table shows that users can be pretty patient: on Android, only 33% of page loads that had been in the foreground for 10 seconds without reaching FCP ended up abandoned (14% on Windows), which is lower than I’d expect.

- Sites with slow FCPs will generally collect more biased performance data if they overlook abandonment, since their slowest loads are the ones most likely to never be measured.

Open questions

I want to end this article with some open questions, and I look forward to conversations around them! Feel free to reach out to speed-metrics-dev@chromium.org with any comments.

- Are there ways we can improve the definition of abandonment? Focusing on FCP means that measurement gaps persist: analytics may not be ready by the time FCP occurs, so there could still be a gap for page loads that end between FCP and the moment analytics is ready to report. The developer annotations mentioned above could address this, but that approach seems hard to get right, especially when various third parties are involved in a single page load. Are there better solutions that we’ve overlooked?

- Previously, Chrome looked at definitions of abandonment restricted to page loads that begin in the foreground. The initial definition presented today is not restricted in this way. However, we did use the previous definition to show the correlation with FCP. What are the pros and cons of each choice?

- Does the data presented support the need to expose this to web developers? If so, how could we do this? The main problem is that a page cannot announce where to report abandonment rates, as it could be abandoned before the browser even receives that information. One possible solution would be an HTTP response header, but if we require the header before considering a page, we’ll miss pages that are abandoned before all headers are received. Ideally, then, this would require some form of caching, like what was proposed in Origin Policy. However, that proposal is currently stalled.