Kevin Farrugia (@imkevdev) is an independent web performance consultant, currently leading the performance initiative at Tipico.

Improving web performance leads to better conversions and increased profits. There are numerous case studies on this, and even non-technical product managers often know it. So why is performance so often tackled as a last-minute, fire-fighting emergency, possibly after the team has been swamped with negative customer feedback about the website's poor performance?

I have been lucky enough to work on the performance aspect of a diverse range of projects over the years, each with its own particular setup and challenges, but with experience I have shifted from spending most of my time optimising the code to optimising the process. My background is in software development, so naturally I am inclined to dive into the code, find the bottlenecks, propose or implement alternatives and then marvel at my own work and the improved performance scores. As a consultant joining an already established team of highly skilled engineers, I was often viewed sceptically, so perhaps I felt the need to “prove myself” to my peers with my technical know-how.

But the manager who engaged me was, in most cases, not looking for quick wins but for the proverbial bigger picture. My job was to consistently improve the performance of the website (sometimes multiple websites), and I couldn't do this alone, at least not by pushing my own code. A week after I had implemented some quick wins, I would see the good Lighthouse scores vanish because someone had pushed work containing unoptimised images. Instead, my real role was to enable everyone to contribute to improving the website's performance.

Competition is good

While almost everyone knew that good performance leads to good business results, not everyone realised that the website they were working on was far from fast. Most developers were working on high-end machines with high-speed internet connections, so performance was not something they paid much attention to. This is not to discredit the developers, who are experts in their own domains, but they were not accustomed to treating performance as a first-class citizen.

The first time I presented my research, I compared the load time of the website we were building with those of our biggest competitors and of the current version of the website it would eventually replace. Our new website was the slowest. This struck a chord with the developers and some of upper management, and performance started to become a priority.

Comparing yourself to your competitors not only motivates you but also eliminates excuses. If your competitors can build a fast website, then you can most likely build one too. How often have I heard a client say that their website cannot be optimised because it has a complex API or is image-heavy? Chances are, it can be optimised too.

Measuring

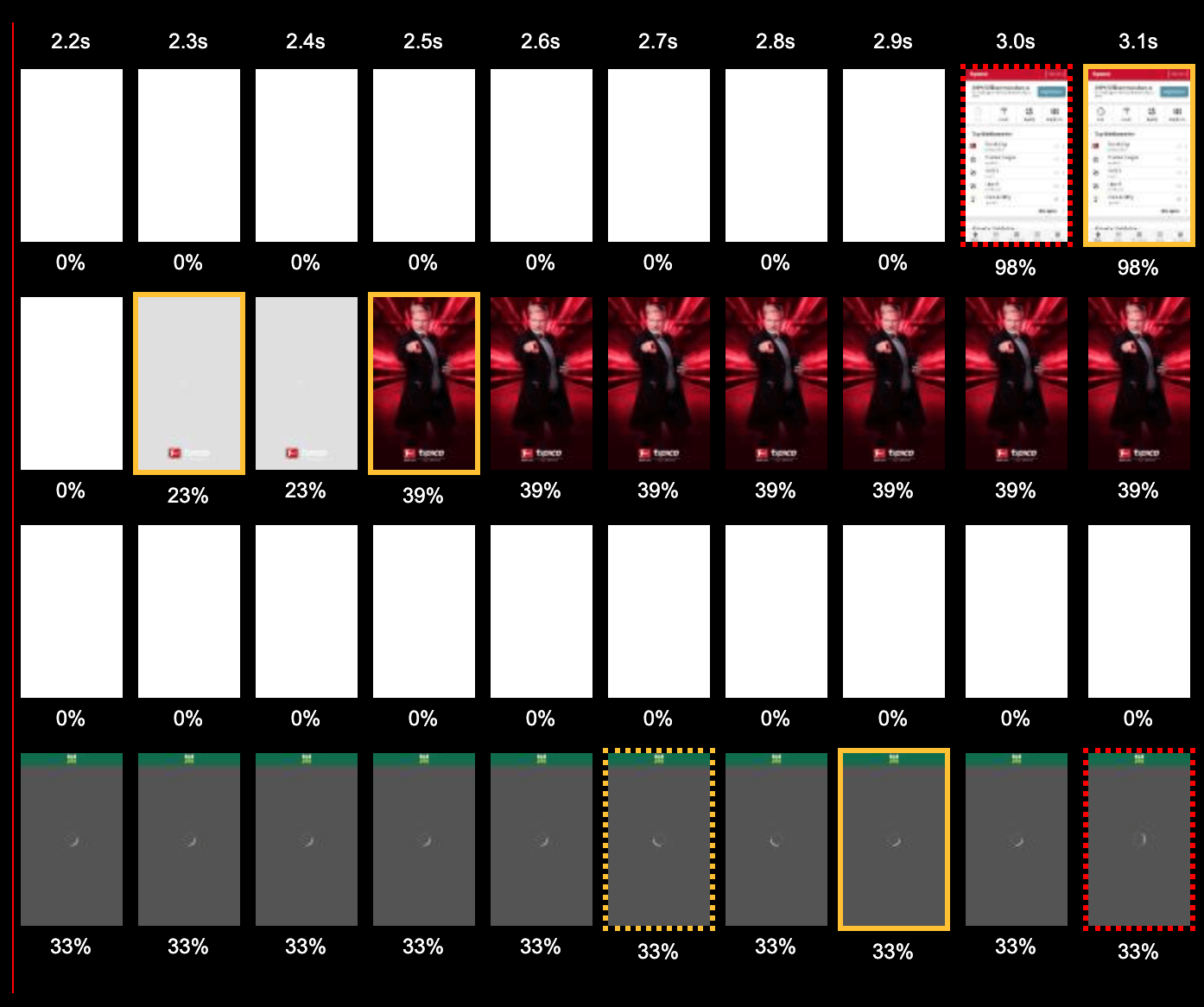

Once we knew that the website we were building needed attention, we had to understand where we stood and be able to measure any progress we made. To do this reliably, we tested the standard metrics (Largest Contentful Paint (LCP), First Meaningful Paint (FMP), Total Blocking Time (TBT), Page Load and Start Render) against our data, using different environments over a period of time. I believe this phase was critical: we needed to understand how each metric reacts to changes and to build confidence in the numbers being reported.

For us, a good metric is one which is stable, elastic and orthogonal. Stable means the metric shows little or no variance when run under the same conditions; elastic means a change in the website's performance produces a proportional change in the metric; and orthogonal means each metric represents a single aspect of the website's performance. Having multiple metrics measuring the same thing can quickly become confusing, especially if their values do not change proportionately.

For this reason, while Google's Core Web Vitals are a good starting point, in our case they did not fully reflect the user's experience. Defining, and in some cases creating, our own metrics helped developers understand what was being measured and trust the values being reported; with too many false positives, people quickly get demotivated. And if, after some time, we began to suspect that a metric was no longer reliable, we immediately got to work on improving it or finding a replacement.

Reporting

These metrics are measured daily using lab tests for a variety of pages and scenarios (new user, repeat user, no cookie consent, etc.). In addition to the lab tests, we also set up real user monitoring (RUM) for our production environments.

These metrics were displayed on “always-on” dashboards that plotted the measurements over time, and they were also integrated with our CI environment and source control. Noteworthy changes in any of these metrics were reported directly to the responsible team in its Slack channel. This was effective at highlighting performance gains or losses following a deployment, and it allowed the team to take ownership if a problem arose. As a result, developers were keen to follow the progress of their own work and shifted to a proactive approach to performance. “Is this bundle going to hurt our performance?” and “Should I lazy-load this module?” are questions that previously weren't being asked.
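The exact rule for a "noteworthy change" is not described, so here is a minimal sketch assuming one simple approach: compare the latest measurement with the median of recent baseline runs and flag it if it moves by more than a fixed fraction. The 10% `THRESHOLD`, the function names and the message format are all hypothetical, and the check assumes a timing metric in milliseconds where lower is better. The posting helper uses a Slack incoming webhook, which accepts a JSON body with a `text` field; the webhook URL is whatever Slack issues for the team's channel and is not called in the example.

```python
import json
import urllib.request
from statistics import median
from typing import Optional

# Hypothetical rule: flag a change if the metric moves by more than 10%
# relative to the median of recent baseline runs.
THRESHOLD = 0.10


def noteworthy_change(metric: str, baseline: list[float], current: float,
                      threshold: float = THRESHOLD) -> Optional[str]:
    """Return a message if `current` differs from the baseline median by more
    than `threshold` (a fraction), else None. Assumes a timing metric in ms,
    where lower values are better (e.g. LCP)."""
    reference = median(baseline)
    delta = (current - reference) / reference
    if abs(delta) <= threshold:
        return None
    direction = "regressed" if delta > 0 else "improved"
    return f"{metric} {direction} by {abs(delta):.0%} ({reference:.0f} -> {current:.0f} ms)"


def post_to_slack(webhook_url: str, text: str) -> None:
    """Send a message to a Slack incoming webhook (JSON body with a 'text' field)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        response.read()


# Made-up LCP values: the last three daily runs, then a post-deployment run.
message = noteworthy_change("LCP", [2500.0, 2450.0, 2550.0], 2900.0)
print(message)  # LCP regressed by 16% (2500 -> 2900 ms)
```

Using the median of several baseline runs, rather than only the previous run, keeps a single noisy measurement from triggering a false alarm, which matters given how quickly false positives demotivate people.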

Getting the developers excited about performance helped us progress from a technical perspective, but it was fundamental that non-technical stakeholders were also invested in the website's performance. While lab tests and fine-grained metrics are effective at identifying when and where the website's performance changes, this level of detail may be overwhelming or irrelevant for upper management. We therefore worked on extracting a single number, or score, from our RUM reports: one value that combines different metrics and represents the experience of most users (the 75th percentile).

The beauty of a single number that combines all pages and users is that it moves in proportion to the changes with the greatest impact on the website. Because the metric combined new and repeat users, if the vast majority of the website's users were repeat visitors, improving first visits would produce only small gains for the business and correspondingly small improvements in the metric. Conversely, improving repeat visits would affect a much larger number of users, and this would be reflected in a proportionate change in the metric. This metric was reported to the teams and to C-level executives on a fortnightly basis.
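The exact formula behind this score is not described, so the following is only one possible way to build such a number, under assumptions stated here. For each user segment, take the 75th percentile of each metric, divide it by a per-metric target so that different metrics become comparable, and average the ratios; then weight each segment by its share of traffic, so that a segment with more users moves the combined score proportionally more. The targets in `TARGETS`, the segment names and the traffic shares are hypothetical.

```python
from statistics import quantiles

# Hypothetical per-metric targets (ms): a p75 equal to its target scores 1.0.
TARGETS = {"lcp": 2500.0, "tbt": 200.0}


def p75(samples: list[float]) -> float:
    """75th percentile, linearly interpolated between data points."""
    return quantiles(samples, n=4, method="inclusive")[2]


def segment_score(samples_by_metric: dict[str, list[float]],
                  targets: dict[str, float] = TARGETS) -> float:
    """Average of p75 / target across metrics; lower is better."""
    ratios = [p75(samples_by_metric[name]) / target for name, target in targets.items()]
    return sum(ratios) / len(ratios)


def combined_score(segments: dict[str, dict[str, list[float]]],
                   traffic: dict[str, float]) -> float:
    """Weight each segment's score by its share of traffic."""
    total = sum(traffic.values())
    return sum(segment_score(segments[name]) * share / total
               for name, share in traffic.items())


# Made-up RUM samples (ms) for two segments.
segments = {
    "new": {"lcp": [1000.0, 2000.0, 3000.0, 5000.0, 6000.0],
            "tbt": [100.0, 200.0, 300.0, 400.0, 500.0]},
    "repeat": {"lcp": [500.0, 1000.0, 1250.0, 1250.0, 2000.0],
               "tbt": [50.0, 60.0, 70.0, 100.0, 200.0]},
}
print(combined_score(segments, {"new": 0.2, "repeat": 0.8}))  # 0.8 (approximately)
```

With 80% of traffic coming from repeat users, any change to the repeat segment's score is multiplied by 0.8 in the combined value, while the same change to the new-user segment is multiplied by only 0.2, which is the proportional behaviour described above.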

So, where are we now?

Creating a cultural change is not easy, but by getting all stakeholders on board we were able to achieve some fantastic results. Most developers are now aware of, and actively consider, how their work may affect performance, and the company has set the website's performance as one of its main KPIs for the upcoming year.

And what about the website itself?

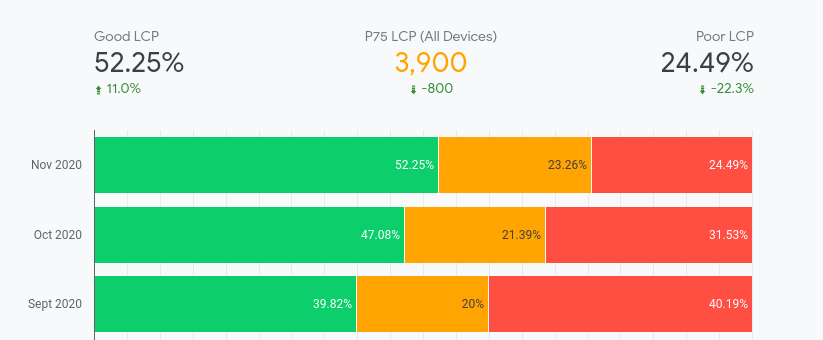

Well, we recently launched the website to the public, and while there is still a lot of work to be done, it is a massive step forward from where we started. The website is the fastest it has ever been, is improving continuously month on month, and has achieved the fastest load times among all major competitors in the industry for every user segment tested.

And just as the case studies predicted, customer feedback has turned positive and conversions have improved markedly.