Erwin Hofman (@blue2blond) moved from building his own CMS to starting an agency and helping out other (e-commerce) agencies and merchants with web performance. He also co-founded RUMvision to provide merchants and agencies with pagespeed insights.

Tracking UX data and metrics is easier than ever, for example by using Google’s Web Vitals library and sending metrics to an endpoint of choice. RUMvision was built on top of this library, and the attribution build of the Web Vitals library is also the foundation of this article.
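As a rough illustration of "sending metrics to an endpoint of choice", here is a minimal sketch. It assumes the web-vitals package is resolvable as `web-vitals/attribution` (via a bundler or import map); the `/rum` endpoint and the `toBeaconBody` helper are hypothetical names, and this is not RUMvision's actual snippet.

```javascript
// Hypothetical collector URL (an assumption, replace with your own endpoint).
const RUM_ENDPOINT = '/rum';

// Hypothetical helper: turn a Web Vitals metric object into a small JSON string.
function toBeaconBody(metric, pageUrl) {
  return JSON.stringify({
    name: metric.name,               // e.g. "LCP"
    value: Math.round(metric.value), // milliseconds for LCP
    rating: metric.rating,           // "good" | "needs-improvement" | "poor"
    id: metric.id,
    page: pageUrl,
  });
}

// Browser-only wiring; skipped in non-browser runtimes.
if (typeof window !== 'undefined' && typeof navigator !== 'undefined') {
  import('web-vitals/attribution').then(({ onLCP }) => {
    onLCP((metric) => {
      // sendBeacon queues the request so it can survive page unload.
      navigator.sendBeacon(RUM_ENDPOINT, toBeaconBody(metric, location.href));
    });
  });
}
```

The payload builder is kept separate from the browser wiring so it can be checked in isolation.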

As a matter of fact, while developing RUMvision and especially its tracking snippet, some APIs changed along the way. From one day to the other, we noticed that the buffered flag could be used for specific PerformanceObserver goals. That made things a bit easier to gather data retroactively (such as Element Timing and Server Timing data).
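A minimal sketch of what the buffered flag looks like in practice. The `observeBuffered` wrapper is a hypothetical helper name; `PerformanceObserver`, `supportedEntryTypes` and the `buffered` option are standard browser APIs, but which entry types are available depends on the browser.

```javascript
// Observe one entry type, including entries recorded before this call.
// Returns false when the runtime does not support that entry type.
function observeBuffered(type, onEntry) {
  if (typeof PerformanceObserver === 'undefined') return false;
  const supported = PerformanceObserver.supportedEntryTypes || [];
  if (!supported.includes(type)) return false;

  const observer = new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) onEntry(entry);
  });
  // `buffered: true` replays entries that were queued before observe() ran.
  observer.observe({ type, buffered: true });
  return true;
}

// Element Timing entries only exist for elements carrying an
// `elementtiming` attribute, and only in browsers that support the API.
observeBuffered('element', (entry) => {
  console.log(entry.identifier, Math.round(entry.renderTime));
});
```

Because of the feature check, a late-loading tracking snippet can call this without throwing in browsers that lack Element Timing.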

Largest Contentful Paint

But that isn’t today’s subject. Largest Contentful Paint (LCP) is, or more precisely an LCP breakdown. With Core Web Vitals, Google did a great job raising awareness of the basic nuances of pagespeed and UX. LCP is one of the Core Web Vitals metrics, and the LCP element is often an image.

It’s clear that images are the most common type of LCP content, with the img element representing the LCP on 42% of mobile pages

And one could use additional attributes to improve the rendering of the LCP candidate.

Native lazyloading

For example, by applying loading="lazy" to non-LCP candidates, or at least to images that aren’t visible above the fold. You would be improving performance as well, as you no longer need JavaScript libraries for this. What’s not to like?

Meet fetchpriority

fetchpriority is another attribute in the same category. Without diving too much into detail: Images are not loaded at high priority by default, which is good. There are more important things, such as overall layout and thus stylesheets.

However, images having a low priority isn’t ideal for our LCP candidate. If only developers could change the priority of specific elements to help browsers out, to boost the priority once detected. And that specifically is what fetchpriority does.
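To make the two attributes concrete, here is a minimal sketch of a template helper that picks one hint per image. The `imgTag` function and its `role` values are hypothetical; only the `loading="lazy"` and `fetchpriority="high"` attributes themselves come from the platform.

```javascript
// Escape a value for use inside a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;');
}

// Hypothetical helper. `role` is one of:
//   'lcp'        -> the LCP candidate, gets fetchpriority="high"
//   'below-fold' -> not visible above the fold, gets loading="lazy"
//   anything else -> no hint, browser defaults apply
function imgTag({ src, alt, role }) {
  const attrs = [`src="${escapeAttr(src)}"`, `alt="${escapeAttr(alt)}"`];
  if (role === 'lcp') attrs.push('fetchpriority="high"');
  else if (role === 'below-fold') attrs.push('loading="lazy"');
  return `<img ${attrs.join(' ')}>`;
}

console.log(imgTag({ src: '/hero.webp', alt: 'Hero', role: 'lcp' }));
console.log(imgTag({ src: '/footer.webp', alt: 'Footer', role: 'below-fold' }));
```

Keeping the two hints mutually exclusive avoids accidentally lazy-loading the LCP image.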

fetchpriority applied to the image by Addy Osmani

Easy to implement and really a no-brainer. The community even had the chance to jump in and express their naming-preference via a Twitter poll. But despite being a no-brainer, only 0.03% of pages use fetchpriority=high on their LCP elements because it’s relatively new.

These became the ingredients of this article: I started testing the LCP differences between lazyloading and not lazyloading your LCP image. And then I became curious about the fetchpriority outcomes too.

LCP fetchpriority nuances and scope

As stated before, I would consider fetchpriority a no-brainer. Sure, you shouldn’t add it to all your resources. But solely applying it to your LCP image won’t hurt. But should you always expect an LCP improvement when doing so?

In my quest, I just wanted to know the exact wins. But I then stumbled upon other unexpected outcomes as well. Outcomes that made sense when thinking about it. But still unexpected nevertheless.

Scope of this article

I should add that the gains and even breakdown-numbers will depend on many things. Let’s consider these additional nuances when looking at RUM data:

- Are we mixing up unique and successive pageviews (and thus cached DNS lookup & resources versus uncached)?

- Were there any render blocking resources at all?

- Does the shop have an international audience? This could result in wider device and internet speed distribution amongst visitors, or visitors being further away from the origin server.

- Did adding/removing the fetchpriority attribute cause a server-side cache invalidation that could result in a higher TTFB?

- Did the visitor visit the page before, i.e. was the image in the cache already?

- Did the size of the image(s) change over time? Unfortunately, platforms tend to host images on subdomains without specifying Timing-Allow-Origin, which prevents us from keeping track of this.

I will only dive into the unique versus successive pageview nuance though. Others didn’t cause notable differences, so were ignored in this article.

Optimized sites

I used different sites and shops to perform my tests. Some of them didn’t have fetchpriority implemented yet, so this was a perfect moment.

In other cases, I removed it, deliberately regressing the LCP. However, as all these cases were well-optimized already, LCP would still be well within Core Web Vitals thresholds.

Because of the healthy numbers across these sites, I’m using the 80th percentile mobile data in this article.
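For readers who want to reproduce a percentile on their own RUM data, here is a minimal sketch using the nearest-rank method. This is one common definition and an assumption on my part; the monitoring tool behind this article may interpolate differently. The sample values are made up.

```javascript
// Nearest-rank percentile: the smallest value such that at least
// p percent of the samples are less than or equal to it.
function percentile(values, p) {
  if (values.length === 0) return NaN;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Made-up LCP samples in milliseconds.
const lcpSamples = [1200, 900, 3100, 1800, 2500];
console.log(percentile(lcpSamples, 80));
```

Using a higher percentile than the usual 75th simply looks further into the slower tail of the same distribution.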

Platforms and critical CSS

Tested sites are running on either Lightspeed using Cloudflare (by default as it’s a SaaS) or a custom CMS without a CDN. In both cases, there are no notable TTFB fluctuations on the used percentile, which is good as this prevents our data from being skewed. In the end, it’s about the image related attribution data, so we won’t be looking at the TTFB anyway.

The custom CMS cases are using critical CSS, which -in these cases- means that there were no other render- nor parser-blocking resources.

However, within the setup of these cases, critical CSS is only applied within unique pageviews (first pageview within a new session). We will be ignoring other datasets within the critical CSS case analysis.

fetchpriority outcome in a typical setup

Let’s start with the non-critical CSS cases, as most sites aren’t using critical CSS. So this situation is more likely to apply to most sites and shops out there.

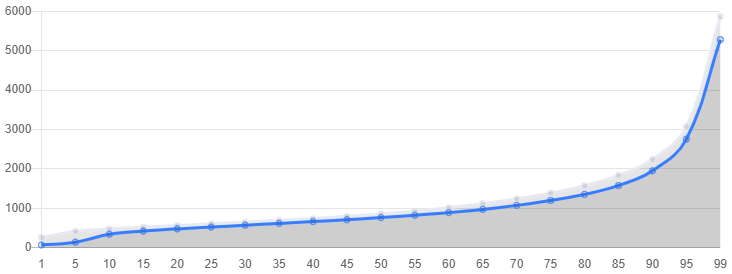

And then the key question: instead of just the 75th or 80th percentile, will everyone else within other device and connectivity conditions benefit as well? The following chart is showing LCP value in milliseconds across different percentiles.

And that’s showing a clear yes, as the blue line representing experiences with fetchpriority is showing lower numbers and thus faster LCP experiences than the grey line. If you don’t like deep-diving, then you can stop reading right here 😉

LCP breakdown

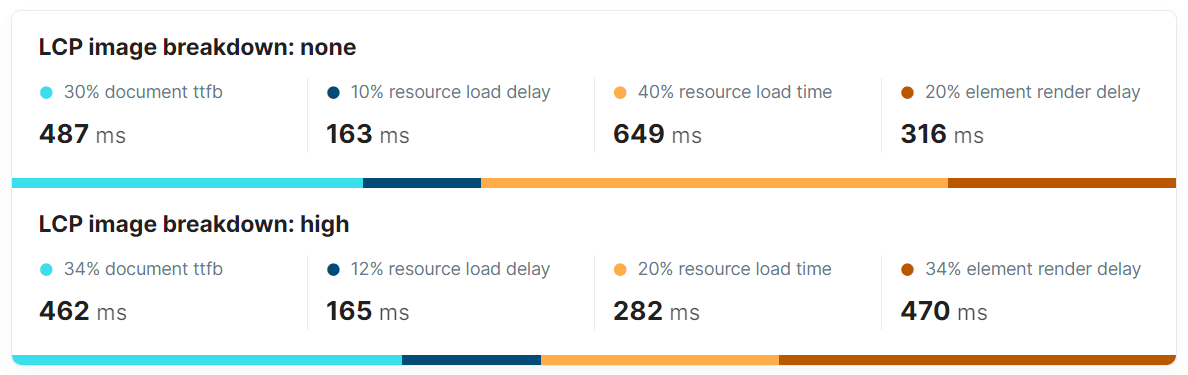

If we want to know what caused this overall improvement, we have to dive a bit deeper. Say hi to the webvitals attribution build once again, while looking at 23k productpage experiences.
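A minimal sketch of how the four LCP sub-parts can be read from the attribution build's metric object. The `lcpBreakdown` helper is a hypothetical name. To my knowledge the load-time field is called `resourceLoadTime` in web-vitals v3 and `resourceLoadDuration` from v4 on, so the sketch accepts both; treat that as an assumption to verify against the version you use.

```javascript
// Normalize the LCP attribution object into the four sub-parts (all in ms).
function lcpBreakdown(metric) {
  const a = metric.attribution || {};
  return {
    ttfb: a.timeToFirstByte ?? 0,
    resourceLoadDelay: a.resourceLoadDelay ?? 0,
    // Field name differs between library versions (assumption, see lead-in).
    resourceLoadTime: a.resourceLoadDuration ?? a.resourceLoadTime ?? 0,
    elementRenderDelay: a.elementRenderDelay ?? 0,
  };
}

// Made-up metric object shaped like an onLCP() callback argument.
const sample = {
  name: 'LCP',
  value: 1000,
  attribution: {
    timeToFirstByte: 400,
    resourceLoadDelay: 100,
    resourceLoadTime: 300,
    elementRenderDelay: 200,
  },
};
console.log(lcpBreakdown(sample));
```

The four parts are consecutive phases, so they should add up to the LCP value itself.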

Trends

Trends then become as follows when comparing a fetchpriority="high" situation with a situation without a fetchpriority strategy:

This is what you’re seeing:

- Apparently, TTFB of product pages (light blue) improved by 6%

- The load delay of the image (dark blue) regressed by 4%, but improved by 8% within the other Lightspeed case. In the end both negligible.

As of this point, the impact of implementing fetchpriority="high" becomes interesting:

- Resource load time (yellow) improved significantly: 55% (45% within the other Lightspeed case)

- And the element render delay (brown) regressed by 49% (only a 15% regression within the other Lightspeed case)

The conclusion of the above is that the overall win was achieved thanks to the improved resource load time (the image travelled well ahead of a potential traffic jam). The render delay (let’s say: leaving the highway) is failing us. This could be caused by the overall healthy setup: as the image arrives sooner, it is now ending up in an exit lane traffic jam; it already had to wait a bit before, but is now forced to wait even longer for other browser tasks. Overall, still a win though.
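The percentages in these trends are relative changes between the two situations. A minimal sketch of that arithmetic, assuming "improvement" means a lower timing after the change; `improvementPct` is a hypothetical helper and the millisecond values are made up, not taken from the charts.

```javascript
// Relative change of a timing, as a percentage of the "before" value.
// Positive = improvement (timing went down), negative = regression.
function improvementPct(beforeMs, afterMs) {
  const pct = ((beforeMs - afterMs) * 100) / beforeMs;
  return Math.round(pct * 10) / 10; // one decimal place
}

// Made-up example values in milliseconds.
console.log(improvementPct(400, 180)); // load time went down
console.log(improvementPct(100, 149)); // render delay went up
```

A large relative regression on a small absolute number can still be outweighed by a smaller relative win on a large one, which is why the absolute numbers matter as well.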

Absolute numbers and distribution

In case you like absolute numbers instead of trends and fancy distribution bars, here’s another screenshot showing the information in a different way and going from no fetchpriority strategy to a fetchpriority="high" setup:

It now becomes very obvious where potential gains are coming from when applying the fetchpriority="high" to your LCP candidates: The resource load time used to be 40% of overall LCP timing and only accounts for 20% in the new situation. We can still see that the element render delay regressed.
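The distribution view boils down to each sub-part's share of the total. A minimal sketch, with a hypothetical `breakdownShares` helper and made-up timings chosen so the resource load time lands on a 40% share:

```javascript
// Express each LCP sub-part as a rounded percentage of their sum.
function breakdownShares(parts) {
  const total = Object.values(parts).reduce((sum, ms) => sum + ms, 0);
  const shares = {};
  for (const [name, ms] of Object.entries(parts)) {
    shares[name] = total === 0 ? 0 : Math.round((ms * 100) / total);
  }
  return shares;
}

// Made-up timings in milliseconds.
console.log(breakdownShares({
  ttfb: 300,
  resourceLoadDelay: 100,
  resourceLoadTime: 400,
  elementRenderDelay: 200,
}));
```

Note that shares always shift together: when one sub-part shrinks, the others take up a larger share even if their absolute timings did not change.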

Fetchpriority behaviour per pageviewtype

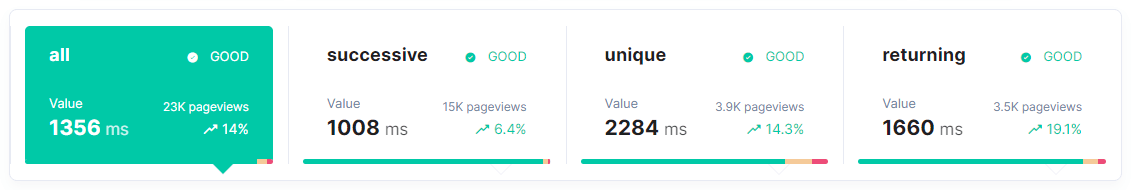

Knowing that the task of rendering the image could end up somewhere in a traffic jam, should we expect these detailed outcomes to differ per pageview type (cached/successive versus uncached/unique pageview)?

That’s what I was able to test in our monitoring:

This looks promising. Not only will fetchpriority result in an improvement across all percentiles, it even looks good across all pageviewtypes.

Breakdown per pageviewtype

Let’s break these down as well:

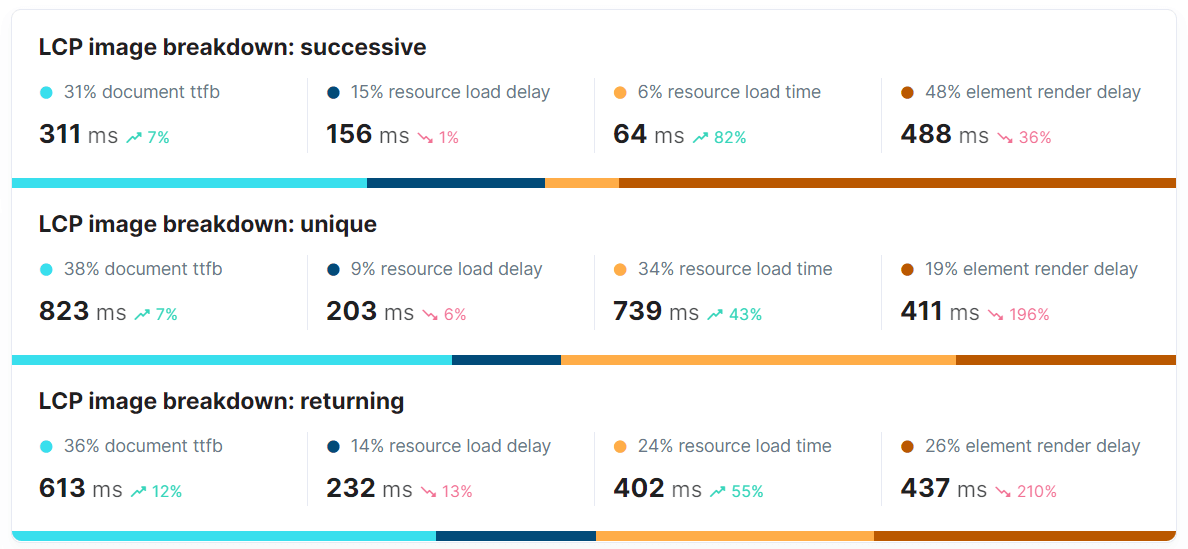

TTFB of unique and returning pageviews are higher for obvious reasons (DNS lookup, TCP connection and maybe additional waiting time taking place). Other trends are as follows:

- We see TTFB improvements, but those are not related to fetchpriority usage, so we can ignore them.

- Resource load delay became slightly worse within unique (6%) and returning (13%) pageviews. But we’re talking about a maximum regression of 35ms here, while the other Lightspeed case was showing an improvement. So there is no convincing outcome here; it could be caused by interval noise or by possible changes in the timing of browser processing as prioritization changed.

- Being ahead of highway congestion once the priority is boosted, the resource load time is significantly better (82% improvement) within successive pageviews (render blocking resources being cached already).

- Element render delay is still showing a regression (36%) within successive pageviews, but below-average. Potentially because the browser could start to style the page sooner as CSS could be fetched from the browser cache. So, although the exit lane traffic jam is still there, it isn’t as big anymore at this stage.

- As a result, the exit lane traffic jam is bigger within unique and returning pageview experiences. But as the resource load time gains make up for the element render delay regressions (both on a relative as well as absolute scale), we are able to see an overall win when adding fetchpriority to our LCP candidate.

To support these outcomes, I did the same test with the homepage instead of 23k product page visits. The resource load time improvement within successive visits is stuck at 55% instead of jumping to 82%. Although the 82% improvement really is the (positive) outlier here when comparing it with the other Lightspeed shop.

The element render delay regression is even reaching 250% within unique and returning homepage experiences. And the resource load delay is never showing a regression within homepage nor listing pageviews. But generally speaking, it’s showing the same trends.

fetchpriority gains when using critical CSS

I’m going to admit right away that this is where things start to fluctuate more than I expected amongst the 4 cases that are using critical CSS. The outlines were as follows though:

- Resource load delay actually improved during unique pageviews within all 4 cases (20%, 25%, 28% and 29%), which only showed minor regressions before.

- Before, using fetchpriority turned out to massively improve the resource load time. But not when using critical CSS. Instead of the earlier 43% improvement within unique pageviews, I could now see negative numbers.

Summarized, with TTFB fluctuations excluded, results per attribution are as follows, where an arrow-up means that the fetchpriority="high" attribute resulted in an improvement:

| Case no | load delay | load time | render delay | overall |
|---|---|---|---|---|
| 1. | ⇧ 29% | ⇩ 8% | ⇩ 4% | ⇩ 1.5% |
| 2. | ⇧ 25% | ⇩ 25% | ⇧ 21% | ⇩ 2.8% |
| 3. | ⇧ 28% | ⇩ 35% | ⇧ 13% | ⇧ 2.4% |
| 4. | ⇧ 20% | ⇧ 10% | ⇩ 5% | ⇧ 8.4% |

As a result, I didn’t see huge LCP wins within unique pageviews when critical CSS and fetchpriority="high" were used at the same time. For overall LCP, the outcome fluctuated between a 2.8% regression and an 8.4% improvement instead of a solid 14.3% improvement for unique and non-critical CSS pageviews. It is important to note though that other sites using critical CSS likely have a different codebase and could be benefitting way more from fetchpriority.

fetchpriority can move the (LCP) image from something like request #30 and delayed fetch for several seconds to a much earlier fetch. That you’re starting from such an already-optimized base is awesome but reduces the available gains to be had (glad to see it helps in the aggregate though, even in that case)

Patrick Meenan in an email conversation

Trend explanation

An explanation of these different outcomes when using critical CSS could be as follows:

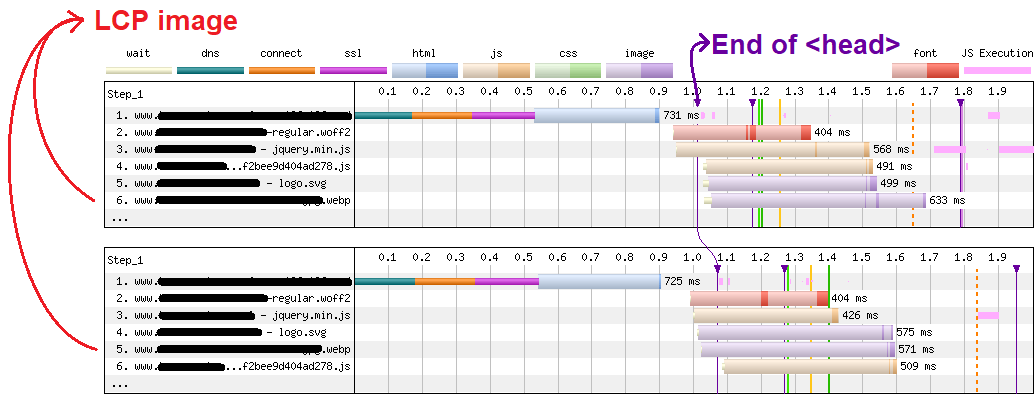

- Resource load delay improved as fetchpriority="high" changed the stream dependency. We can see this happening in the Webpagetest waterfall below:

  - The webp image (which is the LCP) now became resource number 5 instead of 6.

  - The start of the download of the webp image is moving to the left, happening sooner in time.

  - It now even starts to download before the end of the <head> section.

  - By accident in the setup, the logo (19.6kb) also received a fetchpriority="high", hence getting the same treatment.

- Going by what the Webpagetest waterfalls are showing us, I wouldn’t expect a regressed resource load time. But RUM data is showing that it regressed, up to 35%. It’s possible that more work is being done by the browser during resource load time instead of before load, which caused things to move around a bit. It’s even possible that more data and investigation would be needed here.

- Render delay is fluctuating, although often with convincing wins. However, it could regress if -depending on page characteristics- the image would already arrive during (long) browser tasks that would otherwise run as well, but a later detected image just wouldn’t need to wait for it as long.

Conclusion

You might not see notable LCP improvements within (unique) pageviews when your site is using critical CSS on top of an already-optimized base. In the non-critical CSS cases (but still a somehow optimized base), using fetchpriority resulted in significant LCP improvements.

As -from a development perspective- it’s way more convenient to add attributes at all times instead of conditionally, you could start adding the fetchpriority attribute to your LCP candidates today. Running webpagetests and collecting your own RUM data is still advised though!

Next to learning more about fetchpriority via web vitals attribution, I once again experienced that HTTP prioritization is a complex matter.

A thank you

I would like to thank Stoyan for providing the community with yet another webperf edition and great set of insights and reading-material, Patrick Meenan for providing me with breakdown feedback, Robin Marx for pushing me to add LCP breakdown and preload-scanner nuances and sources and while I’m at it, also perf.now for allowing the community to meet and learn in great company.