Ana's been part of web performance solutions teams since 2020 and is passionate about webperf, AI and UX. She currently helps make the web faster at Uxify.

For years, traditional optimization techniques like caching and resource compression have been the go-to solutions for speeding up websites. They’ve been effective at reducing load times and easing server strain. Performance tools have made these advanced techniques more accessible to site owners, whether they have technical expertise or not. However, as user expectations for near-instant experiences grow, these methods are starting to hit their limits. In this article, we’ll look at how speculative loading can work alongside traditional optimizations to deliver better performance results.

The evolution of web performance solutions

In the 1990s, caching was a game-changer. By keeping frequently used content like images and scripts closer to users, it made websites load faster and reduced stress on servers. The introduction of HTTP/1.1 in 1997 refined this further with the Cache-Control header, which complemented the older Expires header and gave browsers finer control over how long cached content stays fresh.

In the 27 years since, the web has transformed. Rich media, global traffic, and personalized experiences pushed traditional caching to its limits. In response, multi-layered strategies and automated tools emerged, making caching accessible to non-technical site owners.

But today’s tools do a lot more than store files: they actively optimize them, which has earned them the broader name web performance solutions. From compressing images to extracting critical CSS and lazy-loading fonts, these tools refine how content is delivered.

The result? A more holistic approach to performance, meeting modern requirements like Core Web Vitals, SEO standards, and the growing expectations of users.

Web performance tools and Core Web Vitals

Web performance tools have become indispensable for improving Core Web Vitals (CWV), Google’s metrics for evaluating a website’s loading speed, interactivity, and visual stability. For many sites, these tools are what make passing CWV possible at all.

What’s interesting is that the thresholds for CWV metrics are specifically set based on what traditional optimization techniques can realistically achieve. These are not arbitrary benchmarks; they’re grounded in research and reflect the capabilities of current technology.

That said, even Google acknowledges that the best user experience would involve no waiting at all. So why are CWV thresholds set at levels below the ideal? The answer lies in the nature of the web itself.

The limitations of web performance solutions

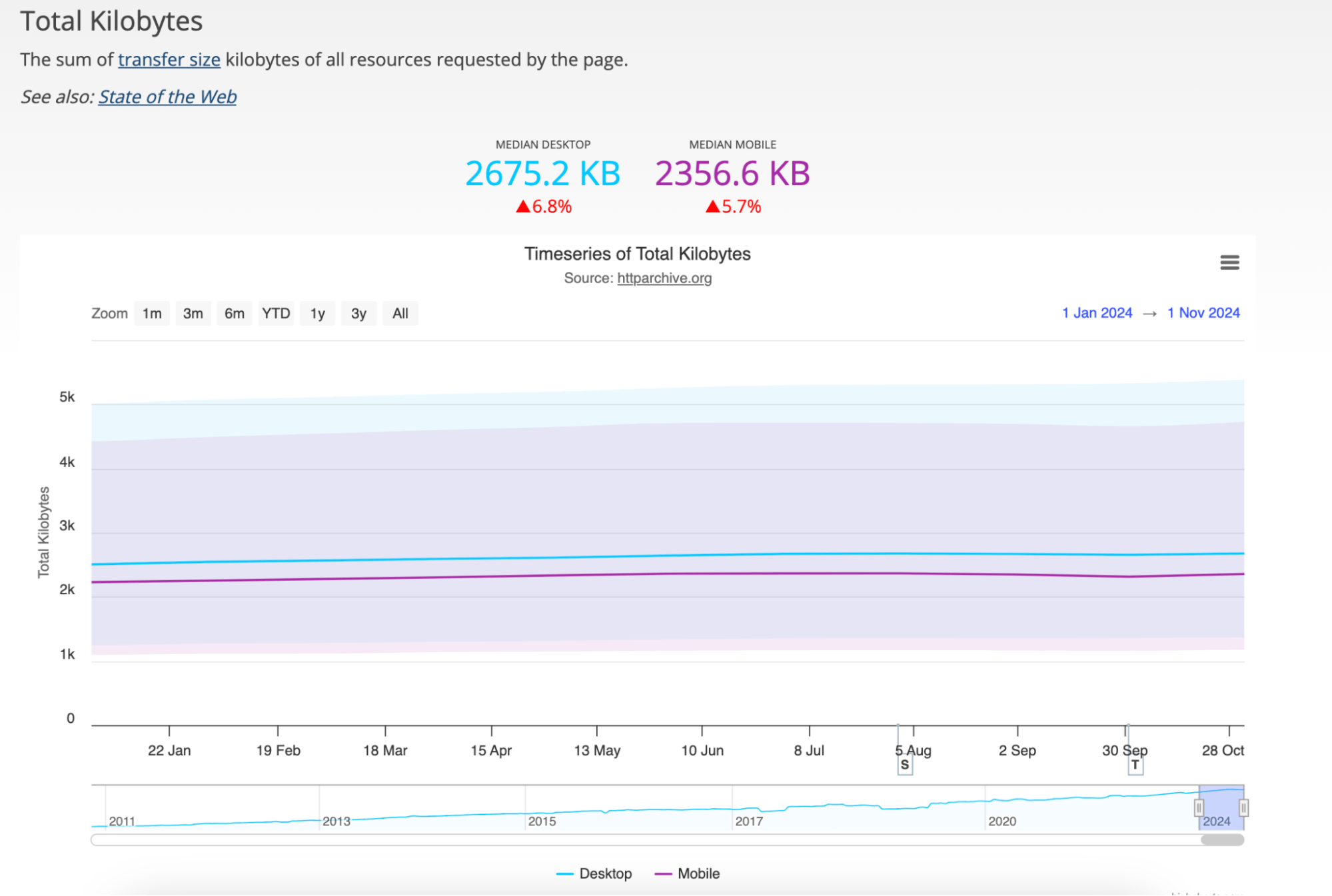

Over the years, web page sizes have grown significantly, driven by richer media, high-resolution images, custom fonts, and increasingly complex JavaScript. In 2024, the median desktop page size reached 2.68 MB, with mobile pages close behind at 2.36 MB. The increasing use of video content and interactive elements continues to add to this growth.

Source: HTTP Archive

At the same time, improvements in internet speeds have slowed, especially in regions where infrastructure upgrades are difficult. Ookla’s latest connectivity report highlights that while high-speed internet access has expanded, median speeds in many areas—particularly on mobile networks—remain largely static. As page sizes grow and the speed at which data can be transferred and rendered nears physical and technological limits, achieving further performance gains with traditional optimization methods becomes increasingly difficult.

Techniques like minification, compression, and caching remain effective but are inherently limited by the time needed to request, download, and render resources. They also focus primarily on content that has already been requested. To go a step further, some web performance solutions use strategies like cache warmup to raise cache hit ratios, or browser hints to preload resources. However, these approaches rely on predefined rules and have a narrow scope, so users can still end up waiting for pages to load and render.

Delays seem inevitable, as they are inherent to the request/response model operating over a global network.

To break through this plateau and deliver near-instant experiences, a new approach is essential—one that moves beyond traditional optimizations and tackles performance from a predictive, user-focused angle.

The Speculation Rules API, introduced by the Chrome team, represents a breakthrough in this direction. This API enables developers to specify which pages the browser should prefetch or prerender based on anticipated user actions, allowing for near-instant navigation. This approach opens the door to significantly faster, more seamless user experiences.

A brief overview of the Speculation Rules API

The Speculation Rules API introduces two key techniques: prefetching and prerendering. Each offers distinct trade-offs between speed and resource use, allowing developers to choose the most suitable approach based on user behavior.

Prefetching fetches a page’s HTML document ahead of the navigation and keeps it ready, reducing TTFB with minimal resource overhead. It does not fetch the page’s subresources or render anything, so it is best suited to scenarios where moderate speed improvements suffice.

Prerendering takes this further by fully rendering the page in the background, ensuring immediate interactivity when the user navigates to it. This makes it particularly useful for pages where fast responses are critical, such as e-commerce product listings, dashboards, or media-rich content.
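To make the two techniques concrete, here is a minimal sketch of a speculation rules payload that lists URLs to prefetch and to prerender. The URLs (`/blog/`, `/pricing`) and the helper names are hypothetical; only the JSON shape (`prefetch`/`prerender` arrays of rules with `source: "list"` and `urls`) follows the API.

```typescript
// Sketch only: helper names and example URLs are hypothetical.
interface ListRule {
  source: "list";
  urls: string[];
}

interface SpeculationRules {
  prefetch?: ListRule[];
  prerender?: ListRule[];
}

// Build a rule set from explicit URL lists.
function buildListRules(prefetchUrls: string[], prerenderUrls: string[]): SpeculationRules {
  const rules: SpeculationRules = {};
  if (prefetchUrls.length > 0) {
    // Prefetch: fetch only the HTML document ahead of the navigation.
    rules.prefetch = [{ source: "list", urls: prefetchUrls }];
  }
  if (prerenderUrls.length > 0) {
    // Prerender: load and render the whole page, including its JavaScript, in the background.
    rules.prerender = [{ source: "list", urls: prerenderUrls }];
  }
  return rules;
}

// Serialize the rules into the inline script the browser reads.
// Escaping "<" keeps a URL from closing the <script> element early;
// JSON parsers read "\u003c" back as "<".
function toScriptTag(rules: SpeculationRules): string {
  const json = JSON.stringify(rules).replace(/</g, "\\u003c");
  return `<script type="speculationrules">${json}</script>`;
}

console.log(toScriptTag(buildListRules(["/blog/"], ["/pricing"])));
```

The same JSON can simply be written into the page’s HTML. When injecting it from JavaScript instead, `HTMLScriptElement.supports("speculationrules")` can be used to check for support first.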

The evolution of prerendering

Chrome’s approach to prerendering has gone through several iterations in response to the challenges of creating faster, more seamless browsing experiences. The initial attempt, <link rel="prerender" href="/next-page">, fully loaded a page in the background but never gained broad cross-browser support. Chrome later replaced it with NoState Prefetch, which fetched resources like images and scripts but didn’t render the page or run JavaScript, so it couldn’t deliver a truly “instant” experience.

With the Speculation Rules API, Chrome revisits full prerendering, but with a more thoughtful, flexible design. The API allows developers to prepare pages completely, including JavaScript execution, before a user clicks, enabling near-instant interactions. Unlike earlier methods, this approach balances efficiency with the need for modern security and privacy standards, offering developers greater control over how and when prerendering is applied.
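Part of that control comes from document rules and the `eagerness` setting: instead of listing URLs, a rule can match links on the current page and let the browser speculate when the user signals intent. The sketch below assumes a hypothetical `/logout` exclusion and a hypothetical `no-prerender` opt-out class; `href_matches`, `selector_matches`, `not`, `and`, and the eagerness values are part of the rule syntax.

```typescript
// Sketch of a document rule; excluded paths and the opt-out class are hypothetical.
type Condition =
  | { href_matches: string }
  | { selector_matches: string }
  | { not: Condition }
  | { and: Condition[] };

interface DocumentRule {
  source: "document";
  where: Condition;
  eagerness: "immediate" | "eager" | "moderate" | "conservative";
}

// Prerender same-origin links found on the current page, minus exclusions,
// once the user signals intent ("moderate" eagerness).
function prerenderLinksRule(excludedPaths: string[], optOutClass: string): { prerender: DocumentRule[] } {
  const conditions: Condition[] = [
    { href_matches: "/*" }, // any same-origin path
    ...excludedPaths.map((path): Condition => ({ not: { href_matches: path } })),
    { not: { selector_matches: `.${optOutClass}` } }, // links the page marks as off-limits
  ];
  return {
    prerender: [{ source: "document", where: { and: conditions }, eagerness: "moderate" }],
  };
}

console.log(JSON.stringify(prerenderLinksRule(["/logout"], "no-prerender"), null, 2));
```

Excluding state-changing URLs such as logout links matters because a prerender actually requests the page. On desktop, `moderate` roughly means speculating when the user hovers over a link or starts to click it.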

| Feature | Old Prerender | Speculation Rules API Prerender |
|---|---|---|
| Implementation | `<link rel="prerender">` | `<script type="speculationrules">` |
| Efficiency | Heavy resource usage | Intelligent, resource-efficient |
| Flexibility | One page per hint | Flexible rules for multiple pages |
| Security | Exposed to security risks | Same-origin by default; some APIs deferred until activation |
| Browser Adoption | Deprecated | Supported in Chromium browsers |

What about rel="prefetch"?

If prefetching sounds familiar, that’s because it’s been around for years in the form of <link rel="prefetch" href="next-page.html">. This hint lets developers instruct browsers to fetch assets like HTML, JavaScript, or images in advance, storing them in the cache for faster access when users navigate to the next page. While effective for specific resources, traditional prefetching doesn’t render content, limiting its impact on performance.
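Because both mechanisms exist, a common pattern is progressive enhancement: use Speculation Rules where supported and fall back to the older hint elsewhere. This is a minimal sketch; the helper names are hypothetical, and the support flag is passed in so the function stays testable. In a browser it could come from `HTMLScriptElement.supports?.("speculationrules") ?? false`.

```typescript
// Hypothetical helper: escape characters that would break out of an HTML attribute.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Emit Speculation Rules prefetch when supported, else the legacy <link rel="prefetch"> hint.
function prefetchMarkup(urls: string[], supportsSpeculationRules: boolean): string {
  if (supportsSpeculationRules) {
    // Navigation prefetch of the listed documents only.
    const rules = { prefetch: [{ source: "list", urls }] };
    const json = JSON.stringify(rules).replace(/</g, "\\u003c");
    return `<script type="speculationrules">${json}</script>`;
  }
  // Legacy hint: a low-priority fetch into the HTTP cache; nothing is rendered.
  return urls.map((url) => `<link rel="prefetch" href="${escapeAttr(url)}">`).join("\n");
}

console.log(prefetchMarkup(["/next-page.html"], false)); // <link rel="prefetch" href="/next-page.html">
```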

| Feature | Old Prefetch | Speculation Rules API Prefetch |
|---|---|---|
| Implementation | `<link rel="prefetch">` | `<script type="speculationrules">` |
| Heuristics | None | Browser-based and developer rules |
| Efficiency | Often wasted bandwidth | Smarter, context-aware prefetching |
| Granularity | Static and manual | Conditional and dynamic |
| Integration | Independent | Works with prerender and other APIs |
| Scope | Any resource type (HTML, scripts, images) | Documents for future navigations |
| Resource Prioritization | Low | Adaptive, based on context |

What about resource waste?

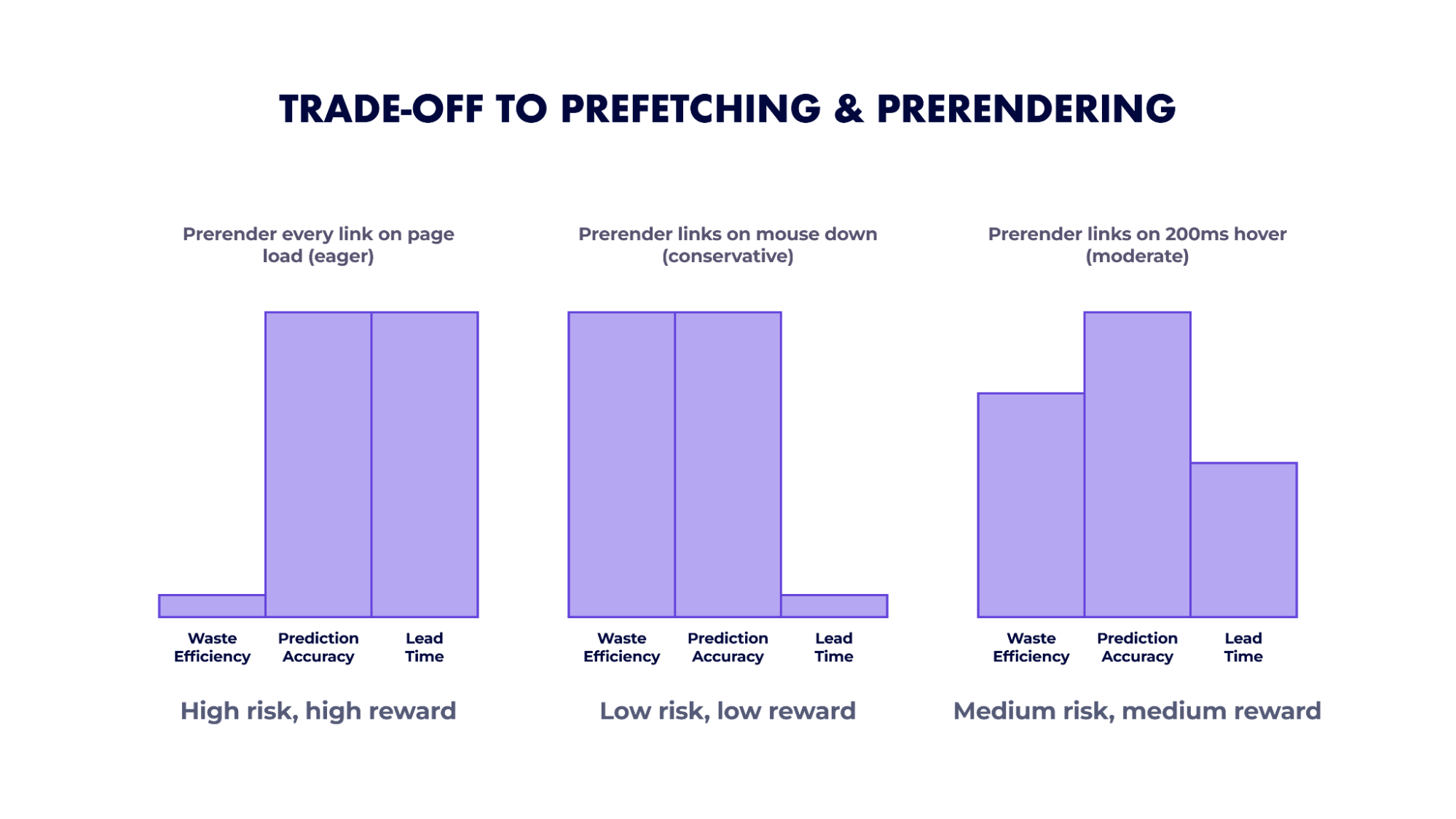

The Speculation Rules API introduces powerful tools for improving web performance, but its resource-intensive processes mean it’s not practical to simply prerender an entire site and expect optimal results. Prefetching and prerendering reduce delays by preparing pages in advance, but applying these techniques indiscriminately can waste bandwidth, strain servers, and negatively impact users on limited data plans or low-powered devices.

To use these tools effectively, developers should target high-probability interactions—prioritizing frequently accessed links or paths based on actual usage data. By targeting predictable user behavior, they can preload only what’s likely to be needed, minimizing waste and ensuring optimal resource usage.

Source: Uxify
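One simple way to act on usage data is to rank the pages visitors actually open next and speculate only on the likely ones. The sketch below is an illustration, not the prediction model used in the case study. It assumes a hypothetical input of next-page counts aggregated from your own analytics, plus two tunable parameters, `minProbability` and `maxTargets`.

```typescript
// Hypothetical input: counts of which page visitors opened next after a given page.
type TransitionCounts = Record<string, number>;

// Return up to `maxTargets` next pages whose observed probability is at least
// `minProbability`, most likely first.
function pickSpeculationTargets(counts: TransitionCounts, minProbability: number, maxTargets: number): string[] {
  const total = Object.values(counts).reduce((sum, n) => sum + n, 0);
  if (total === 0) return [];
  return Object.entries(counts)
    .map(([url, n]) => ({ url, probability: n / total }))
    .filter(({ probability }) => probability >= minProbability)
    .sort((a, b) => b.probability - a.probability)
    .slice(0, maxTargets)
    .map(({ url }) => url);
}

// Made-up counts for illustration only.
const fromHomePage: TransitionCounts = { "/pricing": 420, "/blog/": 310, "/careers": 40, "/legal": 10 };
console.log(pickSpeculationTargets(fromHomePage, 0.2, 2));
```

With these made-up counts, only `/pricing` (about 54%) and `/blog/` (about 40%) clear the 20% threshold, so the low-traffic pages are never speculated on. Raising the threshold trades coverage for less wasted bandwidth.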

Chromium provides built-in safeguards to manage resource allocation:

- Eager/Immediate settings: Up to 50 prefetches and 10 prerenders.

- Moderate/Conservative settings: Capped at 2 prefetches and 2 prerenders; when the cap is reached, the oldest speculation is replaced by the newest (first in, first out).
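The first-in, first-out behavior of the moderate/conservative caps listed above can be illustrated with a small model. This is a simplified sketch, not Chromium’s implementation. In particular, treating a repeated URL as a no-op is an assumption made to keep the model short.

```typescript
// Simplified model (not Chromium's code) of a capped, first-in-first-out set of speculations.
class SpeculationQueue {
  private readonly limit: number;
  private readonly active: string[] = [];

  constructor(limit: number) {
    this.limit = limit;
  }

  // Start speculating on `url`; returns the URL evicted to stay within the cap, if any.
  add(url: string): string | undefined {
    if (this.active.includes(url)) return undefined; // already speculated: no change in this model
    this.active.push(url);
    return this.active.length > this.limit ? this.active.shift() : undefined;
  }

  get urls(): readonly string[] {
    return [...this.active];
  }
}

const queue = new SpeculationQueue(2); // cap for moderate/conservative eagerness
queue.add("/pricing");
queue.add("/blog/");
console.log(queue.add("/docs")); // "/pricing" is evicted (oldest first)
console.log(queue.urls);
```

Since hover-triggered speculations churn as the pointer moves, keeping only the most recent ones bounds the cost of a user who skims across many links.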

By regularly analyzing performance metrics and refining rules, developers can ensure the API delivers meaningful speed improvements without excessive resource use. Prerendering, when applied thoughtfully, can enhance user experience while avoiding inefficiencies.

Impact of speculative navigation on Core Web Vitals

Speculative navigation improves user experience by preparing pages before the user navigates to them, even on sites already optimized for speed. Prerendering partially or fully loads a page in advance, directly benefiting Core Web Vitals:

- Largest Contentful Paint: Prerendering can bring LCP close to zero because the largest visual element on the page, whether it’s a hero image, video, or main text block, is already loaded and rendered by the time the user navigates. Traditional loading requires fetching, processing, and rendering assets in real time; with prerendering, these steps are handled in advance, so content can be presented as soon as the user arrives.

- Cumulative Layout Shift: Layout shifts usually happen when images or fonts load after the initial layout, but with prerendering these elements are typically already in place, so the page is stable and visually consistent on arrival.

- Interaction to Next Paint: By handling the initial resource loading and layout tasks in the background, prerendering frees up the main thread. This means that the page is ready to respond instantly to user interactions like clicks, taps, or scrolls, improving INP. JavaScript, which is often render-blocking, is parsed and prepared in advance, ensuring that user interactions aren’t delayed by loading tasks. As a result, prerendering minimizes the likelihood of delays between user actions and the corresponding visual updates, creating a seamless and highly interactive experience.
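The near-zero LCP comes down to where the clock starts. For a prerendered page, the web-vitals library measures LCP from `activationStart` (the moment the user actually navigated, exposed as `PerformanceNavigationTiming.activationStart`) and clamps the result at zero. The function below is an illustrative restatement of that calculation, not the library’s code.

```typescript
// Illustrative: LCP for a page that may have been prerendered, measured from activation.
// `lcpStartTime` is the LCP entry's startTime; `activationStart` is 0 when the page
// was not prerendered.
function lcpFromActivation(lcpStartTime: number, activationStart: number): number {
  // Anything painted before the user navigated counts as zero wait.
  return Math.max(lcpStartTime - activationStart, 0);
}

console.log(lcpFromActivation(900, 5000)); // prerendered and painted before the click: 0
console.log(lcpFromActivation(900, 400)); // activated mid-load: 500
console.log(lcpFromActivation(1800, 0)); // regular navigation: 1800
```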

Even if the user navigates to a prerendered page before it is fully loaded, the prerendered content provides a “head start,” ensuring that critical resources are already loaded or partially loaded. In such cases, the prerendered page is moved to the main frame and continues loading without restarting the process, which makes the transition appear smoother. This early loading process means fewer visible loading indicators, faster transitions, and a more fluid experience, especially for complex or content-heavy pages.

Source: web.dev
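A prerendered page starts running before the user sees it. Work that should only happen on a real visit, such as counting a page view, is therefore usually deferred until activation. This sketch relies on the real `document.prerendering` flag and `prerenderingchange` event, but describes `document` through a minimal hypothetical interface so it can be exercised outside a browser.

```typescript
// Minimal shape of the parts of `document` this sketch uses.
interface PrerenderAwareDocument {
  prerendering?: boolean;
  addEventListener(type: "prerenderingchange", listener: () => void, options: { once: boolean }): void;
}

// Run `callback` immediately for normal loads, or once the prerendered page is activated.
function whenActivated(doc: PrerenderAwareDocument, callback: () => void): void {
  if (doc.prerendering) {
    doc.addEventListener("prerenderingchange", callback, { once: true });
  } else {
    callback();
  }
}
```

In a page this would be called as `whenActivated(document, () => sendPageView())`, where `sendPageView` stands in for a hypothetical analytics call.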

The cumulative effect is a site that feels almost instantaneous—users experience fewer delays, improved stability, and faster response times.

Real-world data: speculative loading & traditional optimizations

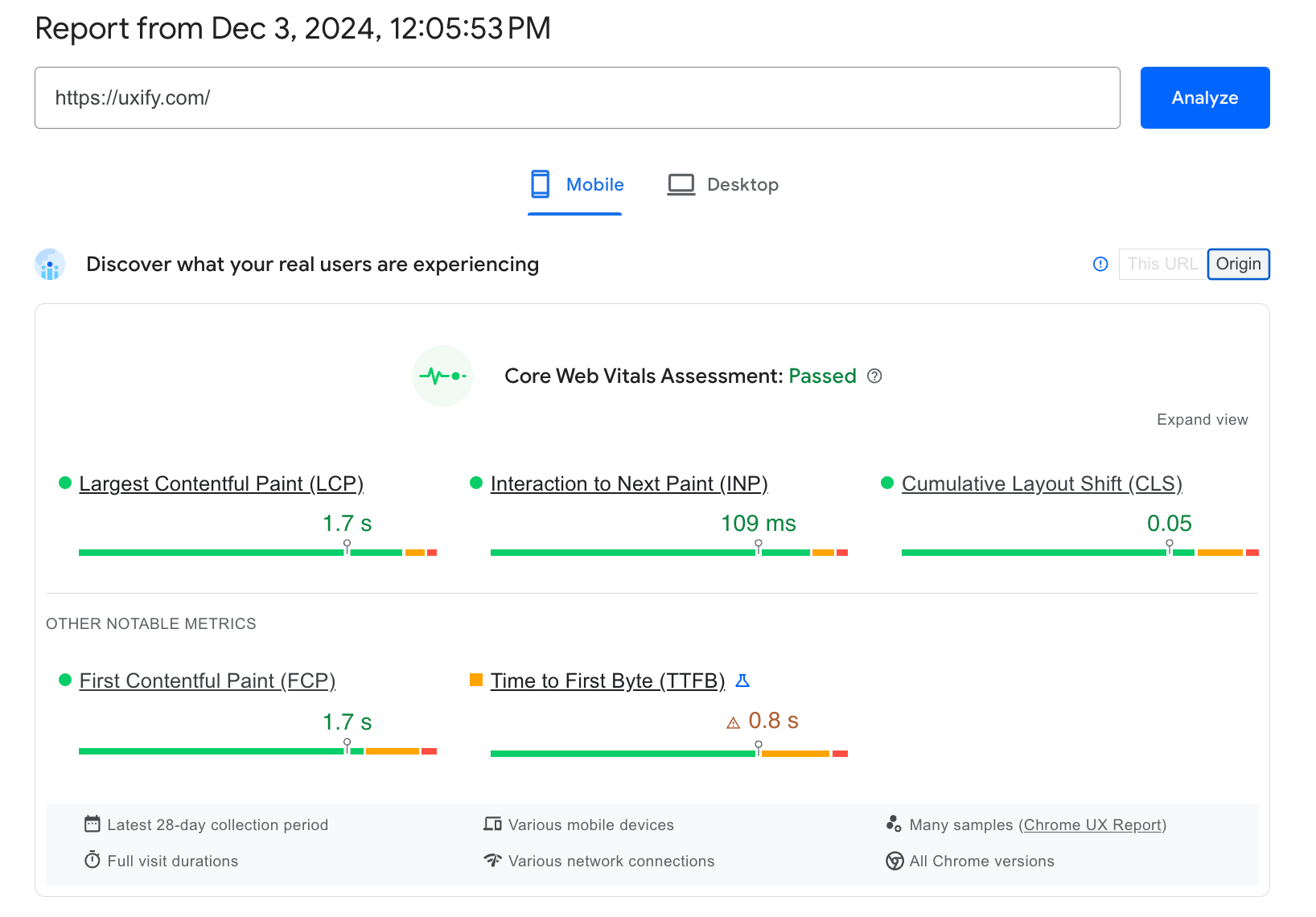

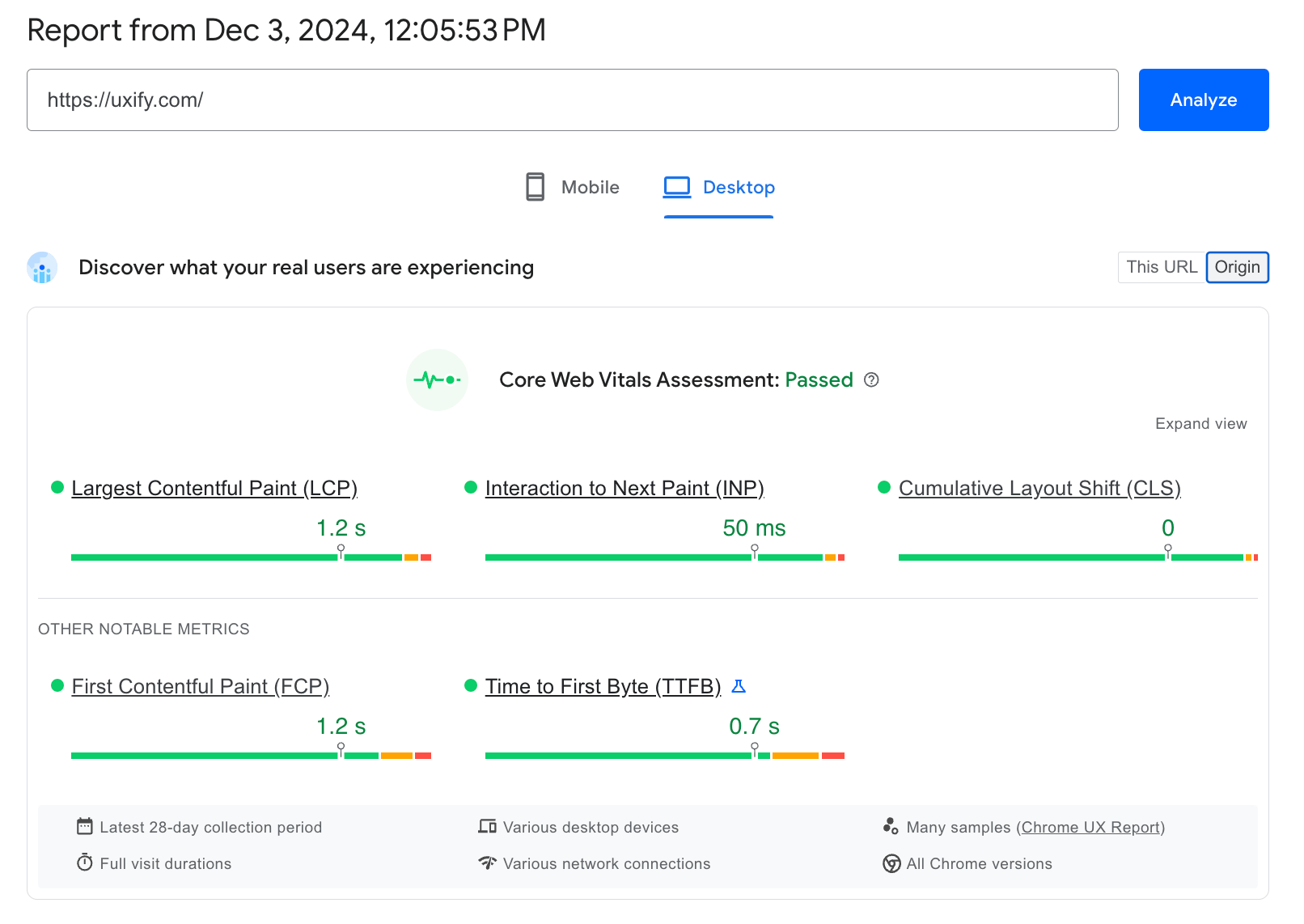

In theory, speculative loading can improve web performance beyond what traditional strategies achieve. To evaluate its practical impact, we tested speculative navigation alongside traditional optimizations on our own website, which already passed Core Web Vitals using conventional methods.

Below are the results for a 30-day period (November 1–30), comparing two strategies:

- Traditional optimizations and Speculation Rules API: A combination of conventional optimizations and a solution implementing prerender and prefetch strategies, leveraging advanced AI models to predict and optimize the most likely next pages.

- Traditional optimizations: Conventional optimizations only, without speculative loading.

Data methodology:

This data was collected with a RUM script, built on the web-vitals library, that tracks visitors’ experiences on Chromium-based browsers, including Chrome, Edge, Opera, and others. Small discrepancies compared to CrUX reporting are expected because of the additional browsers included and other well-documented differences in measurement methodology. The data reflects the entire page lifecycle, but ad blockers prevent some experiences from being captured.
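The tables report 75th-percentile (p75) values. As a rough illustration of how such a figure is derived from raw RUM samples, here is a minimal sketch. The sample shape and values are made up, not the study’s data, and the nearest-rank definition is an assumption; tools that interpolate between samples can differ slightly. web-vitals reports a `navigationType` on each metric (with the value `"prerender"` for prerendered pages), which the sketch uses to segment samples.

```typescript
// Assumed shape of one RUM sample; field names are illustrative.
interface Sample {
  value: number; // metric value, e.g. LCP in ms
  navigationType: string; // e.g. "navigate" or "prerender"
}

// Nearest-rank percentile: the smallest value such that at least p% of samples are <= it.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no samples");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length)); // 1-based
  return sorted[rank - 1];
}

// p75 across all samples, or only those with a given navigation type.
function p75(samples: Sample[], navigationType?: string): number {
  const values = samples
    .filter((s) => navigationType === undefined || s.navigationType === navigationType)
    .map((s) => s.value);
  return percentile(values, 75);
}

// Made-up samples for illustration only.
const lcpSamples: Sample[] = [
  { value: 1200, navigationType: "navigate" },
  { value: 3100, navigationType: "navigate" },
  { value: 2000, navigationType: "navigate" },
  { value: 1500, navigationType: "navigate" },
  { value: 300, navigationType: "prerender" },
  { value: 0, navigationType: "prerender" },
  { value: 450, navigationType: "prerender" },
];
console.log(p75(lcpSamples)); // 2000
console.log(p75(lcpSamples, "prerender")); // 450
```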

Device type: Mobile

| Optimization type | LCP (p75, ms) | INP (p75, ms) | CLS (p75) |
|---|---|---|---|
| Traditional optimizations and Speculation Rules API | 1078 | 104 | 0.031 |
| Traditional optimizations | 2144 | 120 | 0.04 |
| Improvement (%) | 49.7% | 13.3% | 22.5% |

Device type: Desktop

| Optimization type | LCP (p75, ms) | INP (p75, ms) | CLS (p75) |
|---|---|---|---|
| Traditional optimizations and Speculation Rules API | 528 | 48 | 0 |
| Traditional optimizations | 1774 | 50 | 0 |
| Improvement (%) | 70.2% | 4% | – |
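The "Improvement (%)" rows in the tables above are relative reductions from the traditional-only baseline: (baseline − with speculation) / baseline × 100. A small helper reproduces them. The function name is illustrative, and rounding to one decimal matches how the tables are presented.

```typescript
// Relative improvement, rounded to one decimal place.
function improvementPct(baseline: number, withSpeculation: number): number | null {
  if (baseline === 0) return null; // no meaningful ratio when the baseline is already 0
  return Math.round(((baseline - withSpeculation) / baseline) * 1000) / 10;
}

console.log(improvementPct(2144, 1078)); // mobile LCP: 49.7
console.log(improvementPct(1774, 528)); // desktop LCP: 70.2
console.log(improvementPct(0, 0)); // desktop CLS: null, shown as "–"
```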

The case study shows that the Speculation Rules API provides measurable improvements even on pages already optimized to meet Core Web Vitals thresholds using techniques like caching, minification, and compression. While these pages perform well, the API offers additional enhancements in speed, responsiveness, and visual stability, demonstrating its value as a complementary optimization tool.

This is not an isolated result: previous studies on the topic have also found significant LCP improvements from speculative prerender and prefetch strategies.

How speculative navigations complement traditional speed optimization techniques

Combining web performance solutions with speculative loading creates a dynamic approach to achieving minimal load times and seamless user experiences. Modern caching solutions do more than store resources closer to users—they optimize delivery with features like image compression, code minification, and resource prioritization, reducing file sizes and cutting down on server requests. These enhancements significantly speed up loading by delivering lighter assets more efficiently.

Speculative loading complements traditional performance tools by prefetching or prerendering pages based on predicted user behavior, reducing perceived load times to nearly zero. By preparing content in advance, it enables instant transitions, eliminating delays and creating a fluid browsing experience.

The synergy between these two approaches is powerful: caching solutions make pages lighter and more efficient, which makes prerendering faster and helps speculative loading work even better. By prefetching or prerendering content before it’s needed, speculative loading extends the benefits of caching further, delivering near-instant page loads. Together, these techniques reduce delays and create a smoother, faster browsing experience than either strategy achieves alone.