Last year I published an article introducing the concept of service worker caching as a web performance optimization technique. This year I will expand on how to use the service worker cache by covering cache invalidation.

Invalidation should be applied properly to ensure your websites work offline, load instantly and include the freshest content possible without abusing the site’s storage quota.

There are several means at your disposal to ensure that your cached assets stay in sync with the content on your server. This is part of a broader topic known as cache invalidation. Cache invalidation is probably the most complex part of progressive web applications and, I think, often overlooked.

When you update your website’s HTML, CSS, images, etc., you want your freshest content to be what the end user sees. Unfortunately, there are many steps along the way that can foil your desire.

For years we’ve leaned on cache headers like the Cache-Control header to designate how our files are cached. There are many places where our files can be cached around the Internet. The browser’s cache is the first location we think about. Your files may be cached in network routers and proxy servers. Caching around the Internet is controlled by the Cache-Control header.

But the goal of service worker caching is to make the network a progressive enhancement, or not necessary. This means you need to create an engine to manage your assets cleanly and effectively, ensuring the user has the best experience possible. When you update a resource on the server you need some mechanism in place to reasonably update this on the client as well.

If locally cached assets become stale you risk serving your user an out-of-date or mixed experience due to files being out of sync. Because the Cache API does not have a built-in invalidation feature, you must create one for your applications.

Each domain is allocated a maximum amount of disk space in which it can persist data. This quota is shared across all persistence mediums; the Cache API is just one. There is no standard rule as to how much space is allocated, but most browsers adhere to a de facto quota standard.

Larger hard drives, with more free space, have a larger quota. Less disk space means a smaller quota. Mobile devices tend to have the smallest available space. You should plan for the smallest quota to make sure your applications work their best.

Don’t get too worried; most websites should comfortably fit within a few megabytes of storage. Having a good cache invalidation strategy means you won’t need to worry about exceeding your quota and potentially breaking the user experience.
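If you want to see how close a site is to its quota, the browser's StorageManager exposes `navigator.storage.estimate()`, which resolves to an object with `usage` and `quota` in bytes. The following is a minimal sketch, not part of my production code: the helper names and the 80% warning threshold are assumptions chosen for illustration.

```javascript
// Summarize a storage estimate of the shape { usage, quota } (both in bytes).
function summarizeEstimate({ usage = 0, quota = 0 }) {
  const percentUsed = quota > 0 ? (usage / quota) * 100 : 0;
  return {
    usedMB: usage / (1024 * 1024),
    quotaMB: quota / (1024 * 1024),
    percentUsed,
    // Hypothetical threshold: treat 80% usage as a signal to purge something.
    nearLimit: percentUsed >= 80,
  };
}

// `storage` defaults to navigator.storage. It is a parameter so the function
// can be exercised with a stand-in object outside the browser.
async function checkQuota(storage = navigator.storage) {
  if (!storage || typeof storage.estimate !== "function") {
    return null; // the estimate API is not available in this environment
  }
  return summarizeEstimate(await storage.estimate());
}
```

Calling `checkQuota()` from a page or a service worker and logging the result is usually enough to tell whether your caches are a few megabytes or something much larger.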

There are several strategies I have been employing to ensure the latest content is available in my progressive web apps. This is not a complete set, but I feel it is a good place for everybody to start.

Updating By Hashnames

A common strategy to manage updating assets is to use hash names. This strategy is commonly used to bust cache. Hash names allow you to set a very long time to live, a year or more, and not worry about updating the file. When the file is updated it gets a new name, which is a unique URL to cache.

Creating unique names requires running the file’s content through a hash algorithm, which generates an effectively unique value. This works exceptionally well with resources like stylesheets, scripts and images. They are not part of the page’s Internet address, but are referenced from the HTML. This can, however, be problematic for managing your HTML, because a page typically lives at a fixed address, such as https://yourdomain.com/html-file/, or index.html as the default document.

A common way to create hash names is performing an MD5 hash of the file’s content. Personally, I like to use a node script to manage hashing file content for names using the node crypto module.

    const crypto = require('crypto');

    // Return the MD5 hex digest of a file's content
    function getHash(data) {
        var md5 = crypto.createHash('md5');
        md5.update(data);
        return md5.digest('hex');
    }

Once I get the hash value, I use the new value as the file’s name. For example, you’ll often see CSS and JavaScript files referenced by these hash names.
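To make the renaming step concrete, here is a small sketch that turns a file name and its content into a hashed name. The `name.hash.ext` pattern and the `hashedFileName` helper are assumptions for illustration; your build may place the hash somewhere else in the name.

```javascript
const crypto = require("crypto");
const path = require("path");

// Build a hashed file name such as css/site.<md5>.css from the file's content.
// The name.<hash>.ext layout is an assumed convention, not a requirement.
function hashedFileName(fileName, content) {
  const hash = crypto.createHash("md5").update(content).digest("hex");
  const { dir, name, ext } = path.posix.parse(fileName);
  return path.posix.join(dir, `${name}.${hash}${ext}`);
}
```

Because the hash depends only on the content, running this twice on an unchanged file produces the same name, while any edit produces a new one.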

Now when you update the file, even by a single character, a new hash is generated. You are responsible, as part of your rendering process, to update the HTML reference to that particular file by the new hash name.
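One way to update those references during a build is a simple search and replace over the rendered HTML. This is only a sketch under the assumption that assets are referenced through quoted `href` and `src` attributes; `rewriteReferences` and the shape of `nameMap` are hypothetical, and a real build would more likely use a template or an HTML parser.

```javascript
// Escape characters that have special meaning in a regular expression.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Replace each original asset path with its hashed path.
// nameMap is a hypothetical object of the form { "/css/site.css": "/css/site.<hash>.css" }.
function rewriteReferences(html, nameMap) {
  let output = html;
  for (const [original, hashed] of Object.entries(nameMap)) {
    // Only touch the path when it is the full value of an href or src attribute.
    const pattern = new RegExp(
      `((?:href|src)=["'])${escapeRegExp(original)}(["'])`,
      "g"
    );
    output = output.replace(pattern, (match, prefix, suffix) => prefix + hashed + suffix);
  }
  return output;
}
```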

There are drawbacks to this approach when using service worker caching. You need a way to manage how many times you cache the same file. Each time a new version is created a new file name is also created.

As new file names are created, new, unique requests are used to cache the responses. Over time this means that your cache can become polluted with multiple versions of the same file. Older versions won’t be used, but they do take up space and can eventually cause problems.

Your goal with service worker caching is to not consume the entire device’s hard drive, but to responsibly cache responses. Make sure you have a routine to clean up stale cached responses.
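A cleanup routine for hashed assets can be as simple as deleting every cached entry that is not in the current list of asset URLs. The sketch below assumes the cache is dedicated to hashed static assets and that you have a list of the current URLs available (for example from your build); `purgeStaleVersions` is a hypothetical helper name.

```javascript
// Delete every entry in `cache` whose URL is not in `currentUrls`.
// Assumes the cache holds only hashed static assets, so anything not listed is stale.
async function purgeStaleVersions(cache, currentUrls, baseUrl) {
  // cache.keys() yields Request objects with absolute URLs, so normalize the list.
  const keep = new Set(currentUrls.map((url) => new URL(url, baseUrl).href));
  const requests = await cache.keys();
  const stale = requests.filter((request) => !keep.has(request.url));
  await Promise.all(stale.map((request) => cache.delete(request)));
  return stale.map((request) => request.url);
}

// In a service worker this might be called as (cache name is hypothetical):
// caches.open("static-assets")
//   .then((cache) => purgeStaleVersions(cache, CURRENT_ASSETS, self.location.origin));
```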

Limiting the Number of Cached Responses

One of the simpler cache invalidation methods is limiting the number of items persisted in your service worker cache. I call this Max Item Invalidation. Your caching logic needs to check to see how many items are stored in a particular cache and if you’ve reached the maximum number then remove at least one before adding a new one.

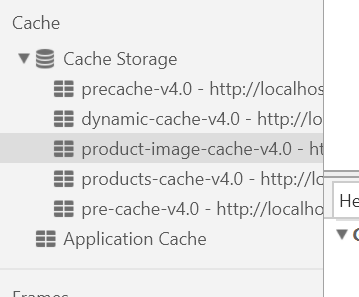

To make this strategy work I recommend creating multiple named caches. These caches should correlate to different types of responses. For example, in my Fast Furniture demonstration application I maintain named caches for product pages and product images. This strategy lets me set different caching and invalidation strategies.
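As a rough illustration of splitting responses across named caches, the sketch below picks a cache name from the request path. The cache names mirror the ones in this article's Fast Furniture configuration, but the URL patterns and the default cache name are assumptions made up for the example.

```javascript
// Hypothetical routing rules: the URL patterns are assumed, not taken from the real app.
const CACHE_RULES = [
  { pattern: /^\/images\/products\//, cacheName: "product-image-cache-v4.0" },
  { pattern: /^\/product\//, cacheName: "products-cache-v4.0" },
];
const DEFAULT_CACHE = "dynamic-cache-v4.0"; // hypothetical catch-all cache

// Return the name of the cache that should hold the response for `url`.
function cacheNameFor(url, baseUrl = "https://example.com") {
  const { pathname } = new URL(url, baseUrl);
  const rule = CACHE_RULES.find(({ pattern }) => pattern.test(pathname));
  return rule ? rule.cacheName : DEFAULT_CACHE;
}
```

Keeping the rules in a single array means each cache can later be given its own limits without touching the fetch handler.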

The cache object’s keys method returns a Promise that resolves to an array of keys, which are Request objects. They are listed in the order they were added to the cache. This means you can remove the oldest items by removing the first items. Determine how many items need to be purged, then loop through the keys, deleting the oldest entries.

    cache.keys().then((keys) => {
        if (keys.length > options.max) {
            // keys are in insertion order, so the first entries are the oldest
            let purge = keys.length - options.max;

            for (let i = 0; i < purge; i++) {
                cache.delete(keys[i]);
            }
        }
    });

Too bad you can’t simply use an Array slice method here!

I set a different maximum number of records for each cache. The application needs more product images than product pages. Product images are used on a variety of pages, primarily categories, search results and individual product pages.

A visitor may not visit all of the products in a particular category, but these pages display at least 10 product images. To match the user behavior, I allow many more product images to be stored than product pages.

    {
        "cacheName": "product-image-cache-v4.0",
        "invalidationStrategy": "maxItems",
        "strategyOptions": {
            "max": 100
        }
    },
    {
        "cacheName": "products-cache-v4.0",
        "invalidationStrategy": "maxItems",
        "strategyOptions": {
            "max": 20
        }
    }

By controlling the number of items persisted in cache for these two assets I limit the amount of space the browser needs to cache. You will need to experiment to determine what numbers work best for your application.
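To tie the configuration to the purge logic, here is a sketch that walks a list of configuration objects like the ones above and trims each cache to its limit. The function names are hypothetical, and `cacheStorage` is a parameter (defaulting to the global `caches`) only so the logic can be tried outside a service worker.

```javascript
// Remove the oldest entries until the cache holds at most `max` items.
async function trimCache(cache, max) {
  const keys = await cache.keys(); // insertion order: oldest first
  const excess = keys.length - max;
  for (let i = 0; i < excess; i++) {
    await cache.delete(keys[i]);
  }
  return Math.max(0, excess); // number of entries removed
}

// Apply every "maxItems" rule in `configs` to its named cache.
async function applyMaxItems(configs, cacheStorage = caches) {
  const removed = {};
  for (const { cacheName, invalidationStrategy, strategyOptions } of configs) {
    if (invalidationStrategy !== "maxItems") continue;
    const cache = await cacheStorage.open(cacheName);
    removed[cacheName] = await trimCache(cache, strategyOptions.max);
  }
  return removed;
}
```

Awaiting each delete means the returned promise only settles once the purge is finished, which is useful if you hand it to `event.waitUntil`.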

For demonstration purposes Fast Furniture caches up to 20 products and 100 product images. This allows me, as a developer and instructor, to demonstrate the max items principle more easily. For real production apps those limits might be much higher to ensure that my customers have the best experience possible.

Limiting the Time a Response is Cached

The next level of cache invalidation control involves managing how long a response can be cached. This can be very tricky as you don’t always have access to the Cache-Control header to determine how long an asset should be cached.

You don’t need access to the Cache-Control header to create a good routine to invalidate responses based on their lifespan. You do have access to the date an asset was cached. This can be used very effectively.

    let date = new Date(response.headers.get("date"));
    console.log(date.toLocaleString());

Again, I like to partition different types of assets into different caches. By doing this I can create some logic to manage how long different assets are cached. Just like I set the maximum number of elements that can be stored in a cache, you can also define how long an item can be cached.

By accessing a cached response’s ‘date’ header you can determine how long it has been cached. The Cache API stores a response with the headers it arrived with, so the ‘date’ header normally reflects when the server generated the response, which is close to the time it was cached.

    let date = new Date(response.headers.get("date")),
        current = Date.now();

    // 604800 seconds = 7 days
    if (!DateManager.compareDates(current, DateManager.addSecondsToDate(date, 604800))) {
        // either update the cached response or delete the one on hand

        // update the response
        cache.add(request);

        // or

        // delete the response
        cache.delete(request);
    }

If an asset has been cached longer than you would like then you can purge it and your normal cache strategies will kick in and retrieve a fresh version from the network. You could also trigger an automatic update from the network even if a request for that asset is not made. I will caution you that this can create unnecessary network chatter, so use it sparingly.
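For readers who do not have a date helper library on hand, the same check can be sketched with plain timestamps. This is an illustrative version, not the code I run in production: `isExpired` and `purgeExpired` are hypothetical names, and treating a response with a missing or unreadable ‘date’ header as stale is an assumption you may want to reverse.

```javascript
const ONE_WEEK_SECONDS = 604800; // 7 days, the same value used above

// True when the response's date header is older than maxAgeSeconds.
function isExpired(response, maxAgeSeconds, now = Date.now()) {
  const header = response.headers.get("date");
  if (!header) return true; // assumption: no date header means stale
  const cachedAt = new Date(header).getTime();
  if (Number.isNaN(cachedAt)) return true; // unparseable date, also treated as stale
  return now - cachedAt > maxAgeSeconds * 1000;
}

// Delete every expired entry in the cache and report how many were removed.
async function purgeExpired(cache, maxAgeSeconds, now = Date.now()) {
  const requests = await cache.keys();
  let removed = 0;
  for (const request of requests) {
    const response = await cache.match(request);
    if (response && isExpired(response, maxAgeSeconds, now)) {
      await cache.delete(request);
      removed++;
    }
  }
  return removed;
}
```

Once an entry is deleted, the next request for it falls through to whatever caching strategy normally handles that route.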

One thing to note is that making a network request before the Cache-Control time to live expires may just return the same version, because it may still be in the browser cache. You should coordinate your Cache-Control time to live values with the maximum time allowed for assets in the named cache.
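When the two lifetimes cannot be lined up, one option is to ask fetch to skip the browser's HTTP cache for the refresh. The sketch below uses the standard `cache: "reload"` request option; `refreshEntry` is a hypothetical helper, and `fetchFn` is a parameter only so the function can be exercised with a stand-in.

```javascript
// Re-fetch `url` from the network, bypassing the HTTP cache, and store the result.
async function refreshEntry(cache, url, fetchFn = fetch) {
  // cache: "reload" makes fetch go to the network without consulting the HTTP cache first.
  const response = await fetchFn(url, { cache: "reload" });
  if (!response.ok) {
    return false; // keep the existing entry rather than caching an error response
  }
  await cache.put(url, response);
  return true;
}
```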

More Advanced Invalidation Scenarios

There are two places you can perform these invalidations: when the service worker is first executed, and any time a new item is cached. It’s advisable to refactor your invalidation logic outside of any event handler. This way you can reuse the code in multiple places. It also makes automated testing easier.

You can execute your cleanup logic when the service worker is created, performing a full scan and update of your cached assets. Just be cautious as this might delay your service worker responding to the event that woke it from the sleep state.
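One way to reduce that delay is to start the cleanup from inside the event handler with `event.waitUntil`, after the response has been handed off, and to do it only once per worker startup. This is a sketch of that idea under my own assumptions: `scheduleCleanupOnce` is a hypothetical helper, and `cleanup` stands for whatever invalidation function you have refactored out.

```javascript
// Module-level state is reset every time the browser restarts the service worker,
// so this flag effectively means "once per startup".
let cleanupScheduled = false;

// Start `cleanup` in the background the first time this is called.
// event.waitUntil keeps the worker alive until cleanup settles without blocking the response.
function scheduleCleanupOnce(event, cleanup) {
  if (cleanupScheduled) return false;
  cleanupScheduled = true;
  event.waitUntil(
    Promise.resolve()
      .then(cleanup)
      .catch((error) => console.warn("Cache cleanup failed:", error))
  );
  return true;
}

// In a service worker this might look like (handler names are hypothetical):
// self.addEventListener("fetch", (event) => {
//   event.respondWith(fromCacheOrNetwork(event.request));
//   scheduleCleanupOnce(event, runInvalidation);
// });
```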

You’ll also need to define configuration logic to manage your invalidation strategies. This can be done as a constant value in your service worker or you can load external rules. Personally, I lean on an external file I call a cache manifest. Do not confuse this cache manifest with the appCache manifest.

These manifest files are JSON configuration defining caching logic for either named caches or routes.

    [{
        "url": "/",
        "strategy": "precache-dependency",
        "ttl": 604800
    }, {
        "url": "/js/app.js",
        "strategy": "precache-dependency",
        "ttl": 604800
    }, {
        "url": "/css/site.css",
        "strategy": "precache-dependency",
        "ttl": 604800
    }...]

By including an external cache manifest file I have a lot of control over what is cached. It also gives me flexibility to change my rules without updating my service worker.
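Once the manifest is loaded, looking up the rule for a request is a small amount of code. The sketch below assumes entries are matched by exact path, which is a simplification; `ttlFor` and the one-day fallback are hypothetical choices for illustration.

```javascript
// Find the manifest entry whose "url" matches the request path exactly.
function findRule(manifest, requestUrl, baseUrl = "https://example.com") {
  const { pathname } = new URL(requestUrl, baseUrl);
  return manifest.find((entry) => entry.url === pathname) || null;
}

// Return the time to live (in seconds) for a request, or a fallback when no rule exists.
// The 86400 second (one day) fallback is an assumed default.
function ttlFor(manifest, requestUrl, fallbackTtl = 86400) {
  const rule = findRule(manifest, requestUrl);
  return rule && typeof rule.ttl === "number" ? rule.ttl : fallbackTtl;
}
```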

Utilizing this methodology also leans on using IndexedDB as a persistence medium. This gives me the ability to not only store my current configuration, but also track additional meta-values that I may need and that the Cache API does not give me access to. Of course, this is a much more complex technique and not where I advise you to start your journey.

    fetchCacheManifest() {
        let cm = this;

        // Load a JSON file containing an array of files to be cached
        return fetch(cm.CACHE_MANIFEST).then(function (response) {
            // Once the contents are loaded, convert the raw text to a JavaScript object
            return response.json()
                .then(function (manifest) {
                    // Persist the transformed manifest and keep a copy in memory
                    cm.idbkv.put(cm.CACHE_MANIFEST_KEY,
                        cm.updateManifestTTL(cm.transformManifest(manifest)));
                    cm.manifest = manifest;

                    return manifest;
                });
        });
    }
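To give a feel for the kind of meta-values worth tracking, here is a sketch that records when each URL was cached in a key-value store and checks it against a time to live. The `store` object is an assumption: any wrapper with promise-returning `get(key)` and `put(key, value)` methods would do, and the helper names are hypothetical.

```javascript
// Record the time a URL was cached, preserving any other metadata already stored.
// `store` is an assumed async key-value wrapper exposing get(key) and put(key, value).
async function recordCachedAt(store, url, now = Date.now()) {
  const meta = (await store.get(url)) || {};
  await store.put(url, { ...meta, cachedAt: now });
}

// True when no timestamp is recorded or the entry is older than ttlSeconds.
async function isStale(store, url, ttlSeconds, now = Date.now()) {
  const meta = await store.get(url);
  if (!meta || typeof meta.cachedAt !== "number") return true;
  return now - meta.cachedAt > ttlSeconds * 1000;
}
```

Tracking the timestamp yourself means the check does not depend on response headers, which helps for responses whose headers you cannot read.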

Summary

Managing your service worker cache invalidation is a key step in making your progressive web app a rich, robust experience. It ensures that the content your customer sees and interacts with is the freshest content possible. It also guards your app from overstepping the cache quota and potentially causing a complete purge of everything you stored.

Even if you don’t employ these strategies, you should from time to time force some sort of cache purge and update process. This can be done in real time as the user requests individual assets, or behind the scenes with an automatic process.

For example, you can trigger this update when a service worker starts up or in response to a background event like a push notification. Just be sure to let the user know what you’re up to.

If creating this code from scratch seems intimidating, Workbox has similar strategies built in to help manage your cache life cycle. The nice thing about a tool like Workbox is the scary code is abstracted away, allowing you to write more application-specific logic.

Maintaining a fresh cache means that you are providing the best experience possible. You want to cache as much of your web application as possible, but do so responsibly.